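1. Set the hostname

**Set the hostname on each of the three nodes, running the matching command on each machine**

hostnamectl set-hostname master   # on the master node

hostnamectl set-hostname slave1   # on the first worker node

hostnamectl set-hostname slave2   # on the second worker node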

2. Disable the firewall

2.1 Stop the firewall

systemctl stop firewalld

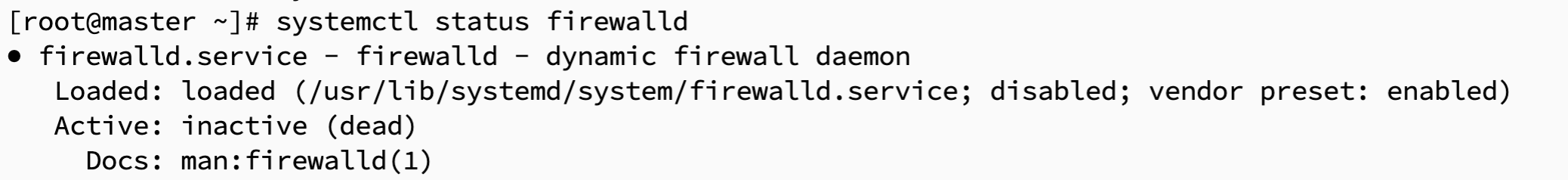

2.2 Disable the firewall at boot

systemctl disable firewalld

2.3 Check that the firewall is stopped

systemctl status firewalld
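The runtime state and the boot-time state of the firewall are separate things, so it can help to print both in one line. The following is a minimal sketch, assuming a systemd host; `fw_summary` is a hypothetical helper name, and the function prints `unknown` when `systemctl` returns nothing.

```shell
#!/usr/bin/env bash
# Print the runtime and boot-time state of firewalld in one line.
# fw_summary is a hypothetical helper name, not part of systemd.
fw_summary() {
  local active enabled
  # is-active / is-enabled exit non-zero for states such as "inactive"
  # or "disabled", so "|| true" keeps the printed state without failing.
  active="$(systemctl is-active firewalld 2>/dev/null || true)"
  enabled="$(systemctl is-enabled firewalld 2>/dev/null || true)"
  echo "firewalld active=${active:-unknown} enabled=${enabled:-unknown}"
}

fw_summary
```

After steps 2.1 and 2.2 the line would be expected to show an inactive, disabled service on each node.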

3. Map IP addresses to hostnames

3.1 Edit the hosts file on the master node

vim /etc/hosts

3.2 Add the IP address and hostname of all three nodes

192.168.31.164 master

192.168.31.66 slave1

192.168.31.186 slave2
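The same entries can be appended by script so that re-running the step does not create duplicates. This is a sketch only: `add_host` is a hypothetical helper, and `HOSTS_FILE` defaults to a scratch file so it can be tried safely; pointing it at `/etc/hosts` (as root) is an assumption about how it would be used on a real node.

```shell
#!/usr/bin/env bash
# HOSTS_FILE defaults to a scratch file; set HOSTS_FILE=/etc/hosts on a real node.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# Append "ip name" unless a line already ends with or contains that hostname.
add_host() {
  local ip="$1" name="$2"
  if ! grep -qE "[[:space:]]$name([[:space:]]|\$)" "$HOSTS_FILE"; then
    echo "$ip $name" >> "$HOSTS_FILE"
  fi
}

add_host 192.168.31.164 master
add_host 192.168.31.66  slave1
add_host 192.168.31.186 slave2
add_host 192.168.31.164 master   # already present: nothing is appended

cat "$HOSTS_FILE"
```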

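3.3 Distribute the master node's hosts file to the other nodes (xsync is a custom distribution script, not a standard command)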

xsync /etc/hosts
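Since `xsync` is not a standard command, the sketch below shows one way such a helper could behave, using `rsync` over SSH. It is not the script this guide uses: `sync_path`, `NODES` and `DRY_RUN` are hypothetical names, and the sketch assumes `rsync` is installed on every node. With `DRY_RUN=1` (the default here) it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for xsync: copy a path to the same parent
# directory on every other node.
NODES="${NODES:-slave1 slave2}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands only, 0 = run them

sync_path() {
  local path="$1" node parent
  parent="$(dirname "$path")"
  for node in $NODES; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "rsync -a $path $node:$parent/"
    else
      rsync -a "$path" "$node:$parent/"
    fi
  done
}

sync_path /etc/hosts
```

Leaving the trailing slash off the source path means a directory such as `/usr/local/src/hadoop` is copied as a whole into the parent directory on the target.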

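4. Configure passwordless SSH login

4.1 Generate a key pair on every node: a public key (id_rsa.pub) and a private key (id_rsa)

ssh-keygen -t rsa

4.2 Upload the public keys to the master node

**Note: run this command on every machine**

ssh-copy-id master

On master, distribute authorized_keys to slave1 and slave2

xsync ~/.ssh/authorized_keys

4.3 Test passwordless login to each node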

ssh master

ssh slave1

ssh slave2

5. Install the JDK

5.1 Download the JDK package

...

5.2 Extract the downloaded JDK package

tar -zxvf jdk-8u212-linux-x64.tar.gz -C /usr/local/src/

5.3 Rename the JDK directory

mv /usr/local/src/jdk1.8.0_212 /usr/local/src/java

5.4 Configure the Java environment variables

vim /etc/profile

# JAVA_HOME

export JAVA_HOME=/usr/local/src/java

export PATH=$PATH:$JAVA_HOME/bin

export JRE_HOME=/usr/local/src/java/jre

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JRE_HOME/lib

source /etc/profile

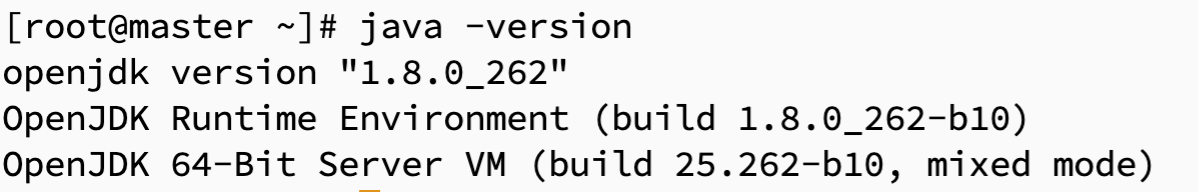
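As an alternative to editing `/etc/profile` by hand, many distributions also source every `*.sh` file under `/etc/profile.d`. The sketch below writes the Java variables to such a drop-in file. It is an assumption, not what this guide does: the file name `java.sh` is hypothetical, and `PROFILE_D` defaults to a scratch directory so the sketch can be tried without root.

```shell
#!/usr/bin/env bash
# PROFILE_D defaults to a scratch directory; use /etc/profile.d on a real node.
PROFILE_D="${PROFILE_D:-$(mktemp -d)}"

# Quoted heredoc: $PATH and $JAVA_HOME are written literally and
# expanded only when the file is sourced.
cat > "$PROFILE_D/java.sh" <<'EOF'
export JAVA_HOME=/usr/local/src/java
export PATH=$PATH:$JAVA_HOME/bin
EOF

# Load the variables into the current shell and show the result.
. "$PROFILE_D/java.sh"
echo "JAVA_HOME=$JAVA_HOME"
```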

5.5 Verify the Java installation

java -version

6. Install ZooKeeper

6.1 Download the ZooKeeper package

wget https://archive.apache.org/dist/zookeeper/zookeeper-3.5.7/apache-zookeeper-3.5.7-bin.tar.gz

6.2 Extract the ZooKeeper package

tar -zxvf apache-zookeeper-3.5.7-bin.tar.gz -C /usr/local/src/

mv /usr/local/src/apache-zookeeper-3.5.7-bin /usr/local/src/zookeeper

6.3 Configure the ZooKeeper environment variables

vim /etc/profile

#ZOOKEEPER

export ZOOKEEPER_HOME=/usr/local/src/zookeeper

export PATH=$PATH:$ZOOKEEPER_HOME/bin

source /etc/profile

6.4 Create the configuration file zoo.cfg

cd /usr/local/src/zookeeper/conf

vim zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/usr/local/src/zookeeper/data

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=master:2888:3888

server.2=slave1:2888:3888

server.3=slave2:2888:3888

6.5 Create the data directory and the myid file

cd /usr/local/src/zookeeper

mkdir -p data

cd /usr/local/src/zookeeper/data

echo "1" > myid

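6.6 Distribute ZooKeeper to the other nodes

xsync /usr/local/src/zookeeper

The copied myid still contains 1. Each node's myid must match its server.N entry in zoo.cfg, so change it on the other two nodes:

echo "2" > /usr/local/src/zookeeper/data/myid   # on slave1

echo "3" > /usr/local/src/zookeeper/data/myid   # on slave2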
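Each node's myid has to equal the N of its own `server.N` line in zoo.cfg, so the value 1 is only correct on master. The sketch below derives the id from the server list instead of typing it per node. `myid_for` is a hypothetical helper, and the heredoc is a demo copy of the three `server.N` lines; on a real node `ZOO_CFG` would be assumed to point at `/usr/local/src/zookeeper/conf/zoo.cfg`.

```shell
#!/usr/bin/env bash
# Demo copy of the server list from zoo.cfg.
ZOO_CFG="$(mktemp)"
cat > "$ZOO_CFG" <<'EOF'
server.1=master:2888:3888
server.2=slave1:2888:3888
server.3=slave2:2888:3888
EOF

# Print the N of the "server.N=<host>:..." line that names the given host.
myid_for() {
  sed -n "s/^server\.\([0-9][0-9]*\)=$1:.*/\1/p" "$ZOO_CFG"
}

for host in master slave1 slave2; do
  echo "$host -> myid $(myid_for "$host")"
done
```

On each node, the result could then be written with something like `myid_for "$(hostname)" > /usr/local/src/zookeeper/data/myid`, assuming the hostname matches the name used in zoo.cfg.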

7. Install Hadoop

7.1 Download the Hadoop package

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.1.3/hadoop-3.1.3.tar.gz

7.2 Extract the Hadoop package

tar -zxvf hadoop-3.1.3.tar.gz -C /usr/local/src/

mv /usr/local/src/hadoop-3.1.3 /usr/local/src/hadoop

7.3 Configure the Hadoop environment variables

vim /etc/profile

# HADOOP_HOME

export HADOOP_HOME=/usr/local/src/hadoop/

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

source /etc/profile

7.4 Edit the configuration files yarn-env.sh and hadoop-env.sh

echo "export JAVA_HOME=/usr/local/src/java" >> /usr/local/src/hadoop/etc/hadoop/yarn-env.sh

echo "export JAVA_HOME=/usr/local/src/java" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

echo "export HDFS_NAMENODE_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

echo "export HDFS_DATANODE_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

echo "export HDFS_SECONDARYNAMENODE_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

echo "export YARN_RESOURCEMANAGER_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

echo "export YARN_NODEMANAGER_USER=root">> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

echo "export HDFS_JOURNALNODE_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

echo "export HDFS_ZKFC_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

echo "export HADOOP_SHELL_EXECNAME=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

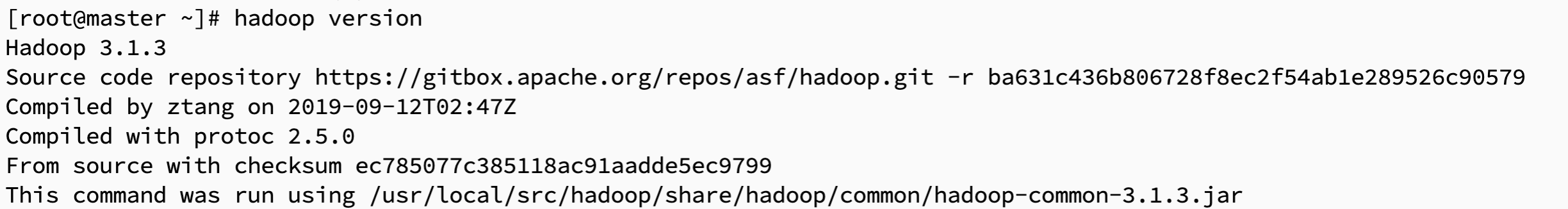
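Because `>>` appends unconditionally, running the `echo` commands a second time duplicates every line in hadoop-env.sh. A possible guard is sketched below; `append_once` is a hypothetical helper, only four of the variables are shown, and `ENV_FILE` defaults to a scratch file rather than the real hadoop-env.sh.

```shell
#!/usr/bin/env bash
# ENV_FILE defaults to a scratch file; on a real node it would be
# /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh.
ENV_FILE="${ENV_FILE:-$(mktemp)}"

# Append a line only if the exact same line is not already in the file.
append_once() {
  grep -qxF -- "$1" "$2" 2>/dev/null || echo "$1" >> "$2"
}

for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_JOURNALNODE_USER HDFS_ZKFC_USER; do
  append_once "export $var=root" "$ENV_FILE"
done
append_once "export HDFS_ZKFC_USER=root" "$ENV_FILE"   # already present: no change

cat "$ENV_FILE"
```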

7.5 Verify the Hadoop installation

hadoop version

8. Configure Hadoop

8.1 Edit core-site.xml

vim /usr/local/src/hadoop/etc/hadoop/core-site.xml

<configuration>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

<property>

<!-- HDFS address; with NameNode HA this points to the nameservice.

The value hacluster must match the nameservice defined in hdfs-site.xml -->

<name>fs.defaultFS</name>

<value>hdfs://hacluster</value>

</property>

<property>

<!-- Hadoop temporary directory; by default the data produced by the NameNode and DataNode is stored under this path -->

<name>hadoop.tmp.dir</name>

<value>/usr/local/src/hadoop/tmp</value>

</property>

<property>

<!-- ZooKeeper addresses (port 2181 comes from zoo.cfg) -->

<name>ha.zookeeper.quorum</name>

<value>master:2181,slave1:2181,slave2:2181</value>

</property>

</configuration>

8.2 Edit hdfs-site.xml

vim /usr/local/src/hadoop/etc/hadoop/hdfs-site.xml

<configuration>

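<!-- Safe-mode threshold: the fraction of blocks that must be reported before the NameNode leaves safe mode. A value of 1 requires all blocks and does not disable safe mode; a value of 0 or less skips the wait. The current name of this key is dfs.namenode.safemode.threshold-pct -->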

<property>

<name>dfs.safemode.threshold.pct</name>

<value>1</value>

</property>

<property>

<!-- Logical name of the NameNode cluster (the nameservice) -->

<name>dfs.nameservices</name>

<value>hacluster</value>

</property>

<property>

<!-- NameNodes that belong to the nameservice, named nn1 and nn2 -->

<name>dfs.ha.namenodes.hacluster</name>

<value>nn1,nn2</value>

</property>

<property>

<!-- RPC address and port of NameNode nn1 -->

<name>dfs.namenode.rpc-address.hacluster.nn1</name>

<value>master:9000</value>

</property>

<property>

<!-- HTTP address and port of NameNode nn1 -->

<name>dfs.namenode.http-address.hacluster.nn1</name>

<value>master:50070</value>

</property>

<property>

<!-- RPC address and port of NameNode nn2 -->

<name>dfs.namenode.rpc-address.hacluster.nn2</name>

<value>slave1:9000</value>

</property>

<property>

<!-- HTTP address and port of NameNode nn2 -->

<name>dfs.namenode.http-address.hacluster.nn2</name>

<value>slave1:50070</value>

</property>

<property>

<!-- Where the NameNode metadata is stored on the JournalNodes

(the list of JournalNodes that hold the edit log shared by the NameNodes) -->

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://master:8485;slave1:8485;slave2:8485/hacluster</value>

</property>

<property>

<!-- Local directory where each JournalNode stores the edit log -->

<name>dfs.journalnode.edits.dir</name>

<value>/usr/local/src/hadoop/tmp/dfs/journal</value>

</property>

<property>

<!-- Enable automatic NameNode failover -->

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<!-- Proxy class that clients use to locate the active NameNode after a failover -->

<name>dfs.client.failover.proxy.provider.hacluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<!-- Fencing method -->

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<!-- SSH private key used by sshfence (requires passwordless SSH) -->

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<!-- HDFS replication factor -->

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<!-- Directory where the NameNode stores its metadata -->

<name>dfs.namenode.name.dir</name>

<value>/usr/local/src/hadoop/tmp/dfs/name</value>

</property>

<property>

<!-- Directory where the DataNode stores its blocks -->

<name>dfs.datanode.data.dir</name>

<value>/usr/local/src/hadoop/tmp/dfs/data</value>

</property>

</configuration>
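Once core-site.xml and hdfs-site.xml are saved, the values Hadoop actually resolves can be read back with `hdfs getconf -confKey`, which helps catch a misspelled key. The wrapper below is a sketch; `show_conf` is a hypothetical helper name, and it prints a placeholder when `hdfs` is not on the PATH.

```shell
#!/usr/bin/env bash
# Print the effective value of each configuration key passed as an argument.
show_conf() {
  local key
  for key in "$@"; do
    if command -v hdfs >/dev/null 2>&1; then
      echo "$key = $(hdfs getconf -confKey "$key")"
    else
      echo "$key = (hdfs not on PATH)"
    fi
  done
}

show_conf fs.defaultFS dfs.nameservices dfs.ha.namenodes.hacluster
```

With the configuration above, the three keys would be expected to resolve to `hdfs://hacluster`, `hacluster` and `nn1,nn2`.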

8.3 Edit yarn-site.xml

vim /usr/local/src/hadoop/etc/hadoop/yarn-site.xml

<configuration>

<property>

<!-- Enable ResourceManager HA -->

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<!-- Enable automatic ResourceManager failover -->

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<!-- Cluster id of the ResourceManager HA cluster -->

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn-cluster</value>

</property>

<property>

<!-- Ids of the ResourceManagers in the HA cluster -->

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<!-- Host that runs the first ResourceManager (rm1) -->

<name>yarn.resourcemanager.hostname.rm1</name>

<value>master</value>

</property>

<property>

<!-- Host that runs the second ResourceManager (rm2) -->

<name>yarn.resourcemanager.hostname.rm2</name>

<value>slave1</value>

</property>

<property>

<!-- ZooKeeper nodes used by ResourceManager HA -->

<name>yarn.resourcemanager.zk-address</name>

<value>master:2181,slave1:2181,slave2:2181</value>

</property>

<property>

<!-- Enable ResourceManager recovery after a restart (default: false) -->

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<!-- Class used for the state store -->

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

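<!-- Auxiliary services run by the NodeManager. spark_shuffle requires the Spark YARN shuffle jar on the NodeManager classpath; drop it if Spark is not installed -->

<name>yarn.nodemanager.aux-services</name>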

<value>mapreduce_shuffle,spark_shuffle</value>

</property>

<property>

<!-- Class that implements the mapreduce_shuffle auxiliary service -->

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.spark_shuffle.class</name>

<value>org.apache.spark.network.yarn.YarnShuffleService</value>

</property>

<property>

<!-- Enable log aggregation -->

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<!-- Maximum time, in seconds, that aggregated logs are kept on HDFS -->

<name>yarn.log-aggregation.retain-seconds</name>

<value>106800</value>

</property>

<property>

<!-- HDFS directory where aggregated logs are stored -->

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/usr/local/src/hadoop/logs</value>

</property>

<property>

<!-- Address the ApplicationMaster uses to request and release resources;

this is the address the ResourceManager exposes to ApplicationMasters -->

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>master:8030</value>

</property>

<property>

<!-- Address NodeManagers use to exchange information with the ResourceManager -->

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>master:8031</value>

</property>

<property>

<!-- Address clients use to submit applications to the ResourceManager -->

<name>yarn.resourcemanager.address.rm1</name>

<value>master:8032</value>

</property>

<property>

<!-- Address administrators use to send admin commands to the ResourceManager -->

<name>yarn.resourcemanager.admin.address.rm1</name>

<value>master:8033</value>

</property>

<property>

<!-- ResourceManager web UI address for viewing cluster information -->

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>master:8088</value>

</property>

<property>

<!-- Same as the rm1 scheduler address, for rm2 -->

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>slave1:8030</value>

</property>

<property>

<!-- Address NodeManagers use to exchange information with the ResourceManager -->

<name>yarn.resourcemanager.resource-tracker.address.rm2</name>

<value>slave1:8031</value>

</property>

<property>

<!-- Address clients use to submit applications to the ResourceManager -->

<name>yarn.resourcemanager.address.rm2</name>

<value>slave1:8032</value>

</property>

<property>

<!-- Address administrators use to send admin commands to the ResourceManager -->

<name>yarn.resourcemanager.admin.address.rm2</name>

<value>slave1:8033</value>

</property>

<property>

<!-- ResourceManager web UI address for viewing cluster information -->

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>slave1:8088</value>

</property>

</configuration>

8.4 Edit mapred-site.xml

vim /usr/local/src/hadoop/etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>slave2:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>slave2:19888</value>

</property>

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>/usr/local/src/hadoop/tmp/mr-history/tmp</value>

</property>

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>/usr/local/src/hadoop/tmp/mr-history/done</value>

</property>

</configuration>

8.5 Edit workers

vim /usr/local/src/hadoop/etc/hadoop/workers

master

slave1

slave2

Note:

Hadoop 3.0 and later use the workers configuration file; versions before 3.0 use the slaves configuration file.

9. Synchronize the nodes

xsync /usr/local/src/hadoop

xsync /usr/local/src/java

xsync /etc/profile

source /etc/profile # run on every node so the new variables take effect

10. Format and start Hadoop

10.1 Start the JournalNodes

hadoop-daemon.sh start journalnode # run on all three nodes

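10.2 Format the NameNode

Run both commands on master only. ZooKeeper must already be running on all three nodes (zkServer.sh start) before the second command.

hdfs namenode -format # format HDFS

hdfs zkfc -formatZK # initialize the HA state in ZooKeeper

Before the standby NameNode on slave1 is started for the first time, it has to copy this metadata from the running NameNode on master with hdfs namenode -bootstrapStandby.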

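10.3 Start and stop the cluster (the previously configured scripts are recommended)

Start with the hd script

hd start

Start all daemons (HDFS and YARN)

start-all.sh # start HDFS and YARN

Start ZKFC (the ZooKeeper failover controller)

hadoop-daemon.sh start zkfc # run on the NameNode hosts, master and slave1

Start the HistoryServer (run on slave2)

mr-jobhistory-daemon.sh start historyserver

Start HDFS and YARN separately

start-dfs.sh # start HDFS

start-yarn.sh # start YARN

Verify that the daemons started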

jps
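`jps` shows which daemons are running, but not which NameNode or ResourceManager is currently active. The sketch below queries the HA state with `hdfs haadmin -getServiceState` and `yarn rmadmin -getServiceState`, using the ids nn1, nn2, rm1 and rm2 from the configuration above. `report_states` is a hypothetical helper name, and it prints `unknown` when a command fails or is not installed.

```shell
#!/usr/bin/env bash
# Report the HA state of both NameNodes and both ResourceManagers.
report_states() {
  local id
  for id in nn1 nn2; do
    echo "NameNode $id: $(hdfs haadmin -getServiceState "$id" 2>/dev/null || echo unknown)"
  done
  for id in rm1 rm2; do
    echo "ResourceManager $id: $(yarn rmadmin -getServiceState "$id" 2>/dev/null || echo unknown)"
  done
}

report_states
```

On a healthy cluster one service of each pair would be expected to report `active` and the other `standby`.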