1. Overview

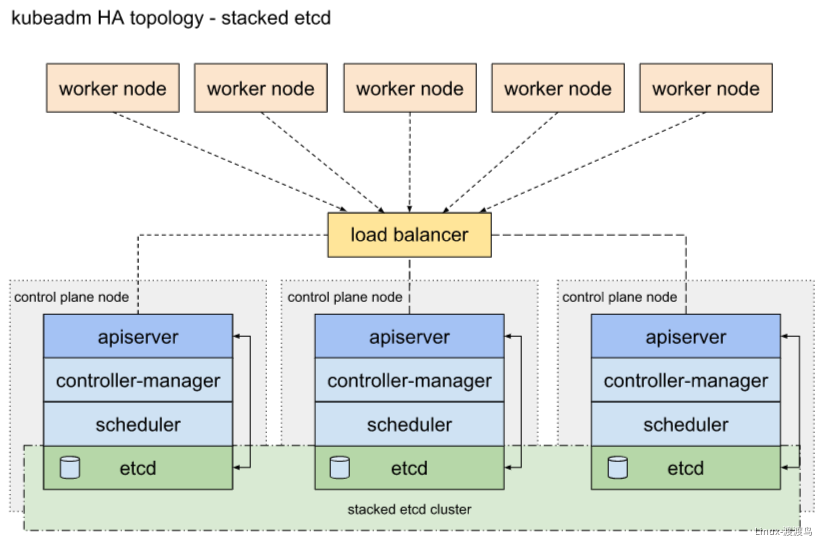

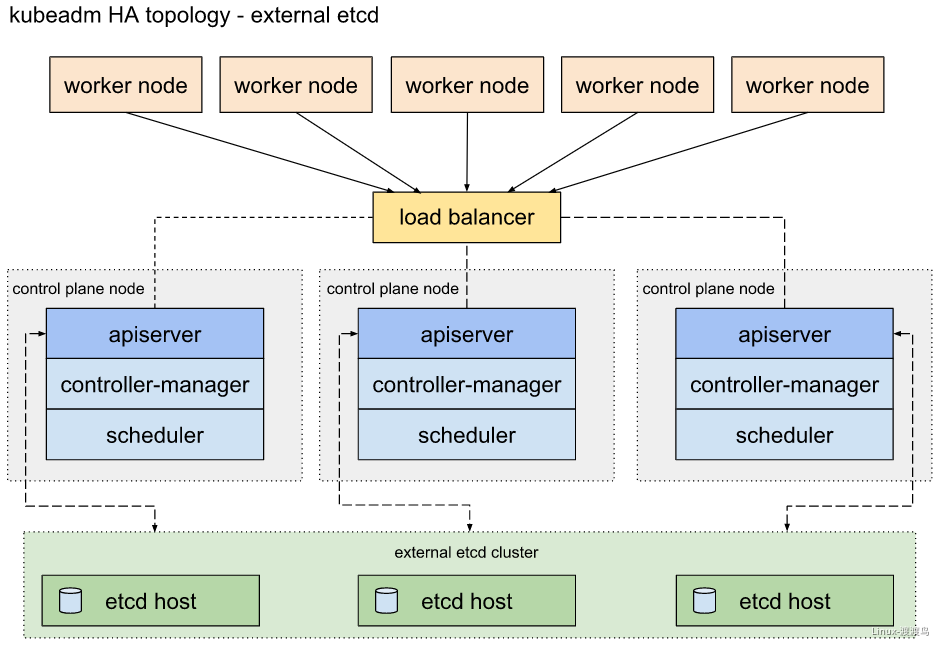

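Kubeadm is a common way to install Kubernetes and can set up highly available clusters suitable for production. Note that the certificates generated by kubeadm (except the CA, which is valid for 10 years) expire after one year and must be renewed periodically. When deploying an HA cluster with kubeadm, etcd can either run on the master nodes (stacked etcd) or as a separate external cluster. The former saves resources and is easier to manage; the latter offers higher availability: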

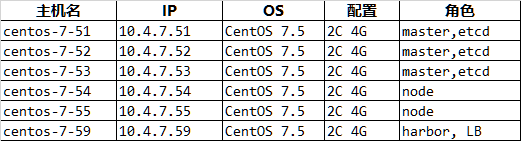

1.1. Node configuration

1.2. Pre-installation checks

The following checks must be completed before installing the cluster, otherwise the installation may fail.

1.2.1. Disable the swap partition

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "swapoff -a"

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "sed -i '/swap/s/^/#/' /etc/fstab"
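The `sed '/swap/s/^/#/'` command above comments out every fstab line that merely contains the word swap, and running it a second time adds another `#`. A slightly stricter variant is sketched below. It assumes the standard fstab layout (filesystem type in the third field) and only touches uncommented lines whose type is `swap`, so it can be rerun safely. The function name `disable_swap_in_fstab` is hypothetical.

```shell
# Hypothetical helper: comment out active swap entries in an fstab file.
# Only uncommented lines whose 3rd field (filesystem type) is "swap" change,
# so running it repeatedly does not stack extra '#' characters.
disable_swap_in_fstab() {
  local fstab=${1:-/etc/fstab}
  local tmp
  tmp=$(mktemp) || return 1
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab" > "$tmp" && cat "$tmp" > "$fstab"   # cat keeps owner/permissions
  rm -f "$tmp"
}

# Usage on each node, after "swapoff -a":
#   disable_swap_in_fstab /etc/fstab
```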

1.2.2. Make sure MAC addresses are unique

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "ifconfig ens32 | grep ether | awk '{print \$2}'"|xargs -n 2

10.4.7.53 00:0c:29:54:d0:72

10.4.7.51 00:0c:29:6b:45:04

10.4.7.52 00:0c:29:cb:13:af

10.4.7.55 00:0c:29:9e:79:38

10.4.7.54 00:0c:29:de:32:40

1.2.3. Make sure product_uuid is unique

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "cat /sys/class/dmi/id/product_uuid"|xargs -n 2

10.4.7.52 F7714D56-DB80-A3B5-90A3-430EC8CB13AF

10.4.7.51 4E4F4D56-B05B-E126-ADF4-957BCF6B4504

10.4.7.55 60714D56-029B-C734-16B1-15939A9E7938

10.4.7.54 A0554D56-4083-5FB4-96F0-009C94DE3240

10.4.7.53 84CC4D56-9BED-62CD-D826-04336B54D072
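Checking five lines by eye is easy, but with more nodes it helps to let a script look for collisions. The hypothetical `find_dups` function below reads the `host value` pairs produced by the `xargs -n 2` commands above and prints any value shared by more than one host; empty output means every MAC address (or product_uuid) is unique.

```shell
# Hypothetical helper: read "host value" pairs on stdin and print each value
# that appears on more than one host, followed by the hosts sharing it.
# No output means all values are unique.
find_dups() {
  awk '{ hosts[$2] = hosts[$2] " " $1; count[$2]++ }
       END { for (v in count) if (count[v] > 1) print v ":" hosts[v] }'
}

# Usage:
#   scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 \
#     "cat /sys/class/dmi/id/product_uuid" | xargs -n 2 | find_dups
```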

1.2.4. Disable SELinux

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "setenforce 0; sed -ri '/^SELINUX=/s/SELINUX=.+/SELINUX=disabled/' /etc/selinux/config"

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "grep '^SELINUX=' /etc/selinux/config" | xargs -n 2

10.4.7.54 SELINUX=disabled

10.4.7.52 SELINUX=disabled

10.4.7.51 SELINUX=disabled

10.4.7.53 SELINUX=disabled

10.4.7.55 SELINUX=disabled

1.2.5. Load the br_netfilter module

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "modprobe br_netfilter"
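`modprobe` only loads the module until the next reboot. One common way to make it persistent on systemd-based systems such as CentOS 7 is a file under `/etc/modules-load.d/`, which `systemd-modules-load` reads at boot. A sketch follows; the file name `k8s.conf` and the function name are assumptions, and the optional argument only exists so the function can be tried against a scratch directory.

```shell
# Hypothetical helper: have systemd-modules-load load br_netfilter at boot.
# ROOT defaults to "/"; pass a scratch directory to try it out safely.
persist_br_netfilter() {
  local root=${1:-/}
  mkdir -p "$root/etc/modules-load.d" &&
    printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
}

# Usage on each node: persist_br_netfilter
```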

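1.2.6. Configure kernel parameters

Some CentOS 7 users have reported traffic bypassing iptables and therefore not being routed to the correct IP address. To avoid this, set the following kernel parameters: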

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "echo -e 'net.bridge.bridge-nf-call-ip6tables = 1\nnet.bridge.bridge-nf-call-iptables = 1' > /etc/sysctl.d/k8s.conf "

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "sysctl --system"
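The same file can be written with a heredoc, which avoids relying on `echo -e` escape handling. The sketch below also adds `net.ipv4.ip_forward = 1`, because kubeadm's preflight checks fail when `/proc/sys/net/ipv4/ip_forward` is not 1. The function name is hypothetical; run `sysctl --system` afterwards as above.

```shell
# Hypothetical helper: write /etc/sysctl.d/k8s.conf (under ROOT, default "/").
# Apply the settings afterwards with "sysctl --system".
write_k8s_sysctl() {
  local root=${1:-/}
  mkdir -p "$root/etc/sysctl.d"
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```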

1.2.7. Install a time synchronization service

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "yum install -y chrony"

[root@duduniao ~]# scan_host.sh push -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 chrony.conf /etc/

10.4.7.54 chrony.conf --> /etc/ Y

10.4.7.52 chrony.conf --> /etc/ Y

10.4.7.53 chrony.conf --> /etc/ Y

10.4.7.55 chrony.conf --> /etc/ Y

10.4.7.51 chrony.conf --> /etc/ Y

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "systemctl start chronyd ; systemctl enable chronyd"

[root@centos-7-51 ~]# vim /etc/chrony.conf # only the time server addresses need to change (see https://help.aliyun.com/document_detail/92704.html)

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server ntp3.aliyun.com iburst

server ntp4.aliyun.com iburst

1.2.8. Enable the IPVS modules

Reference: https://github.com/kubernetes/kubernetes/blob/master/pkg/proxy/ipvs/README.md

Check the kernel version first: on kernel 4.19 and later, load nf_conntrack instead of nf_conntrack_ipv4.
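The version comparison can be scripted so the same command works on both the stock CentOS 7 kernel (3.10) and an upgraded one. A sketch, assuming GNU `sort -V` is available; `conntrack_module` is a hypothetical name:

```shell
# Hypothetical helper: pick the conntrack module for a kernel version.
# nf_conntrack_ipv4 was merged into nf_conntrack in Linux 4.19, so 4.19 and
# later need nf_conntrack. Defaults to the running kernel (uname -r).
conntrack_module() {
  local kver=${1:-$(uname -r)}
  kver=${kver%%-*}                      # 3.10.0-1127.el7.x86_64 -> 3.10.0
  if [ "$(printf '%s\n' 4.19 "$kver" | sort -V | head -n 1)" = "4.19" ]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Usage: modprobe -- "$(conntrack_module)"
```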

# Check whether the IPVS modules are built into the kernel; if not, they have to be loaded manually

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "grep -e ipvs -e nf_conntrack_ipv4 /lib/modules/\$(uname -r)/modules.builtin"

# Load the IPVS modules

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "modprobe -- ip_vs;modprobe -- ip_vs_rr;modprobe -- ip_vs_wrr;modprobe -- ip_vs_sh;modprobe -- nf_conntrack_ipv4"

# Verify that the IPVS modules are loaded

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 -f 1 "cut -f1 -d ' ' /proc/modules | grep -e ip_vs -e nf_conntrack_ipv4"
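To spot a missing module at a glance instead of reading the full module list, the hypothetical helper below compares the modules from the `modprobe` command above against a `/proc/modules`-style file and prints only the absent ones. Modules built into the kernel (see the `modules.builtin` check) never appear in `/proc/modules`, so they would be reported as missing too.

```shell
# Hypothetical helper: print the IPVS-related modules from the modprobe list
# above that are NOT present in a /proc/modules-style file (name in column 1).
missing_ipvs_modules() {
  local modules_file=${1:-/proc/modules}
  local m
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
    cut -d ' ' -f 1 "$modules_file" | grep -qx "$m" || echo "$m"
  done
}

# Usage on each node: missing_ipvs_modules   (no output = all loaded)
```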

# Install ipset and ipvsadm

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 -f 1 "yum install -y ipset ipvsadm"

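# Make sure the IPVS modules are loaded again after a reboot

[root@duduniao ~]# cat containerd.service # modified containerd unit that loads the IPVS modules first; after several tests this proved to be the most reliable approach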

# Copyright 2018-2020 Docker Inc.

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

# http://www.apache.org/licenses/LICENSE-2.0

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStartPre=-/sbin/modprobe ip_vs

ExecStartPre=-/sbin/modprobe ip_vs_rr

ExecStartPre=-/sbin/modprobe ip_vs_wrr

ExecStartPre=-/sbin/modprobe ip_vs_sh

ExecStartPre=-/sbin/modprobe nf_conntrack_ipv4

ExecStart=/usr/bin/containerd

KillMode=process

Delegate=yes

LimitNOFILE=1048576

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

[Install]

WantedBy=multi-user.target

[root@duduniao ~]# scan_host.sh push -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 containerd.service /usr/lib/systemd/system/containerd.service

10.4.7.52 containerd.service --> /usr/lib/systemd/system/containerd.service Y

10.4.7.51 containerd.service --> /usr/lib/systemd/system/containerd.service Y

10.4.7.54 containerd.service --> /usr/lib/systemd/system/containerd.service Y

10.4.7.55 containerd.service --> /usr/lib/systemd/system/containerd.service Y

10.4.7.53 containerd.service --> /usr/lib/systemd/system/containerd.service Y

10.4.7.56 containerd.service --> /usr/lib/systemd/system/containerd.service Y

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "systemctl daemon-reload"
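Overwriting `/usr/lib/systemd/system/containerd.service` works, but that file belongs to the containerd.io package and may be replaced on the next upgrade. An alternative worth considering is a systemd drop-in under `/etc/systemd/system/containerd.service.d/`; `ExecStartPre=` lines in a drop-in are added to those of the packaged unit. The sketch below assumes that layout; the drop-in file name `ipvs-modules.conf` and the function name are hypothetical, and `systemctl daemon-reload` is still required afterwards.

```shell
# Hypothetical helper: load the IPVS modules through a containerd drop-in
# instead of replacing the packaged unit file. ROOT defaults to "/".
write_containerd_dropin() {
  local root=${1:-/}
  local dir="$root/etc/systemd/system/containerd.service.d"
  local m
  mkdir -p "$dir"
  {
    echo "[Service]"
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
      echo "ExecStartPre=-/sbin/modprobe $m"   # leading '-' ignores failures
    done
  } > "$dir/ipvs-modules.conf"
}

# Usage on each node: write_containerd_dropin && systemctl daemon-reload
```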

2. Install the cluster

2.1. Install the load balancer

[root@centos-7-59 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@centos-7-59 ~]# yum install -y nginx

[root@centos-7-59 ~]# vim /etc/nginx/nginx.conf # append to the end of the file

...

stream {

log_format proxy '$time_local|$remote_addr|$upstream_addr|$protocol|$status|'

'$session_time|$upstream_connect_time|$bytes_sent|$bytes_received|'

'$upstream_bytes_sent|$upstream_bytes_received' ;

upstream kube-apiserver {

server 10.4.7.51:6443 ;

server 10.4.7.52:6443 ;

server 10.4.7.53:6443 ;

}

server {

listen 10.4.7.59:6443 backlog=65535 so_keepalive=on;

allow 10.4.7.0/24;

allow 192.168.0.0/16;

allow 172.19.0.0/16;

allow 172.24.0.0/16;

deny all;

proxy_connect_timeout 3s;

proxy_next_upstream on;

proxy_next_upstream_timeout 5;

proxy_next_upstream_tries 1;

proxy_pass kube-apiserver;

access_log /var/log/nginx/kube-apiserver.log proxy;

}

}

[root@centos-7-59 ~]# systemctl start nginx.service ; systemctl enable nginx.service
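If masters are added or replaced later, the upstream block is the only part of this configuration that changes. The hypothetical helper below prints it from a list of master IPs (port 6443 as above); the result can be pasted into `nginx.conf` and checked with `nginx -t` before reloading.

```shell
# Hypothetical helper: print the "upstream kube-apiserver" block for the
# nginx stream configuration from a list of master IPs.
gen_apiserver_upstream() {
  local ip
  echo "upstream kube-apiserver {"
  for ip in "$@"; do
    echo "    server $ip:6443;"
  done
  echo "}"
}

# Usage: gen_apiserver_upstream 10.4.7.51 10.4.7.52 10.4.7.53
```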

2.2. Install docker-ce

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo"

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "yum install -y containerd.io-1.2.13 docker-ce-19.03.11 docker-ce-cli-19.03.11"

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "mkdir /etc/docker"

[root@duduniao ~]# cat daemon.json # "data-root" replaces the deprecated "graph" option

{

"data-root": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com","quay.io"],

"registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-opts": {"max-size":"32M", "max-file":"2"}

}

[root@duduniao ~]# scan_host.sh push -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 daemon.json /etc/docker/

10.4.7.55 daemon.json --> /etc/docker/ Y

10.4.7.51 daemon.json --> /etc/docker/ Y

10.4.7.52 daemon.json --> /etc/docker/ Y

10.4.7.54 daemon.json --> /etc/docker/ Y

10.4.7.53 daemon.json --> /etc/docker/ Y

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "systemctl restart docker"

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "systemctl enable docker"
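After the restart it is worth confirming that Docker really picked up `native.cgroupdriver=systemd`, since the kubelet and the container runtime should use the same cgroup driver. A small sketch; the function name is hypothetical, and without an argument it queries the local daemon with `docker info --format '{{.CgroupDriver}}'`.

```shell
# Hypothetical helper: check that the container runtime uses the systemd
# cgroup driver. Pass a driver name to test the logic without Docker.
check_cgroup_driver() {
  local driver=${1:-$(docker info --format '{{.CgroupDriver}}')}
  if [ "$driver" = "systemd" ]; then
    echo "ok: cgroup driver is systemd"
  else
    echo "mismatch: cgroup driver is '$driver', expected systemd" >&2
    return 1
  fi
}

# Usage on each node: check_cgroup_driver
```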

2.3. Install the Kubernetes cluster

2.3.1. Install kubeadm

[root@duduniao ~]# cat kubeadm.repo # Aliyun yum mirror for the Kubernetes packages

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

[root@duduniao ~]# scan_host.sh push -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 kubeadm.repo /etc/yum.repos.d/

10.4.7.53 kubeadm.repo --> /etc/yum.repos.d/ Y

10.4.7.52 kubeadm.repo --> /etc/yum.repos.d/ Y

10.4.7.54 kubeadm.repo --> /etc/yum.repos.d/ Y

10.4.7.51 kubeadm.repo --> /etc/yum.repos.d/ Y

10.4.7.55 kubeadm.repo --> /etc/yum.repos.d/ Y

[root@centos-7-55 ~]# yum list kubelet --showduplicates | grep 1.17 # Kubernetes 1.17 supports Docker 19.03, as noted in its CHANGELOG on GitHub

kubelet.x86_64 1.17.0-0 kubernetes

kubelet.x86_64 1.17.1-0 kubernetes

kubelet.x86_64 1.17.2-0 kubernetes

kubelet.x86_64 1.17.3-0 kubernetes

kubelet.x86_64 1.17.4-0 kubernetes

kubelet.x86_64 1.17.5-0 kubernetes

kubelet.x86_64 1.17.6-0 kubernetes

kubelet.x86_64 1.17.7-0 kubernetes

kubelet.x86_64 1.17.7-1 kubernetes

kubelet.x86_64 1.17.8-0 kubernetes

kubelet.x86_64 1.17.9-0 kubernetes

kubelet.x86_64 1.17.11-0 kubernetes

kubelet.x86_64 1.17.12-0 kubernetes

kubelet.x86_64 1.17.13-0 kubernetes

kubelet.x86_64 1.17.14-0 kubernetes
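Rather than reading the list by eye, the newest patch release of a minor version can be picked from the same `yum list` output. A sketch assuming GNU `sort -V`; `latest_patch` is a hypothetical name:

```shell
# Hypothetical helper: read "yum list ... --showduplicates" output on stdin
# and print the newest version-release of the given minor (default 1.17).
latest_patch() {
  local minor=${1:-1.17}
  awk -v m="$minor." 'index($2, m) == 1 { print $2 }' | sort -V | tail -n 1
}

# Usage: yum list kubelet --showduplicates | latest_patch 1.17
```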

# Worker nodes do not actually need the kubectl CLI

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "yum install -y kubeadm-1.17.14 kubelet-1.17.14 kubectl-1.17.14"

[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.51 10.4.7.52 10.4.7.53 10.4.7.54 10.4.7.55 "systemctl start kubelet.service; systemctl enable kubelet.service"

2.3.2. Initialize the control plane

Run the initialization on one of the masters:

# Read the output carefully, especially the instructions for adding nodes and for using kubectl

[root@centos-7-51 ~]# kubeadm init --control-plane-endpoint "10.4.7.59:6443" --pod-network-cidr 172.16.0.0/16 --service-cidr 10.96.0.0/16 --image-repository registry.aliyuncs.com/google_containers --upload-certs

I1204 21:49:05.949552 15589 version.go:251] remote version is much newer: v1.19.4; falling back to: stable-1.17

W1204 21:49:08.043867 15589 validation.go:28] Cannot validate kube-proxy config - no validator is available

W1204 21:49:08.043888 15589 validation.go:28] Cannot validate kubelet config - no validator is available

[init] Using Kubernetes version: v1.17.14

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "centos-7-51" could not be reached

[WARNING Hostname]: hostname "centos-7-51": lookup centos-7-51 on 10.4.7.254:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [centos-7-51 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.4.7.51 10.4.7.59]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [centos-7-51 localhost] and IPs [10.4.7.51 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [centos-7-51 localhost] and IPs [10.4.7.51 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W1204 21:49:11.018264 15589 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W1204 21:49:11.019029 15589 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 31.035114 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

aeca2ae59ec761196f824a869b5ac0c4dd1cc24bfd93a2d81671f9d570a0357e

[mark-control-plane] Marking the node centos-7-51 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node centos-7-51 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: j8eck0.u5vu8pxb63eq9bxb

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 10.4.7.59:6443 --token j8eck0.u5vu8pxb63eq9bxb \

--discovery-token-ca-cert-hash sha256:6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8 \

--control-plane --certificate-key aeca2ae59ec761196f824a869b5ac0c4dd1cc24bfd93a2d81671f9d570a0357e

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.4.7.59:6443 --token j8eck0.u5vu8pxb63eq9bxb \

--discovery-token-ca-cert-hash sha256:6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8
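The same settings can also be kept in a kubeadm configuration file instead of command-line flags, which makes the setup easier to review and repeat. The sketch below assumes the `kubeadm.k8s.io/v1beta2` config API used by kubeadm 1.17 and additionally includes a `KubeProxyConfiguration` with `mode: ipvs`, which should make the later kube-proxy change in 2.3.8 unnecessary. The helper name and output path are hypothetical.

```shell
# Hypothetical helper: write a kubeadm config equivalent to the init flags
# above, plus kube-proxy in IPVS mode.
write_kubeadm_config() {
  local out=${1:-kubeadm-config.yaml}
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.17.14
controlPlaneEndpoint: "10.4.7.59:6443"
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 172.16.0.0/16
  serviceSubnet: 10.96.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Usage on centos-7-51:
#   write_kubeadm_config /root/kubeadm-config.yaml
#   kubeadm init --config /root/kubeadm-config.yaml --upload-certs
```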

2.3.3. Join the other control-plane nodes

Add the remaining master nodes to the cluster:

[root@centos-7-52 ~]# kubeadm join 10.4.7.59:6443 --token j8eck0.u5vu8pxb63eq9bxb --discovery-token-ca-cert-hash sha256:6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8 --control-plane --certificate-key aeca2ae59ec761196f824a869b5ac0c4dd1cc24bfd93a2d81671f9d570a0357e

[root@centos-7-53 ~]# kubeadm join 10.4.7.59:6443 --token j8eck0.u5vu8pxb63eq9bxb --discovery-token-ca-cert-hash sha256:6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8 --control-plane --certificate-key aeca2ae59ec761196f824a869b5ac0c4dd1cc24bfd93a2d81671f9d570a0357e

2.3.4. Join the worker nodes

[root@centos-7-54 ~]# kubeadm join 10.4.7.59:6443 --token j8eck0.u5vu8pxb63eq9bxb --discovery-token-ca-cert-hash sha256:6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8

[root@centos-7-55 ~]# kubeadm join 10.4.7.59:6443 --token j8eck0.u5vu8pxb63eq9bxb --discovery-token-ca-cert-hash sha256:6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8
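The bootstrap token printed by `kubeadm init` is only valid for 24 hours by default, and, as the init output notes, the uploaded certificates are deleted after two hours. To add a master later, a new worker join command can be printed with `kubeadm token create --print-join-command` and a new certificate key with `kubeadm init phase upload-certs --upload-certs`. The hypothetical helper below only combines the two into a control-plane join command; it assumes the key is the last line of the upload-certs output, as in the init output above.

```shell
# Hypothetical helper: turn a worker join command into a control-plane join
# command by appending --control-plane and a certificate key.
to_control_plane_join() {
  local join_cmd=$1 cert_key=$2
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# Usage on centos-7-51 once the original token/key have expired:
#   join=$(kubeadm token create --print-join-command)
#   key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   to_control_plane_join "$join" "$key"
```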

2.3.5. Configure the kubeconfig file

On the master node, follow the instructions printed by kubeadm init:

[root@centos-7-51 ~]# mkdir -p $HOME/.kube

[root@centos-7-51 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@centos-7-51 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

2.3.6. Fix the unhealthy component status

[root@centos-7-51 ~]# kubectl get cs # controller-manager and scheduler are reported as unhealthy

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

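The workaround below was found online; I have not investigated it in depth, and there is probably a more elegant solution. Apart from the kubelet, the control-plane components run as static Pods defined in /etc/kubernetes/manifests, and the kubelet applies changes to those files automatically. Remove the --port=0 argument from the container command in kube-scheduler.yaml and kube-controller-manager.yaml on each master. --port=0 disables the insecure HTTP port (10251 for the scheduler, 10252 for the controller-manager), which is exactly what `kubectl get cs` probes, so the components are reported as unhealthy; with it, any insecure bind-address setting also has no effect.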

[root@centos-7-51 ~]# vim /etc/kubernetes/manifests/kube-scheduler.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-scheduler
    tier: control-plane
  name: kube-scheduler
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-scheduler
    - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
    - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
    - --bind-address=127.0.0.1
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
    # - --port=0
    image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.14
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
...

[root@centos-7-51 ~]# kubectl get cs # after editing kube-scheduler.yaml and kube-controller-manager.yaml

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}
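The manual edit can be scripted for both manifests. The sketch below (hypothetical function name) builds the edited copy in a temporary file under `/tmp` and copies it back, so no extra file is created in `/etc/kubernetes/manifests`; the kubelet may try to load every file in that directory, so backups are best kept elsewhere too. It needs to run on every master, since each one has its own manifests.

```shell
# Hypothetical helper: comment out "- --port=0" in the given static Pod
# manifests, editing through a temp file outside the manifests directory.
comment_out_port0() {
  local f tmp
  for f in "$@"; do
    tmp=$(mktemp) || return 1
    sed -E 's/^([[:space:]]*)- --port=0[[:space:]]*$/\1# - --port=0/' "$f" > "$tmp" &&
      cat "$tmp" > "$f"
    rm -f "$tmp"
  done
}

# Usage on every master:
#   comment_out_port0 /etc/kubernetes/manifests/kube-scheduler.yaml \
#                     /etc/kubernetes/manifests/kube-controller-manager.yaml
```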

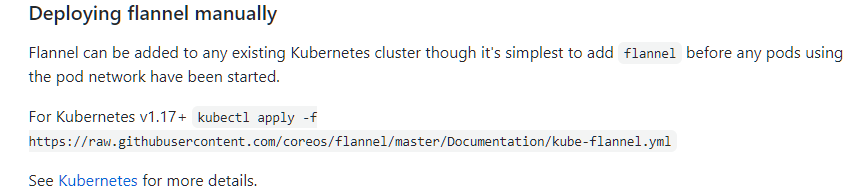

2.3.7. Install a CNI plugin

For convenience, Flannel is installed as the CNI plugin here. GitHub: https://github.com/coreos/flannel

Installation guide: https://github.com/coreos/flannel/blob/master/Documentation/kubernetes.md

# All nodes are NotReady and CoreDNS is Pending because no CNI plugin is installed yet

[root@centos-7-51 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

centos-7-51 NotReady master 7m22s v1.17.14

centos-7-52 NotReady master 5m16s v1.17.14

centos-7-53 NotReady master 4m26s v1.17.14

centos-7-54 NotReady <none> 3m v1.17.14

centos-7-55 NotReady <none> 2m57s v1.17.14

[root@centos-7-51 ~]# kubectl get pod -A | grep Pending

kube-system coredns-9d85f5447-6wh25 0/1 Pending 0 7m49s

kube-system coredns-9d85f5447-klsxf 0/1 Pending 0 7m49s

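# The upstream flannel YAML was modified in two places:

# 1. the image address (switched to a mirror)

# 2. the pod CIDR (must match --pod-network-cidr, 172.16.0.0/16)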

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "172.16.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        # image: quay.io/coreos/flannel:v0.13.1-rc1
        image: quay.mirrors.ustc.edu.cn/coreos/flannel:v0.13.1-rc1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        # image: quay.io/coreos/flannel:v0.13.1-rc1
        image: quay.mirrors.ustc.edu.cn/coreos/flannel:v0.13.1-rc1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

[root@centos-7-51 ~]# kubectl apply -f /tmp/flannel.yaml # flannel.yaml contains the manifest above

[root@centos-7-51 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

centos-7-51 Ready master 86m v1.17.14

centos-7-52 Ready master 84m v1.17.14

centos-7-53 Ready master 83m v1.17.14

centos-7-54 Ready <none> 82m v1.17.14

centos-7-55 Ready <none> 82m v1.17.14

[root@centos-7-51 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[root@centos-7-51 ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-9d85f5447-6wh25 1/1 Running 1 86m

kube-system coredns-9d85f5447-klsxf 1/1 Running 0 86m

kube-system etcd-centos-7-51 1/1 Running 0 86m

kube-system etcd-centos-7-52 1/1 Running 0 84m

kube-system etcd-centos-7-53 1/1 Running 0 83m

kube-system kube-apiserver-centos-7-51 1/1 Running 0 86m

kube-system kube-apiserver-centos-7-52 1/1 Running 0 84m

kube-system kube-apiserver-centos-7-53 1/1 Running 1 82m

kube-system kube-controller-manager-centos-7-51 1/1 Running 0 81m

kube-system kube-controller-manager-centos-7-52 1/1 Running 0 81m

kube-system kube-controller-manager-centos-7-53 1/1 Running 0 80m

kube-system kube-flannel-ds-c2bs6 1/1 Running 0 10m

kube-system kube-flannel-ds-t6j77 1/1 Running 0 10m

kube-system kube-flannel-ds-w526w 1/1 Running 0 10m

kube-system kube-flannel-ds-wp546 1/1 Running 0 10m

kube-system kube-flannel-ds-xc5cb 1/1 Running 0 10m

kube-system kube-proxy-56ll2 1/1 Running 1 82m

kube-system kube-proxy-5rl4c 1/1 Running 0 84m

kube-system kube-proxy-62rb9 1/1 Running 0 86m

kube-system kube-proxy-bjzlv 1/1 Running 0 83m

kube-system kube-proxy-llrjg 1/1 Running 0 82m

kube-system kube-scheduler-centos-7-51 1/1 Running 0 81m

kube-system kube-scheduler-centos-7-52 1/1 Running 0 81m

kube-system kube-scheduler-centos-7-53 1/1 Running 0 80m
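The two edits made to the upstream flannel manifest (image mirror and pod CIDR) can also be applied with `sed` instead of by hand. The sketch below assumes the upstream file uses `quay.io/` image references and a `"Network"` value in `net-conf.json` (10.244.0.0/16 upstream); the function name is hypothetical.

```shell
# Hypothetical helper: point quay.io images at a mirror and set the pod
# network in net-conf.json to the CIDR passed to kubeadm init.
patch_flannel_yaml() {
  local file=$1 mirror=${2:-quay.mirrors.ustc.edu.cn} cidr=${3:-172.16.0.0/16}
  sed -E -i \
    -e "s#image: quay\.io/#image: $mirror/#" \
    -e "s#\"Network\": \"[0-9./]+\"#\"Network\": \"$cidr\"#" \
    "$file"
}

# Usage: patch_flannel_yaml /tmp/flannel.yaml && kubectl apply -f /tmp/flannel.yaml
```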

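2.3.8. Switch kube-proxy to IPVS

By default kube-proxy runs in iptables mode. To switch to IPVS, edit its ConfigMap and delete the kube-proxy Pods so that they are recreated with the new configuration.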

[root@centos-7-51 ~]# kubectl get cm -n kube-system kube-proxy -o yaml

apiVersion: v1
data:
  config.conf: |-
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    ......
    mode: "ipvs" # change this to ipvs

There are two ways to check which mode is in use: run ipvsadm -L to see whether IPVS forwarding rules exist, or check the kube-proxy logs:

[root@centos-7-51 ~]# kubectl logs -f -n kube-system kube-proxy-49s6m

I1205 12:14:08.430165 1 node.go:135] Successfully retrieved node IP: 10.4.7.55

I1205 12:14:08.430391 1 server_others.go:172] Using ipvs Proxier.

W1205 12:14:08.431429 1 proxier.go:420] IPVS scheduler not specified, use rr by default
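Editing the ConfigMap and restarting the Pods can also be done non-interactively. The hypothetical `set_proxy_mode` helper below rewrites the `mode:` line of a dumped ConfigMap; kubeadm writes `mode: ""` by default, which kube-proxy treats as iptables. The commented usage assumes kubeadm's default `k8s-app=kube-proxy` Pod label; kube-proxy's metrics address (127.0.0.1:10249 by default) also reports the active mode at `/proxyMode`.

```shell
# Hypothetical helper: set the quoted "mode:" value in a kube-proxy
# ConfigMap dump (default: ipvs). Other lines are left untouched.
set_proxy_mode() {
  local file=$1 mode=${2:-ipvs}
  sed -E -i "s/^([[:space:]]*mode:[[:space:]]*)\"[^\"]*\"/\1\"$mode\"/" "$file"
}

# Usage on a master:
#   kubectl -n kube-system get cm kube-proxy -o yaml > /tmp/kube-proxy-cm.yaml
#   set_proxy_mode /tmp/kube-proxy-cm.yaml ipvs
#   kubectl replace -f /tmp/kube-proxy-cm.yaml
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy
#   curl -s 127.0.0.1:10249/proxyMode    # on a node; should print ipvs
```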

2.4. Install add-ons

At this point the cluster is up, but it still lacks many add-ons required for production use. The following sections add them one by one.

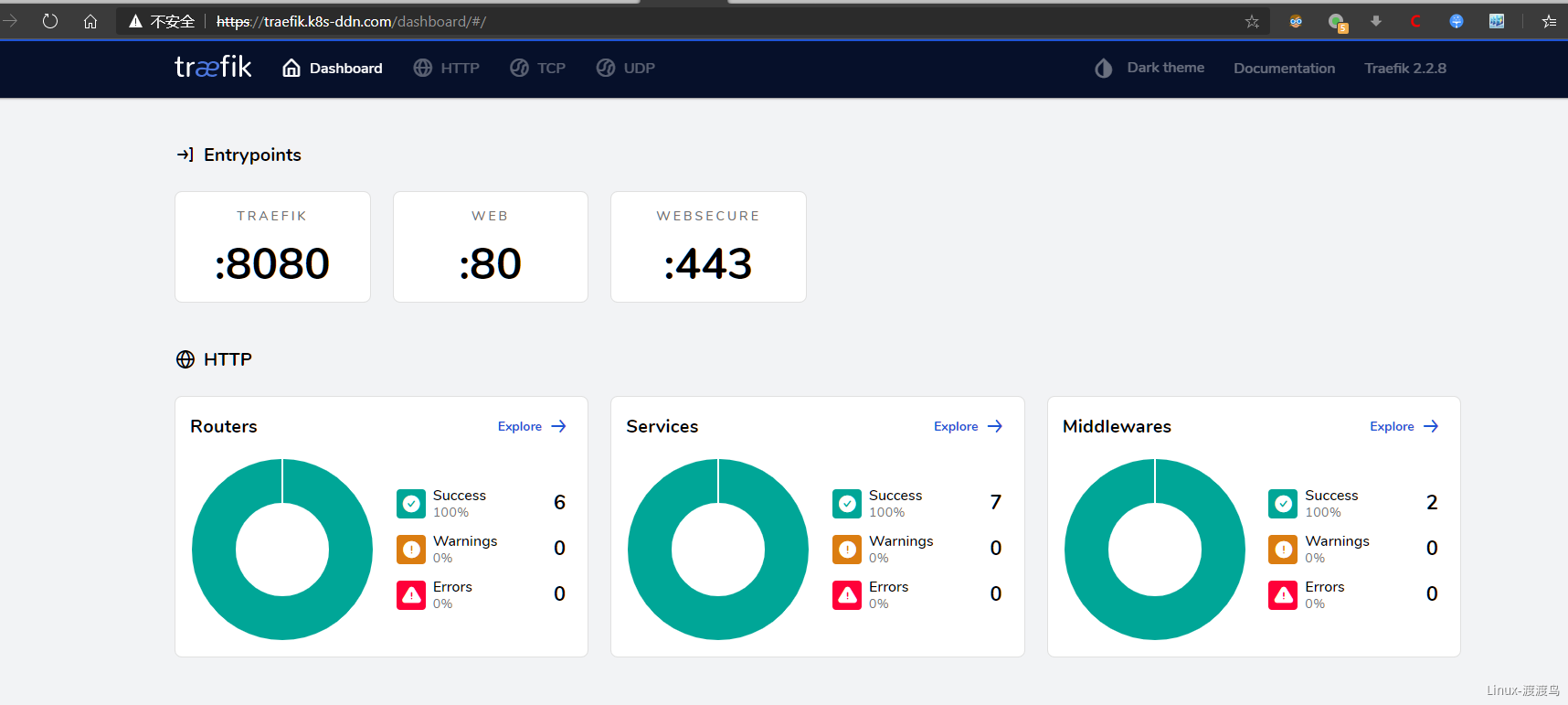

2.4.1. Install Traefik

Traefik 2.2 is used as the ingress controller here. Traefik 2.x supports defining routes through CRDs, so the CRD resources have to be created as well:

---
## IngressRoute
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ingressroutes.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: IngressRoute
    plural: ingressroutes
    singular: ingressroute
---
## IngressRouteTCP
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ingressroutetcps.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: IngressRouteTCP
    plural: ingressroutetcps
    singular: ingressroutetcp
---
## Middleware
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: middlewares.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: Middleware
    plural: middlewares
    singular: middleware
---
## TLSOption
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: tlsoptions.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: TLSOption
    plural: tlsoptions
    singular: tlsoption
---
## TraefikService
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: traefikservices.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: TraefikService
    plural: traefikservices
    singular: traefikservice
---
## TLSStore
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: tlsstores.traefik.containo.us
spec:
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: TLSStore
    plural: tlsstores
    singular: tlsstore
  scope: Namespaced
---
## IngressRouteUDP
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ingressrouteudps.traefik.containo.us
spec:
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: IngressRouteUDP
    plural: ingressrouteudps
    singular: ingressrouteudp
  scope: Namespaced
---
## ServiceAccount
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: kube-system
  name: traefik-ingress-controller
---
## ClusterRole
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups: [""]
    resources: ["services","endpoints","secrets"]
    verbs: ["get","list","watch"]
  - apiGroups: ["extensions"]
    resources: ["ingresses"]
    verbs: ["get","list","watch"]
  - apiGroups: ["extensions"]
    resources: ["ingresses/status"]
    verbs: ["update"]
  - apiGroups: ["traefik.containo.us"]
    resources: ["middlewares","ingressroutes","ingressroutetcps","tlsoptions","ingressrouteudps","traefikservices","tlsstores"]
    verbs: ["get","list","watch"]
---
## ClusterRoleBinding
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
  - kind: ServiceAccount
    name: traefik-ingress-controller
    namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: traefik-config
  namespace: kube-system
data:
  traefik.yaml: |-
    ping: ""                    ## Enable ping
    serversTransport:
      insecureSkipVerify: true  ## Do not verify the TLS certificates of proxied services
    api:
      insecure: true            ## Allow access to the API over HTTP
      dashboard: true           ## Enable the dashboard
      debug: false              ## Debug mode (set to true to enable)
    metrics:
      prometheus: ""            ## Expose Prometheus metrics with the default settings
    entryPoints:
      web:
        address: ":80"          ## Listen on port 80, entry point name "web"
      websecure:
        address: ":443"         ## Listen on port 443, entry point name "websecure"
    providers:
      kubernetesCRD: ""         ## Configure routing through Kubernetes CRDs
      kubernetesIngress: ""     ## Configure routing through Kubernetes Ingress resources
    log:
      filePath: ""              ## Log file path; empty means log to the console
      level: error              ## Log level
      format: json              ## Log format
    accessLog:
      filePath: ""              ## Access log file path; empty means log to the console
      format: json              ## Access log format
      bufferingSize: 0          ## Number of access log lines to buffer
      filters:
        #statusCodes: ["200"]   ## Only keep access logs with status codes in the given range
        retryAttempts: true     ## Keep access logs when the proxy had to retry
        minDuration: 20         ## Keep access logs of requests that took longer than this duration
      fields:                   ## Whether access log fields are kept (keep) or dropped (drop)
        defaultMode: keep       ## Keep access log fields by default
        names:                  ## Per-field overrides
          ClientUsername: drop
        headers:                ## Whether header fields are kept
          defaultMode: keep     ## Keep header fields by default
          names:                ## Per-header overrides
            User-Agent: redact
            Authorization: drop
            Content-Type: keep
    #tracing:                     ## Distributed tracing; supports zipkin, datadog, jaeger, instana, haystack, etc.
    #  serviceName:               ## Service name shown in the tracing backend
    #  zipkin:                    ## Zipkin settings
    #    sameSpan: true           ## Use Zipkin SameSpan RPC-style traces
    #    id128Bit: true           ## Use 128-bit Zipkin trace IDs
    #    sampleRate: 0.1          ## Trace sampling rate (a value between 0.0 and 1.0)
    #    httpEndpoint: http://localhost:9411/api/v2/spans  ## Zipkin server endpoint
---
apiVersion: v1
kind: Service
metadata:
  name: traefik
  namespace: kube-system
spec:
  ports:
    - name: web
      port: 80
    - name: websecure
      port: 443
    - name: admin
      port: 8080
  selector:
    app: traefik
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
  labels:
    app: traefik
spec:
  selector:
    matchLabels:
      app: traefik
  template:
    metadata:
      name: traefik
      labels:
        app: traefik
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 1
      containers:
        - image: traefik:v2.2.8
          name: traefik-ingress-lb
          ports:
            - name: web
              containerPort: 80
              hostPort: 80           ## Bind the container port to port 80 on the host
            - name: websecure
              containerPort: 443
              hostPort: 443          ## Bind the container port to port 443 on the host
            - name: admin
              containerPort: 8080    ## Traefik dashboard port
          resources:
            limits:
              cpu: 2000m
              memory: 1024Mi
            requests:
              cpu: 1000m
              memory: 1024Mi
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
          args:
            - --configfile=/config/traefik.yaml
          volumeMounts:
            - mountPath: "/config"
              name: "config"
          readinessProbe:
            httpGet:
              path: /ping
              port: 8080
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          livenessProbe:
            httpGet:
              path: /ping
              port: 8080
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
      volumes:
        - name: config
          configMap:
            name: traefik-config
      # tolerations:              ## Tolerate all taints so Traefik also runs on tainted nodes
      #   - operator: "Exists"
      # nodeSelector:             ## Only run on nodes carrying a specific label
      #   IngressProxy: "true"
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-dashboard-ingress
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
    traefik.ingress.kubernetes.io/router.entrypoints: web
spec:
  rules:
  - host: traefik.k8s-ddn.com
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik
          servicePort: 8080

[root@centos-7-51 ~]# kubectl apply -f /tmp/traefik.yaml # apply the Traefik manifest

# In most scenarios, terminating HTTPS inside the k8s cluster is not recommended. It shifts SSL offloading onto the cluster and may require cert-manager to manage certificates, which adds overhead.
# The usual approach is to terminate TLS on an SLB outside the cluster and have the SLB talk plain HTTP to the ingress.
# This setup uses a wildcard certificate. A tutorial for creating one: https://www.yuque.com/duduniao/nginx/smgh7e#T7Ys0

[root@centos-7-59 ~]# cat /etc/nginx/conf.d/k8s-app.conf
server {
    server_name *.k8s-ddn.com;
    listen 80;
    rewrite ^(.*)$ https://${host}$1 permanent;
}

server {
    listen 443 ssl;
    server_name *.k8s-ddn.com;
    ssl_certificate "certs/k8s-app.crt";
    ssl_certificate_key "certs/k8s-app.key";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout 10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://k8s_http_pool;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
        access_log /var/log/nginx/k8s.log proxy;
    }
}

upstream k8s_http_pool {
    server 10.4.7.55:80 max_fails=3 fail_timeout=10s;
    server 10.4.7.54:80 max_fails=3 fail_timeout=10s;
}

# HTTPS upstream, not used for now
upstream k8s_https_pool {
    server 10.4.7.55:443 max_fails=3 fail_timeout=10s;
    server 10.4.7.54:443 max_fails=3 fail_timeout=10s;
}

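2.4.2. Installing nfs-client-provisioner

Kubernetes supports dynamic provisioning, which creates PVs on demand for PVCs. NFS has no built-in dynamic provisioner, even though it is one of the most widely used kinds of shared storage. NFS-Client Provisioner fills that gap by letting an NFS server serve dynamically provisioned PVs. Project home: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner. The provisioner creates one directory per volume, named ${namespace}-${pvcName}-${pvName}.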
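After a PVC is bound, you can derive its expected directory on the NFS server from the naming rule above and confirm that the directory exists. The following is a minimal sketch that assumes the export root is /data/nfs, as in this lab. The helpers `nfs_pv_dir` and `check_nfs_pv_dir` are hypothetical names and are not part of the provisioner:

```bash
#!/bin/sh
# Hypothetical helpers (not part of nfs-client-provisioner): derive and check
# the directory the provisioner creates for a bound PVC, following the
# ${namespace}-${pvcName}-${pvName} naming rule.

# Print <export_root>/<namespace>-<pvc>-<pv>; a trailing slash on the root is dropped.
nfs_pv_dir() {
  local export_root
  export_root=${1%/}
  printf '%s/%s-%s-%s\n' "$export_root" "$2" "$3" "$4"
}

# Run on the NFS server (centos-7-59 in this lab): succeed only if the directory exists.
check_nfs_pv_dir() {
  local dir
  dir=$(nfs_pv_dir "$@")
  if [ -d "$dir" ]; then
    echo "found: $dir"
  else
    echo "missing: $dir" >&2
    return 1
  fi
}
```

To check the test-claim PVC created later in this section, first read the PV name on a master with `kubectl get pvc test-claim -o jsonpath='{.spec.volumeName}'`. Then run `check_nfs_pv_dir /data/nfs default test-claim <pv-name>` on the NFS server.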

# Set up the NFS server: install it on centos-7-59 and export the /data/nfs directory
[root@centos-7-59 ~]# yum install -y nfs-utils
[root@centos-7-59 ~]# echo '/data/nfs *(rw,sync,no_wdelay,all_squash)' > /etc/exports
[root@centos-7-59 ~]# systemctl start nfs && systemctl enable nfs
[root@centos-7-59 ~]# showmount -e
Export list for centos-7-59:
/data/nfs *

---
apiVersion: v1
kind: Namespace
metadata:
  name: storage
spec:
  finalizers:
    - kubernetes
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: storage
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: storage
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: storage
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: storage
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: storage
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    # make this the default StorageClass
    storageclass.kubernetes.io/is-default-class: "true"
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name; must match the deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: storage
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.mirrors.ustc.edu.cn/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            # NFS server details
            - name: NFS_SERVER
              value: 10.4.7.59
            - name: NFS_PATH
              value: /data/nfs
      volumes:
        - name: nfs-client-root
          nfs:
            # NFS server details
            server: 10.4.7.59
            path: /data/nfs

# Install nfs-utils before deploying nfs-client-provisioner. Only the worker nodes in this cluster run workloads, so nfs-utils goes on the two nodes only.
[root@duduniao ~]# scan_host.sh cmd -h 10.4.7.54 10.4.7.55 "yum install -y nfs-utils"
[root@centos-7-51 ~]# kubectl apply -f /tmp/nfs-client.yaml
[root@centos-7-51 ~]# kubectl get pod -n storage
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-5bdc64dd59-zf22c   1/1     Running   0          5m31s

# Create a PVC and verify it with a test pod (/tmp/test-pvc.yaml)
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
---
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
    - name: test-pod
      image: busybox:latest
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-claim

[root@centos-7-51 ~]# kubectl apply -f /tmp/test-pvc.yaml
[root@centos-7-51 ~]# kubectl get pvc
NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
test-claim   Bound    pvc-4ca7e0e6-c477-4420-84b0-c63b296cdbb9   1Mi        RWX            managed-nfs-storage   102s

# Note the directory name on the NFS server: ${namespace}-${pvcName}-${pvName}
[root@centos-7-59 ~]# ls /data/nfs/default-test-claim-pvc-4ca7e0e6-c477-4420-84b0-c63b296cdbb9/SUCCESS
/data/nfs/default-test-claim-pvc-4ca7e0e6-c477-4420-84b0-c63b296cdbb9/SUCCESS

2.4.3. metrics-server

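metrics-server supplies the metrics that HPA uses for autoscaling and the resource usage that `kubectl top` reports. The project states explicitly that these metrics should not be used as a data source for monitoring. Project home: https://github.com/kubernetes-sigs/metrics-server. In most cases a Deployment with a single replica is enough. One replica supports up to 5000 nodes and uses about 3m CPU and 3M memory per node.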
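The per-node figures above give a rough way to size the metrics-server requests for a given cluster. The following back-of-the-envelope sketch multiplies the figures quoted in this article (3m CPU and 3M memory per node, with memory expressed in Mi). Check the metrics-server README for the numbers that apply to your version. `estimate_metrics_server` is a hypothetical helper:

```bash
#!/bin/sh
# Hypothetical helper: rough resource estimate for a single metrics-server
# replica, multiplying the per-node figures quoted in the text
# (about 3m CPU and 3M memory per node; memory is expressed in Mi here).
# Override CPU_PER_NODE_M / MEM_PER_NODE_MI if your version differs.
estimate_metrics_server() {
  local nodes cpu mem
  nodes=$1
  cpu=${CPU_PER_NODE_M:-3}
  mem=${MEM_PER_NODE_MI:-3}
  # Accept only a plain positive integer node count.
  case "$nodes" in
    ''|*[!0-9]*) echo "usage: estimate_metrics_server <node-count>" >&2; return 2 ;;
  esac
  if [ "$nodes" -eq 0 ]; then
    echo "node count must be positive" >&2
    return 2
  fi
  if [ "$nodes" -gt 5000 ]; then
    echo "warning: more than 5000 nodes exceeds the single-replica limit quoted above" >&2
  fi
  echo "cpu=$((nodes * cpu))m memory=$((nodes * mem))Mi"
}
```

For the five nodes in this lab, `estimate_metrics_server 5` prints `cpu=15m memory=15Mi`.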

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
  - apiGroups:
      - metrics.k8s.io
    resources:
      - pods
      - nodes
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
  - apiGroups:
      - ""
    resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      - configmaps
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
  - kind: ServiceAccount
    name: metrics-server
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
  - kind: ServiceAccount
    name: metrics-server
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
  - kind: ServiceAccount
    name: metrics-server
    namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
        - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            - --kubelet-use-node-status-port
            # Without --kubelet-insecure-tls, metrics-server fails with: unable to fully scrape metrics from node centos-7-54: unable to fetch metrics from node centos-7-54: Get "https://10.4.7.54:10250/stats/summary?only_cpu_and_memory=true" xxx
            - --kubelet-insecure-tls
          # The image referenced on GitHub cannot be pulled from mainland China without a proxy, and this version was not found on the Aliyun mirror, so the Docker Hub image is used instead
          image: bitnami/metrics-server:0.4.1
          imagePullPolicy: IfNotPresent
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /livez
              port: https
              scheme: HTTPS
            periodSeconds: 10
          name: metrics-server
          ports:
            - containerPort: 4443
              name: https
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /readyz
              port: https
              scheme: HTTPS
            periodSeconds: 10
          securityContext:
            readOnlyRootFilesystem: true
            runAsNonRoot: true
            runAsUser: 1000
          volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
        - emptyDir: {}
          name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

[root@centos-7-51 ~]# kubectl apply -f /tmp/kube-metrics-server.yaml
[root@centos-7-51 ~]# kubectl top node
NAME          CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
centos-7-51   260m         13%    879Mi           23%
centos-7-52   136m         6%     697Mi           18%
centos-7-53   125m         6%     702Mi           19%
centos-7-54   58m          2%     532Mi           14%
centos-7-55   42m          2%     475Mi           12%

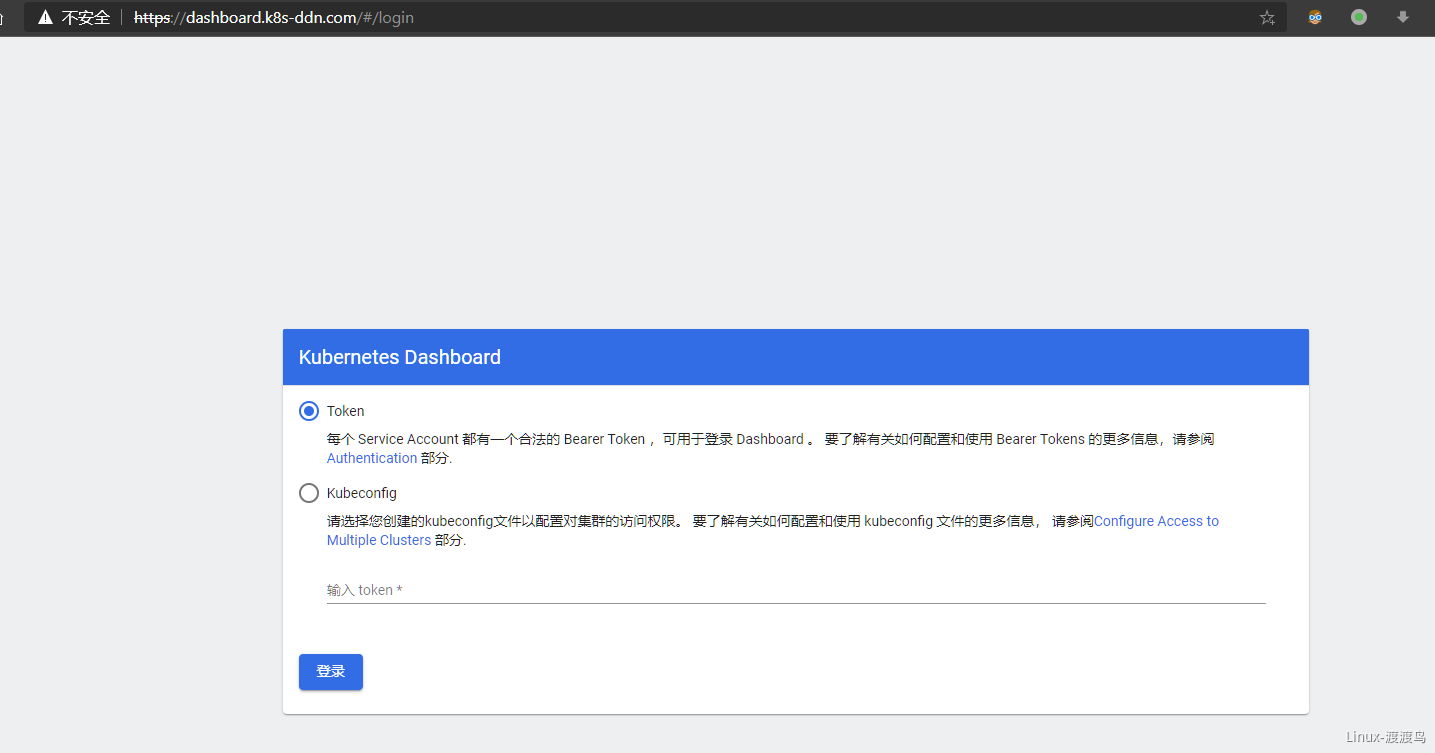

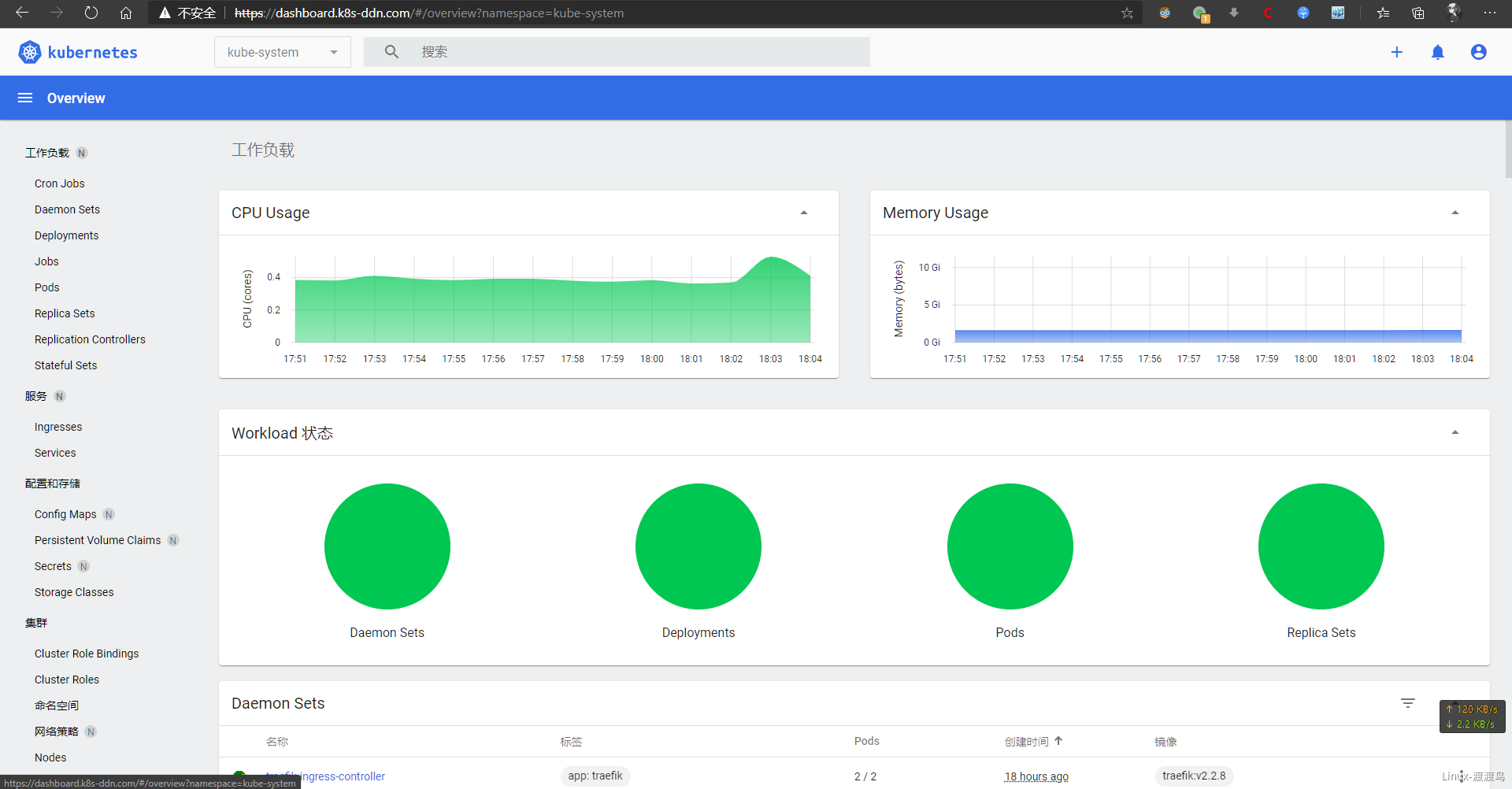

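2.4.4. Installing the dashboard

The default dashboard YAML serves HTTPS. In this lab the front-end SLB talks to the in-cluster ingress over plain HTTP, so the YAML has to be modified. Without that change, requests fail because the client speaks HTTP to an HTTPS endpoint. Dashboard GitHub home: https://github.com/kubernetes/dashboard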

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.5
          imagePullPolicy: Always
          ports:
            # - containerPort: 8443
            #   protocol: TCP
            - containerPort: 8080
              protocol: TCP
          args:
            - --insecure-port=8080
            - --enable-insecure-login=true
            # - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            # - name: kubernetes-dashboard-certs
            #   mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8080
              # scheme: HTTPS
              # path: /
              # port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        # - name: kubernetes-dashboard-certs
        #   secret:
        #     secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 80
      targetPort: 8080
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  rules:
    - host: dashboard.k8s-ddn.com
      http:
        paths:
          - backend:
              serviceName: kubernetes-dashboard
              servicePort: 80
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.6
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

[root@centos-7-51 ~]# kubectl apply -f /tmp/dashboard.yaml

# admin.yaml: a cluster-admin service account for logging in to the dashboard
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard-admin
    namespace: kube-system

# Log in to the dashboard with the admin token to test it
[root@centos-7-51 ~]# kubectl apply -f /tmp/admin.yaml
[root@centos-7-51 ~]# kubectl get secret -n kube-system | grep kubernetes-dashboard-admin
kubernetes-dashboard-admin-token-fnp6v kubernetes.io/service-account-token 3 69s
[root@centos-7-51 ~]# kubectl describe secret -n kube-system kubernetes-dashboard-admin-token-fnp6v | grep token
Name: kubernetes-dashboard-admin-token-fnp6v
Type: kubernetes.io/service-account-token
token: eyJhbGciOiJSUzI1NiIsImtpZCI6InlsYXNzWkZ4OU5kMXhqcGgxZkIzdkJqbjBid05oVHp1bjF4TWRKRURkM2cifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi1mbnA2diIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImU3YmExY2I1LTM5MWQtNDhkMy1iNzBmLTg1MTIwODEzM2YxYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.SW7ztjjepblrPla2q6pGa0WOytF6BVKHMigakUZLKinWlntX0uOD12Q76CBMjV6_7TC2ho2FJSV3GyfDkyHZujrGUApNHsxxcjUxqFOcrfuq2yoOyrF-x_REeAxKoNlDiW0s-zQYL7w97OF2BV83ecmd-fZ1kcaAgT3n-mmgDwYTBJtnPKLbo59MoFL1DcXDsUilRPKb4-FnlCju72qznbsudKKo4NOKqIrnl6uTNEdyuDvX2c_pIXZ0AsGnVOeu6Qv9I-e3INHPulx1zZ0oz4a_zk1wRFLCM0ycKzYK_PTkjUXc9OsDYWL4iFEavhYNUWZbXnUihg3tRtfrTGvBIA
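The service-account token above is a JWT, so you can decode its middle segment locally. This confirms which service account the token belongs to before you paste it into the dashboard login page. The following is a minimal sketch that uses only `cut`, `tr` and `base64`. `jwt_payload` is a hypothetical helper, and it only decodes the claims without verifying the signature:

```bash
#!/bin/sh
# Hypothetical helper: print the decoded payload (claims) of a JWT.
# It only decodes; it does NOT verify the signature.
jwt_payload() {
  local payload
  # The payload is the second dot-separated segment, base64url-encoded:
  # map the URL-safe alphabet ('-', '_') back to standard base64 ('+', '/').
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it so base64 -d accepts the input.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}
```

For the admin token, the decoded claims should include a `sub` of the form `system:serviceaccount:kube-system:kubernetes-dashboard-admin`. As an alternative to `describe | grep`, you can print the raw token with `kubectl -n kube-system get secret kubernetes-dashboard-admin-token-fnp6v -o jsonpath='{.data.token}' | base64 -d`.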

2.4.5. stakater Reloader

2.4.6. node local dns cache

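3. Cluster management

3.1. Adding nodes

The cluster already has three masters, which under normal conditions can handle the workloads of several hundred nodes. This section therefore only demonstrates adding a worker node.

3.1.1. Preparation

- Complete the 8 checks in section 1.2
- Install docker-ce
- Install kubelet and kubeadm
- Install nfs-utils

3.1.2. Joining the node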

# kubeadm bootstrap tokens expire (the default TTL is 24h); manage them with kubeadm token create|delete|list
[root@centos-7-51 ~]# kubeadm token list
TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS
j8eck0.u5vu8pxb63eq9bxb 1h 2020-12-05T21:49:44+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

# Compute the SHA-256 hash of the CA certificate's public key
[root@centos-7-51 ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | awk '{print $2}'
6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8
[root@centos-7-56 ~]# kubeadm join 10.4.7.59:6443 --token j8eck0.u5vu8pxb63eq9bxb --discovery-token-ca-cert-hash sha256:6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8

[root@centos-7-51 ~]# kubectl get node
NAME          STATUS   ROLES    AGE     VERSION
centos-7-51   Ready    master   22h     v1.17.14
centos-7-52   Ready    master   22h     v1.17.14
centos-7-53   Ready    master   22h     v1.17.14
centos-7-54   Ready    <none>   22h     v1.17.14
centos-7-55   Ready    <none>   22h     v1.17.14
centos-7-56   Ready    <none>   4m38s   v1.17.14
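The join command above combines three pieces: the API server endpoint, a bootstrap token and the CA hash. If the token has expired, running `kubeadm token create --print-join-command` on a master prints a fresh, complete command. If you assemble the command by hand instead, the following sketch sanity-checks the pieces first. It checks the token against the bootstrap-token format `[a-z0-9]{6}.[a-z0-9]{16}` and the hash against 64 hex characters. `build_join_cmd` is a hypothetical helper:

```bash
#!/bin/sh
# Hypothetical helper: assemble a worker `kubeadm join` command after checking
# that the bootstrap token and the CA public-key hash are well-formed.
build_join_cmd() {
  local endpoint token ca_hash
  endpoint=$1
  token=$2
  ca_hash=${3#sha256:}   # accept the hash with or without the "sha256:" prefix
  if [ -z "$endpoint" ]; then
    echo "missing API server endpoint (host:port)" >&2
    return 1
  fi
  # Bootstrap tokens look like <6 chars>.<16 chars>, using only a-z and 0-9.
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "invalid bootstrap token: $token" >&2
    return 1
  fi
  # The discovery hash is a SHA-256 digest: exactly 64 lowercase hex characters.
  if ! printf '%s\n' "$ca_hash" | grep -Eq '^[0-9a-f]{64}$'; then
    echo "invalid CA cert hash: $ca_hash" >&2
    return 1
  fi
  echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$ca_hash"
}
```

With the token and hash from this section, `build_join_cmd 10.4.7.59:6443 j8eck0.u5vu8pxb63eq9bxb 6cdf0e0c66e095beca8b7eadb74da19aa904b45e2378bf53172c7f633c0dc9e8` prints the same join command that was run on centos-7-56.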

3.2. Removing a node

3.2.1. Simulating a workload

To simulate a production environment, create three Deployments with 10 replicas each.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx-pod
          image: nginx:latest
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: busybox
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: busybox
  template:
    metadata:
      labels:
        app: busybox
    spec:
      containers:
        - name: busybox-pod
          image: busybox:latest
          command:
            - sleep
            - "3600"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: os
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: centos
  template:
    metadata:
      labels:
        app: centos
    spec:
      containers:
        - name: centos-pod
          image: centos:7
          command:
            - sleep
            - "3600"
---

[root@centos-7-51 ~]# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
busybox-64dbb4f97f-45v8x 1/1 Running 0 107s 172.16.3.14 centos-7-54 <none> <none>
busybox-64dbb4f97f-4d2xx 1/1 Running 0 107s 172.16.4.15 centos-7-55 <none> <none>
busybox-64dbb4f97f-5pq8g 1/1 Running 0 107s 172.16.5.4 centos-7-56 <none> <none>
busybox-64dbb4f97f-9tkwg 1/1 Running 0 107s 172.16.5.9 centos-7-56 <none> <none>
busybox-64dbb4f97f-fffqp 1/1 Running 0 107s 172.16.5.7 centos-7-56 <none> <none>
busybox-64dbb4f97f-hr25g 1/1 Running 0 107s 172.16.4.21 centos-7-55 <none> <none>
busybox-64dbb4f97f-jj2gt 1/1 Running 0 107s 172.16.3.17 centos-7-54 <none> <none>
busybox-64dbb4f97f-km2gj 1/1 Running 0 107s 172.16.4.19 centos-7-55 <none> <none>
busybox-64dbb4f97f-ndqsm 1/1 Running 0 107s 172.16.3.20 centos-7-54 <none> <none>
busybox-64dbb4f97f-rh97p 1/1 Running 0 107s 172.16.4.18 centos-7-55 <none> <none>
nginx-deploy-646dfb87b-4lmgj 1/1 Running 0 107s 172.16.3.15 centos-7-54 <none> <none>
nginx-deploy-646dfb87b-52cqf 1/1 Running 0 107s 172.16.5.6 centos-7-56 <none> <none>
nginx-deploy-646dfb87b-7xv98 1/1 Running 0 107s 172.16.3.21 centos-7-54 <none> <none>
nginx-deploy-646dfb87b-ghr7d 1/1 Running 0 107s 172.16.4.14 centos-7-55 <none> <none>
nginx-deploy-646dfb87b-h2mxc 1/1 Running 0 107s 172.16.5.12 centos-7-56 <none> <none>
nginx-deploy-646dfb87b-h98ns 1/1 Running 0 107s 172.16.5.10 centos-7-56 <none> <none>
nginx-deploy-646dfb87b-rsg4k 1/1 Running 0 107s 172.16.4.12 centos-7-55 <none> <none>
nginx-deploy-646dfb87b-szqpc 1/1 Running 0 107s 172.16.3.22 centos-7-54 <none> <none>
nginx-deploy-646dfb87b-wltv4 1/1 Running 0 107s 172.16.5.3 centos-7-56 <none> <none>
nginx-deploy-646dfb87b-xzg4q 1/1 Running 0 107s 172.16.4.17 centos-7-55 <none> <none>
os-7df59bfb4f-5xn7h 1/1 Running 0 107s 172.16.5.11 centos-7-56 <none> <none>
os-7df59bfb4f-7k2xq 1/1 Running 0 106s 172.16.4.11 centos-7-55 <none> <none>
os-7df59bfb4f-7pv59 1/1 Running 0 107s 172.16.4.20 centos-7-55 <none> <none>
os-7df59bfb4f-bkmcv 1/1 Running 0 107s 172.16.3.16 centos-7-54 <none> <none>
os-7df59bfb4f-bt7gg 1/1 Running 0 107s 172.16.3.19 centos-7-54 <none> <none>
os-7df59bfb4f-cv2x2 1/1 Running 0 107s 172.16.5.5 centos-7-56 <none> <none>
os-7df59bfb4f-jwrgm 1/1 Running 0 107s 172.16.5.8 centos-7-56 <none> <none>
os-7df59bfb4f-mfmzq 1/1 Running 0 106s 172.16.4.13 centos-7-55 <none> <none>
os-7df59bfb4f-t6lh4 1/1 Running 0 106s 172.16.3.18 centos-7-54 <none> <none>
os-7df59bfb4f-whqdn 1/1 Running 0 107s 172.16.4.16 centos-7-55 <none> <none>
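You can tally the NODE column of the listing above to see how the 30 pods are spread across the nodes. After the drain, the same tally with `-A` shows what is still left on the drained node. The following is a minimal sketch that locates NODE from the header line, so it works with or without the NAMESPACE column. It assumes one whitespace-separated token per column, as in the kubectl 1.17/1.18 output shown here. `pods_per_node` is a hypothetical helper:

```bash
#!/bin/sh
# Hypothetical helper: count pods per node from `kubectl get pod -o wide`
# output on stdin. The NODE column is located from the header (first match,
# so the later "NOMINATED NODE" header is not picked up).
pods_per_node() {
  local counts
  counts=$(awk '
    NR == 1 {
      for (i = 1; i <= NF; i++) if ($i == "NODE" && !col) col = i
      if (!col) { print "no NODE column in header" > "/dev/stderr"; exit 1 }
      next
    }
    { count[$col]++ }
    END { for (n in count) print n, count[n] }
  ') || return 1
  # Print "node count" lines sorted by node name (nothing if there were no rows).
  [ -z "$counts" ] || printf '%s\n' "$counts" | sort
}
```

Usage: `kubectl get pod -o wide | pods_per_node` before the drain, or `kubectl get pod -A -o wide | pods_per_node` afterwards.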

3.2.2. Removing the node

[root@centos-7-51 ~]# kubectl drain centos-7-56 --delete-local-data --force --ignore-daemonsets # evict the pods
node/centos-7-56 cordoned
evicting pod "os-7df59bfb4f-jwrgm"
evicting pod "busybox-64dbb4f97f-5pq8g"
evicting pod "busybox-64dbb4f97f-9tkwg"
evicting pod "busybox-64dbb4f97f-fffqp"
evicting pod "nginx-deploy-646dfb87b-52cqf"
evicting pod "nginx-deploy-646dfb87b-h2mxc"
evicting pod "nginx-deploy-646dfb87b-h98ns"
evicting pod "nginx-deploy-646dfb87b-wltv4"
evicting pod "os-7df59bfb4f-5xn7h"
evicting pod "os-7df59bfb4f-cv2x2"
pod/nginx-deploy-646dfb87b-52cqf evicted
pod/nginx-deploy-646dfb87b-wltv4 evicted
pod/nginx-deploy-646dfb87b-h2mxc evicted
pod/nginx-deploy-646dfb87b-h98ns evicted
pod/os-7df59bfb4f-jwrgm evicted
pod/busybox-64dbb4f97f-5pq8g evicted
pod/busybox-64dbb4f97f-9tkwg evicted
pod/os-7df59bfb4f-5xn7h evicted
pod/busybox-64dbb4f97f-fffqp evicted
pod/os-7df59bfb4f-cv2x2 evicted
node/centos-7-56 evicted
[root@centos-7-51 ~]# kubectl get pod -A -o wide | grep centos-7-56
kube-system kube-flannel-ds-944j9 1/1 Running 0 15m 10.4.7.56 centos-7-56 <none> <none>
kube-system kube-proxy-rjlc7 1/1 Running 0 19m 10.4.7.56 centos-7-56 <none> <none>
kube-system traefik-ingress-controller-w8w2c 1/1 Running 0 15m 172.16.5.2 centos-7-56 <none> <none>
[root@centos-7-56 ~]# kubeadm reset # reset the node
[root@centos-7-56 ~]# iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
[root@centos-7-56 ~]# ipvsadm -C # clear IPVS rules
[root@centos-7-56 ~]# rm -fr /etc/cni/net.d # remove the CNI configuration
[root@centos-7-51 ~]# kubectl delete node centos-7-56 # delete the node object

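3.3. Upgrading the cluster

In day-to-day operations, Kubernetes needs to be upgraded from time to time. There are two kinds of upgrade: a minor-version upgrade, such as 1.17.x to 1.18.x, and a patch-version upgrade, such as 1.17.x to 1.17.y. A minor-version upgrade cannot skip a version, so you cannot upgrade directly from 1.y.x to 1.(y+2).x! This lab upgrades the cluster from 1.17 to 1.18. Reference documentation (Chinese edition): https://v1-18.docs.kubernetes.io/zh/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade

The Kubernetes 1.18 release notes are at https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md and mention no change to the supported Docker versions, so Docker is not upgraded in this lab.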
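You can check the no-skipping rule mechanically before touching any package. The following is a minimal sketch in POSIX sh. `check_upgrade_path` is a hypothetical helper that only compares version strings of the form MAJOR.MINOR.PATCH (a leading `v` is accepted). It does not consult kubeadm itself:

```bash
#!/bin/sh
# Hypothetical helper: classify an upgrade from version $1 to version $2.
# Allowed: a patch upgrade within the same minor (1.17.x -> 1.17.y), or
# exactly one minor step (1.17.x -> 1.18.y). Skipping a minor is rejected.
check_upgrade_path() {
  local from to fmaj fmin fpat tmaj tmin tpat n
  from=${1#v}
  to=${2#v}
  # Split MAJOR.MINOR.PATCH on dots.
  IFS=. read -r fmaj fmin fpat <<EOF
$from
EOF
  IFS=. read -r tmaj tmin tpat <<EOF
$to
EOF
  # Every component must be a non-empty run of digits.
  for n in "$fmaj" "$fmin" "$fpat" "$tmaj" "$tmin" "$tpat"; do
    case "$n" in
      ''|*[!0-9]*) echo "malformed version: $1 -> $2" >&2; return 2 ;;
    esac
  done
  if [ "$fmaj" -ne "$tmaj" ]; then
    echo "major version change: not handled by this check" >&2
    return 1
  fi
  if [ "$tmin" -eq "$fmin" ]; then
    if [ "$tpat" -gt "$fpat" ]; then
      echo "patch upgrade"
    elif [ "$tpat" -eq "$fpat" ]; then
      echo "already at $to"
    else
      echo "downgrade not allowed: $from -> $to" >&2
      return 1
    fi
  elif [ "$tmin" -eq $((fmin + 1)) ]; then
    echo "minor upgrade"
  else
    echo "not allowed: $from -> $to (minor versions cannot be skipped or rolled back)" >&2
    return 1
  fi
}
```

For this lab, `check_upgrade_path v1.17.14 v1.18.12` prints `minor upgrade`, while `check_upgrade_path 1.17.14 1.19.4` is rejected.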

[root@centos-7-51 ~]# yum list --showduplicates kubeadm | grep 1.18 # upgrade to the latest 1.18 release, 1.18.12
kubeadm.x86_64 1.18.0-0 kubernetes
kubeadm.x86_64 1.18.1-0 kubernetes
kubeadm.x86_64 1.18.2-0 kubernetes
kubeadm.x86_64 1.18.3-0 kubernetes
kubeadm.x86_64 1.18.4-0 kubernetes
kubeadm.x86_64 1.18.4-1 kubernetes
kubeadm.x86_64 1.18.5-0 kubernetes
kubeadm.x86_64 1.18.6-0 kubernetes
kubeadm.x86_64 1.18.8-0 kubernetes
kubeadm.x86_64 1.18.9-0 kubernetes
kubeadm.x86_64 1.18.10-0 kubernetes
kubeadm.x86_64 1.18.12-0 kubernetes
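You can pick the newest 1.18 package out of the list with a version-aware sort instead of reading it by eye. The following is a minimal sketch that reads `yum list --showduplicates kubeadm` output on stdin. It assumes the NAME VERSION REPO column layout shown above and relies on GNU `sort -V`. `latest_kubeadm` is a hypothetical helper:

```bash
#!/bin/sh
# Hypothetical helper: print the newest kubeadm version-release in a minor
# series (e.g. "1.18") from `yum list --showduplicates kubeadm` output on stdin.
# Only lines whose package name starts with "kubeadm." and whose version
# starts with "<series>." are considered; sort -V orders 1.18.4 before 1.18.10.
latest_kubeadm() {
  awk -v s="$1" '$1 ~ /^kubeadm\./ && index($2, s ".") == 1 { print $2 }' \
    | sort -V | tail -n 1
}
```

With the repository state shown above, `yum list --showduplicates kubeadm | latest_kubeadm 1.18` prints `1.18.12-0`, which matches the package installed in the next step.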

3.3.1. Upgrading the first master

[root@centos-7-51 ~]# yum install -y kubeadm-1.18.12-0
[root@centos-7-51 ~]# kubeadm version # verify the version
kubeadm version: &version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.12", GitCommit:"7cd5e9086de8ae25d6a1514d0c87bac67ca4a481", GitTreeState:"clean", BuildDate:"2020-11-12T09:16:40Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}
[root@centos-7-51 ~]# kubectl get node
NAME          STATUS   ROLES    AGE   VERSION
centos-7-51   Ready    master   35h   v1.17.14
centos-7-52   Ready    master   35h   v1.17.14
centos-7-53   Ready    master   35h   v1.17.14
centos-7-54   Ready    <none>   35h   v1.17.14
centos-7-55   Ready    <none>   35h   v1.17.14
[root@centos-7-51 ~]# kubectl drain centos-7-51 --ignore-daemonsets # drain centos-7-51
node/centos-7-51 cordoned
node/centos-7-51 drained
[root@centos-7-51 ~]# kubectl get node
NAME          STATUS                     ROLES    AGE   VERSION
centos-7-51   Ready,SchedulingDisabled   master   35h   v1.17.14
centos-7-52   Ready                      master   35h   v1.17.14
centos-7-53   Ready                      master   35h   v1.17.14
centos-7-54   Ready                      <none>   35h   v1.17.14
centos-7-55   Ready                      <none>   35h   v1.17.14
[root@centos-7-51 ~]# kubectl get pod -A -o wide | grep centos-7-51 # the dashboard pod is here because it is configured to be allowed on masters; it can be ignored during the upgrade
kube-system etcd-centos-7-51 1/1 Running 1 35h 10.4.7.51 centos-7-51 <none> <none>
kube-system kube-apiserver-centos-7-51 1/1 Running 1 35h 10.4.7.51 centos-7-51 <none> <none>
kube-system kube-controller-manager-centos-7-51 1/1 Running 2 35h 10.4.7.51 centos-7-51 <none> <none>
kube-system kube-flannel-ds-c2bs6 1/1 Running 1 34h 10.4.7.51 centos-7-51 <none> <none>
kube-system kube-proxy-f6bmd 1/1 Running 0 13h 10.4.7.51 centos-7-51 <none> <none>
kube-system kube-scheduler-centos-7-51 1/1 Running 1 35h 10.4.7.51 centos-7-51 <none> <none>
kubernetes-dashboard kubernetes-dashboard-ffb9ffc86-2np2t 1/1 Running 0 15h 172.16.0.3 centos-7-51 <none> <none>

[root@centos-7-51 ~]# kubeadm upgrade plan # 检查升级计划

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.17.14

[upgrade/versions] kubeadm version: v1.18.12

I1206 09:27:00.744351 33247 version.go:252] remote version is much newer: v1.19.4; falling back to: stable-1.18

[upgrade/versions] Latest stable version: v1.18.12

[upgrade/versions] Latest stable version: v1.18.12

[upgrade/versions] Latest version in the v1.17 series: v1.17.14

[upgrade/versions] Latest version in the v1.17 series: v1.17.14

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 5 x v1.17.14 v1.18.12

Upgrade to the latest stable version:

COMPONENT CURRENT AVAILABLE

API Server v1.17.14 v1.18.12

Controller Manager v1.17.14 v1.18.12

Scheduler v1.17.14 v1.18.12

Kube Proxy v1.17.14 v1.18.12

CoreDNS 1.6.5 1.6.7

Etcd 3.4.3 3.4.3-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.18.12
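`kubeadm upgrade plan` ends with the exact command to run. When scripting the upgrade, the target version can be taken from that line instead of being retyped. The following is a minimal sketch, assuming the output format shown above. Other kubeadm releases may word it differently. `plan_target_version` is a hypothetical helper name. If the plan lists several options, the helper returns the first suggested version.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the vX.Y.Z from the first line of the form
# "kubeadm upgrade apply vX.Y.Z" in `kubeadm upgrade plan` output (stdin).
# Lines that merely mention 'kubeadm upgrade apply' in prose do not match,
# because the command must start the line and be followed by a version.
plan_target_version() {
  awk '$1 == "kubeadm" && $2 == "upgrade" && $3 == "apply" && $4 ~ /^v[0-9]/ { print $4; exit }'
}
```

For example, run `target=$(kubeadm upgrade plan | plan_target_version)`, then `kubeadm upgrade apply "$target"`. Note the Kubelet row in the plan. The five kubelets are not upgraded by `kubeadm upgrade apply` and still have to be upgraded manually on each node afterwards.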

# The upgrade renews the certificates automatically, and images are pulled from the same repository that was used at init time
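Both behaviors come from the cluster state. As the `[upgrade/config]` lines show, `kubeadm upgrade` reads the ClusterConfiguration stored in the `kubeadm-config` ConfigMap, so the `imageRepository` chosen at `kubeadm init` is reused. `kubeadm upgrade apply` also renews the control-plane certificates by default, because its `--certificate-renewal` flag defaults to true. The `Renewing ... certificate` lines in the apply output reflect this. The following sketch checks the repository before applying. It is a plain text match rather than a YAML parser, and `image_repository` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the imageRepository recorded in the ClusterConfiguration,
# reading `kubectl -n kube-system get cm kubeadm-config -o yaml` on stdin.
# Assumes a single "imageRepository: <value>" line, as kubeadm writes it.
# Exit status 1 if no such line is found.
image_repository() {
  awk '$1 == "imageRepository:" { print $2; found = 1; exit } END { exit !found }'
}
```

For example, run `kubectl -n kube-system get cm kubeadm-config -o yaml | image_repository`. After the upgrade, `kubeadm alpha certs check-expiration`, which is where this subcommand lives in v1.18, lists the new certificate expiry dates.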

[root@centos-7-51 ~]# kubeadm upgrade apply v1.18.12

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade/version] You have chosen to change the cluster version to "v1.18.12"

[upgrade/versions] Cluster version: v1.17.14

[upgrade/versions] kubeadm version: v1.18.12

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/prepull] Prepulling image for component etcd.

[upgrade/prepull] Prepulling image for component kube-apiserver.

[upgrade/prepull] Prepulling image for component kube-controller-manager.

[upgrade/prepull] Prepulling image for component kube-scheduler.

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[upgrade/prepull] Prepulled image for component etcd.

[upgrade/prepull] Prepulled image for component kube-apiserver.

[upgrade/prepull] Prepulled image for component kube-scheduler.

[upgrade/prepull] Prepulled image for component kube-controller-manager.

[upgrade/prepull] Successfully prepulled the images for all the control plane components

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.18.12"...

Static pod: kube-apiserver-centos-7-51 hash: d66ea877d4c9baceca5434f6c40e56c3

Static pod: kube-controller-manager-centos-7-51 hash: 3b690a04ed3bb330968e5355fcb77dfe

Static pod: kube-scheduler-centos-7-51 hash: 92ea98b5d89a947c103103de725f5e88

[upgrade/etcd] Upgrading to TLS for etcd

[upgrade/etcd] Non fatal issue encountered during upgrade: the desired etcd version for this Kubernetes version "v1.18.12" is "3.4.3-0", but the current etcd version is "3.4.3". Won't downgrade etcd, instead just continue

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests011210605"

W1206 09:31:26.483819 35018 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[upgrade/staticpods] Preparing for "kube-apiserver" upgrade

[upgrade/staticpods] Renewing apiserver certificate

[upgrade/staticpods] Renewing apiserver-kubelet-client certificate

[upgrade/staticpods] Renewing front-proxy-client certificate

[upgrade/staticpods] Renewing apiserver-etcd-client certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-12-06-09-31-24/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-centos-7-51 hash: d66ea877d4c9baceca5434f6c40e56c3

Static pod: kube-apiserver-centos-7-51 hash: d66ea877d4c9baceca5434f6c40e56c3

Static pod: kube-apiserver-centos-7-51 hash: d176ad7b601e72e4540b622eb6333914

[apiclient] Found 3 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

[upgrade/staticpods] Renewing controller-manager.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-12-06-09-31-24/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-centos-7-51 hash: 3b690a04ed3bb330968e5355fcb77dfe

Static pod: kube-controller-manager-centos-7-51 hash: 51215cf52dca86b9d58c8d50b2498fbe

[apiclient] Found 3 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-scheduler" upgrade

[upgrade/staticpods] Renewing scheduler.conf certificate