In the previous homework you implemented a fully-connected two-layer neural network on CIFAR-10. The implementation was simple but not very modular since the loss and gradient were computed in a single monolithic function. This is manageable for a simple two-layer network, but would become impractical as we move to bigger models. Ideally we want to build networks using a more modular design so that we can implement different layer types in isolation and then snap them together into models with different architectures.

In this exercise we will implement fully-connected networks using a more modular approach. For each layer we will implement a forward and a backward function. The forward function will receive inputs, weights, and other parameters and will return both an output and a cache object storing data needed for the backward pass, like this:

def layer_forward(x, w):
    """ Receive inputs x and weights w """
    # Do some computations ...
    z = # ... some intermediate value
    # Do some more computations ...
    out = # the output

    cache = (x, w, z, out) # Values we need to compute gradients

    return out, cache

The backward pass will receive upstream derivatives and the cache object, and will return gradients with respect to the inputs and weights, like this:

def layer_backward(dout, cache):
    """
    Receive dout (derivative of loss with respect to outputs) and cache,
    and compute derivative with respect to inputs.
    """
    # Unpack cache values
    x, w, z, out = cache

    # Use values in cache to compute derivatives
    dx = # Derivative of loss with respect to x
    dw = # Derivative of loss with respect to w

    return dx, dw
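To make the forward/backward pattern concrete, here is a minimal sketch for a toy layer that multiplies each input feature by a weight. The names `scale_forward` and `scale_backward` are hypothetical and are not part of the assignment code; they only illustrate how the cache carries values from the forward pass to the backward pass.

```python
import numpy as np

def scale_forward(x, w):
    """Hypothetical layer: scale each feature of x (N, D) by w (D,)."""
    out = x * w
    cache = (x, w)  # values the backward pass will need
    return out, cache

def scale_backward(dout, cache):
    """Return gradients of the loss with respect to x and w."""
    x, w = cache
    dx = dout * w                  # same shape as x
    dw = np.sum(dout * x, axis=0)  # sum over the batch, same shape as w
    return dx, dw

x = np.array([[1.0, 2.0], [3.0, 4.0]])
w = np.array([0.5, -1.0])
out, cache = scale_forward(x, w)
dx, dw = scale_backward(np.ones_like(out), cache)
print(out, dx, dw)
```

Each gradient has the same shape as the quantity it differentiates, which is a useful first check for any backward function.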

After implementing a bunch of layers this way, we will be able to easily combine them to build classifiers with different architectures.

In addition to implementing fully-connected networks of arbitrary depth, we will also explore different update rules for optimization, and introduce Dropout as a regularizer and Batch/Layer Normalization as a tool to more efficiently optimize deep networks.

# As usual, a bit of setup
from __future__ import print_function
import time
import numpy as np
import matplotlib.pyplot as plt
from cs231n.classifiers.fc_net import *
from cs231n.data_utils import get_CIFAR10_data
from cs231n.gradient_check import eval_numerical_gradient, eval_numerical_gradient_array
from cs231n.solver import Solver

%matplotlib inline
plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots
plt.rcParams['image.interpolation'] = 'nearest'
plt.rcParams['image.cmap'] = 'gray'

# for auto-reloading external modules
# see http://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython
%load_ext autoreload
%autoreload 2

def rel_error(x, y):
    """ returns relative error """
    return np.max(np.abs(x - y) / (np.maximum(1e-8, np.abs(x) + np.abs(y))))

run the following from the cs231n directory and try again:
python setup.py build_ext --inplace
You may also need to restart your iPython kernel

# Load the (preprocessed) CIFAR10 data.
data = get_CIFAR10_data()
for k, v in list(data.items()):
    print(('%s: ' % k, v.shape))

('X_train: ', (49000, 3, 32, 32))
('y_train: ', (49000,))
('X_val: ', (1000, 3, 32, 32))
('y_val: ', (1000,))
('X_test: ', (1000, 3, 32, 32))
('y_test: ', (1000,))

Affine layer: forward

Open the file cs231n/layers.py and implement the affine_forward function.
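As a rough guide, an affine forward pass flattens each input example into a row vector and applies a matrix multiply plus a bias. The sketch below assumes `x` has shape (N, d_1, ..., d_k), `w` has shape (D, M) with D = d_1 * ... * d_k, and `b` has shape (M,). The name `affine_forward_sketch` is hypothetical and this is not the reference solution.

```python
import numpy as np

def affine_forward_sketch(x, w, b):
    """Compute out = x_flat @ w + b, where x_flat has shape (N, D)."""
    N = x.shape[0]
    x_flat = x.reshape(N, -1)  # collapse all non-batch dimensions
    out = x_flat.dot(w) + b    # b broadcasts across the batch
    cache = (x, w, b)          # keep the original x so its shape can be restored later
    return out, cache

x = np.ones((2, 4, 5, 6))
w = np.zeros((4 * 5 * 6, 3))
b = np.array([1.0, 2.0, 3.0])
out, _ = affine_forward_sketch(x, w, b)
print(out.shape)
```

Caching the unflattened `x` is a design choice: the backward pass can then reshape `dx` back to the input's original shape.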

Once you are done you can test your implementation by running the following:

# Test the affine_forward function

num_inputs = 2
input_shape = (4, 5, 6)
output_dim = 3

input_size = num_inputs * np.prod(input_shape)
weight_size = output_dim * np.prod(input_shape)

x = np.linspace(-0.1, 0.5, num=input_size).reshape(num_inputs, *input_shape)
w = np.linspace(-0.2, 0.3, num=weight_size).reshape(np.prod(input_shape), output_dim)
b = np.linspace(-0.3, 0.1, num=output_dim)

out, _ = affine_forward(x, w, b)
correct_out = np.array([[ 1.49834967,  1.70660132,  1.91485297],
                        [ 3.25553199,  3.5141327,   3.77273342]])

# Compare your output with ours. The error should be around e-9 or less.
print('Testing affine_forward function:')
print('difference: ', rel_error(out, correct_out))

Testing affine_forward function:
difference: 9.769849468192957e-10

Affine layer: backward

Now implement the affine_backward function and test your implementation using numeric gradient checking.

# Test the affine_backward function
np.random.seed(231)
x = np.random.randn(10, 2, 3)
w = np.random.randn(6, 5)
b = np.random.randn(5)
dout = np.random.randn(10, 5)

dx_num = eval_numerical_gradient_array(lambda x: affine_forward(x, w, b)[0], x, dout)
dw_num = eval_numerical_gradient_array(lambda w: affine_forward(x, w, b)[0], w, dout)
db_num = eval_numerical_gradient_array(lambda b: affine_forward(x, w, b)[0], b, dout)

_, cache = affine_forward(x, w, b)
dx, dw, db = affine_backward(dout, cache)

# The error should be around e-10 or less
print('Testing affine_backward function:')
print('dx error: ', rel_error(dx_num, dx))
print('dw error: ', rel_error(dw_num, dw))
print('db error: ', rel_error(db_num, db))

Testing affine_backward function:
dx error: 5.399100368651805e-11
dw error: 9.904211865398145e-11
db error: 2.4122867568119087e-11
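Numeric gradient checking compares an analytic gradient against a centered finite-difference estimate. The sketch below shows one way such a check could work for a function that returns an array. `numeric_grad_array` is a hypothetical stand-in written for illustration, not the course's `eval_numerical_gradient_array`, and the step size `h=1e-5` is an assumed default.

```python
import numpy as np

def numeric_grad_array(f, x, df, h=1e-5):
    """Centered-difference estimate of d(sum(f(x) * df)) / dx."""
    grad = np.zeros_like(x)
    for ix in np.ndindex(*x.shape):
        old = x[ix]
        x[ix] = old + h
        pos = f(x).copy()  # f evaluated at x + h in coordinate ix
        x[ix] = old - h
        neg = f(x).copy()  # f evaluated at x - h in coordinate ix
        x[ix] = old        # restore the original value
        grad[ix] = np.sum((pos - neg) * df) / (2 * h)
    return grad

def rel_error(x, y):
    return np.max(np.abs(x - y) / np.maximum(1e-8, np.abs(x) + np.abs(y)))

# Check the analytic gradient of f(x) = x**2, which is 2 * x * dout.
x = np.array([[1.0, -2.0], [0.5, 3.0]])
dout = np.array([[1.0, 2.0], [3.0, 4.0]])
dx_num = numeric_grad_array(lambda v: v ** 2, x, dout)
dx = 2 * x * dout
print('dx error:', rel_error(dx_num, dx))
```

Weighting the difference by the upstream gradient `df` mirrors what a backward function does: it turns the Jacobian of an array-valued function into the gradient of a scalar loss.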

ReLU activation: forward

Implement the forward pass for the ReLU activation function in the relu_forward function and test your implementation using the following:

# Test the relu_forward function

x = np.linspace(-0.5, 0.5, num=12).reshape(3, 4)

out, _ = relu_forward(x)
correct_out = np.array([[ 0.,          0.,          0.,          0.,        ],
                        [ 0.,          0.,          0.04545455,  0.13636364,],
                        [ 0.22727273,  0.31818182,  0.40909091,  0.5,       ]])

# Compare your output with ours. The error should be on the order of e-8
print('Testing relu_forward function:')
print('difference: ', rel_error(out, correct_out))

Testing relu_forward function:
difference: 4.999999798022158e-08

ReLU activation: backward

Now implement the backward pass for the ReLU activation function in the relu_backward function and test your implementation using numeric gradient checking:

np.random.seed(231)
x = np.random.randn(10, 10)
dout = np.random.randn(*x.shape)

dx_num = eval_numerical_gradient_array(lambda x: relu_forward(x)[0], x, dout)

_, cache = relu_forward(x)
dx = relu_backward(dout, cache)

# The error should be on the order of e-12
print('Testing relu_backward function:')
print('dx error: ', rel_error(dx_num, dx))

Testing relu_backward function:
dx error: 3.2756349136310288e-12
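For reference, a ReLU layer can be sketched in a few lines: the forward pass clamps negative values to zero, and the backward pass lets the upstream gradient through only where the input was positive. The `_sketch` names are hypothetical and this is one possible implementation, not the reference solution.

```python
import numpy as np

def relu_forward_sketch(x):
    """Elementwise max(0, x); cache the input for the backward pass."""
    out = np.maximum(0, x)
    cache = x
    return out, cache

def relu_backward_sketch(dout, cache):
    """Pass the upstream gradient only where the input was positive."""
    x = cache
    dx = dout * (x > 0)
    return dx

x = np.array([[-1.0, 0.0, 2.0]])
out, cache = relu_forward_sketch(x)
dx = relu_backward_sketch(np.array([[5.0, 5.0, 5.0]]), cache)
print(out, dx)
```

This sketch treats the gradient at exactly zero as zero, which is a common convention; the function is not differentiable at that point.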

Inline Question 1:

We’ve only asked you to implement ReLU, but there are a number of different activation functions that one could use in neural networks, each with its pros and cons. In particular, an issue commonly seen with activation functions is getting zero (or close to zero) gradient flow during backpropagation. Which of the following activation functions have this problem? If you consider these functions in the one dimensional case, what types of input would lead to this behaviour?

- Sigmoid

- ReLU

- Leaky ReLU

Answer:

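a. Sigmoid and ReLU. Leaky ReLU does not have this problem, because its slope for negative inputs is small but nonzero.

b. In one dimension, the sigmoid saturates for inputs of large magnitude (strongly positive or strongly negative), where its gradient approaches zero. ReLU has exactly zero gradient for every negative input.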
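The one-dimensional gradients of the three candidate activations can be evaluated directly to see where they vanish. The sketch below is illustrative only; the leaky slope `alpha=0.01` is an assumed value, and the helper names are hypothetical.

```python
import numpy as np

def sigmoid_grad(x):
    s = 1.0 / (1.0 + np.exp(-x))
    return s * (1.0 - s)

def relu_grad(x):
    return (x > 0).astype(float)

def leaky_relu_grad(x, alpha=0.01):
    # alpha is an assumed slope for negative inputs
    return np.where(x > 0, 1.0, alpha)

xs = np.array([-10.0, -1.0, 1.0, 10.0])
print('sigmoid   :', sigmoid_grad(xs))
print('relu      :', relu_grad(xs))
print('leaky relu:', leaky_relu_grad(xs))
```

At inputs of magnitude 10 the sigmoid gradient is already below 1e-4, the ReLU gradient is exactly zero for the negative inputs, and the leaky ReLU gradient never drops below `alpha`.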

“Sandwich” layers

There are some common patterns of layers that are frequently used in neural nets. For example, affine layers are frequently followed by a ReLU nonlinearity. To make these common patterns easy, we define several convenience layers in the file cs231n/layer_utils.py.

For now take a look at the affine_relu_forward and affine_relu_backward functions, and run the following to numerically gradient check the backward pass:

from cs231n.layer_utils import affine_relu_forward, affine_relu_backward
np.random.seed(231)
x = np.random.randn(2, 3, 4)
w = np.random.randn(12, 10)
b = np.random.randn(10)
dout = np.random.randn(2, 10)

out, cache = affine_relu_forward(x, w, b)
dx, dw, db = affine_relu_backward(dout, cache)

dx_num = eval_numerical_gradient_array(lambda x: affine_relu_forward(x, w, b)[0], x, dout)
dw_num = eval_numerical_gradient_array(lambda w: affine_relu_forward(x, w, b)[0], w, dout)
db_num = eval_numerical_gradient_array(lambda b: affine_relu_forward(x, w, b)[0], b, dout)

# Relative error should be around e-10 or less
print('Testing affine_relu_forward and affine_relu_backward:')
print('dx error: ', rel_error(dx_num, dx))
print('dw error: ', rel_error(dw_num, dw))
print('db error: ', rel_error(db_num, db))

Testing affine_relu_forward and affine_relu_backward:
dx error: 2.299579177309368e-11
dw error: 8.162011105764925e-11
db error: 7.826724021458994e-12
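A sandwich layer is just function composition: the forward pass chains the individual forward functions and bundles their caches, and the backward pass unwinds them in reverse order. The self-contained sketch below uses hypothetical `_sketch` helpers so it runs without the course code; the actual functions in cs231n/layer_utils.py may differ in detail.

```python
import numpy as np

def affine_forward_sketch(x, w, b):
    out = x.reshape(x.shape[0], -1).dot(w) + b
    return out, (x, w, b)

def affine_backward_sketch(dout, cache):
    x, w, b = cache
    dx = dout.dot(w.T).reshape(x.shape)
    dw = x.reshape(x.shape[0], -1).T.dot(dout)
    db = dout.sum(axis=0)
    return dx, dw, db

def relu_forward_sketch(x):
    return np.maximum(0, x), x

def relu_backward_sketch(dout, cache):
    return dout * (cache > 0)

def affine_relu_forward_sketch(x, w, b):
    a, fc_cache = affine_forward_sketch(x, w, b)
    out, relu_cache = relu_forward_sketch(a)
    return out, (fc_cache, relu_cache)  # one cache per sub-layer

def affine_relu_backward_sketch(dout, cache):
    fc_cache, relu_cache = cache
    da = relu_backward_sketch(dout, relu_cache)  # undo ReLU first
    return affine_backward_sketch(da, fc_cache)  # then the affine layer

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 3, 4))
w = rng.standard_normal((12, 10))
b = rng.standard_normal(10)
out, cache = affine_relu_forward_sketch(x, w, b)
dx, dw, db = affine_relu_backward_sketch(rng.standard_normal((2, 10)), cache)
print(out.shape, dx.shape, dw.shape, db.shape)
```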

Loss layers: Softmax and SVM

You implemented these loss functions in the last assignment, so we’ll give them to you for free here. You should still make sure you understand how they work by looking at the implementations in cs231n/layers.py.

You can make sure that the implementations are correct by running the following:

np.random.seed(231)
num_classes, num_inputs = 10, 50
x = 0.001 * np.random.randn(num_inputs, num_classes)
y = np.random.randint(num_classes, size=num_inputs)

dx_num = eval_numerical_gradient(lambda x: svm_loss(x, y)[0], x, verbose=False)
loss, dx = svm_loss(x, y)

# Test svm_loss function. Loss should be around 9 and dx error should be around the order of e-9
print('Testing svm_loss:')
print('loss: ', loss)
print('dx error: ', rel_error(dx_num, dx))

dx_num = eval_numerical_gradient(lambda x: softmax_loss(x, y)[0], x, verbose=False)
loss, dx = softmax_loss(x, y)

# Test softmax_loss function. Loss should be close to 2.3 and dx error should be around e-8
print('\nTesting softmax_loss:')
print('loss: ', loss)
print('dx error: ', rel_error(dx_num, dx))

Testing svm_loss:
loss: 8.999602749096233
dx error: 1.4021566006651672e-09

Testing softmax_loss:
loss: 2.302545844500738
dx error: 9.384673161989355e-09
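The expected values of about 9 and 2.3 follow from the scores being close to zero. With C = 10 classes, a softmax over nearly equal scores assigns probability close to 1/C to each class, so the loss is close to ln(10) ≈ 2.303. A multiclass SVM with margin 1 incurs a margin of about 1 for each of the C - 1 wrong classes, giving about 9. The sketch below checks this numerically; the loss helpers are hypothetical simplified versions (loss value only), and the margin of 1 is an assumption about the SVM formulation.

```python
import numpy as np

def softmax_loss_value(scores, y):
    shifted = scores - scores.max(axis=1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(y)), y].mean()

def svm_loss_value(scores, y, margin=1.0):
    correct = scores[np.arange(len(y)), y][:, None]
    margins = np.maximum(0, scores - correct + margin)
    margins[np.arange(len(y)), y] = 0  # the correct class contributes nothing
    return margins.sum(axis=1).mean()

rng = np.random.default_rng(0)
scores = 0.001 * rng.standard_normal((50, 10))
y = rng.integers(10, size=50)
print('softmax:', softmax_loss_value(scores, y), 'ln(10):', np.log(10))
print('svm    :', svm_loss_value(scores, y))
```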

Two-layer network

In the previous assignment you implemented a two-layer neural network in a single monolithic class. Now that you have implemented modular versions of the necessary layers, you will reimplement the two layer network using these modular implementations.

Open the file cs231n/classifiers/fc_net.py and complete the implementation of the TwoLayerNet class. This class will serve as a model for the other networks you will implement in this assignment, so read through it to make sure you understand the API. You can run the cell below to test your implementation.

np.random.seed(231)
N, D, H, C = 3, 5, 50, 7
X = np.random.randn(N, D)
y = np.random.randint(C, size=N)

std = 1e-3
model = TwoLayerNet(input_dim=D, hidden_dim=H, num_classes=C, weight_scale=std)

print('Testing initialization ... ')
W1_std = abs(model.params['W1'].std() - std)
b1 = model.params['b1']
W2_std = abs(model.params['W2'].std() - std)
b2 = model.params['b2']
assert W1_std < std / 10, 'First layer weights do not seem right'
assert np.all(b1 == 0), 'First layer biases do not seem right'
assert W2_std < std / 10, 'Second layer weights do not seem right'
assert np.all(b2 == 0), 'Second layer biases do not seem right'

print('Testing test-time forward pass ... ')
model.params['W1'] = np.linspace(-0.7, 0.3, num=D*H).reshape(D, H)
model.params['b1'] = np.linspace(-0.1, 0.9, num=H)
model.params['W2'] = np.linspace(-0.3, 0.4, num=H*C).reshape(H, C)
model.params['b2'] = np.linspace(-0.9, 0.1, num=C)
X = np.linspace(-5.5, 4.5, num=N*D).reshape(D, N).T
scores = model.loss(X)
correct_scores = np.asarray(
  [[11.53165108, 12.2917344,  13.05181771, 13.81190102, 14.57198434, 15.33206765, 16.09215096],
   [12.05769098, 12.74614105, 13.43459113, 14.1230412,  14.81149128, 15.49994135, 16.18839143],
   [12.58373087, 13.20054771, 13.81736455, 14.43418138, 15.05099822, 15.66781506, 16.2846319 ]])
scores_diff = np.abs(scores - correct_scores).sum()
assert scores_diff < 1e-6, 'Problem with test-time forward pass'

print('Testing training loss (no regularization)')
y = np.asarray([0, 5, 1])
loss, grads = model.loss(X, y)
correct_loss = 3.4702243556
assert abs(loss - correct_loss) < 1e-10, 'Problem with training-time loss'

model.reg = 1.0
loss, grads = model.loss(X, y)
correct_loss = 26.5948426952
assert abs(loss - correct_loss) < 1e-10, 'Problem with regularization loss'

# Errors should be around e-7 or less
for reg in [0.0, 0.7]:
    print('Running numeric gradient check with reg = ', reg)
    model.reg = reg
    loss, grads = model.loss(X, y)

    for name in sorted(grads):
        f = lambda _: model.loss(X, y)[0]
        grad_num = eval_numerical_gradient(f, model.params[name], verbose=False)
        print('%s relative error: %.2e' % (name, rel_error(grad_num, grads[name])))

Testing initialization ...
Testing test-time forward pass ...
Testing training loss (no regularization)
Running numeric gradient check with reg = 0.0
W1 relative error: 1.83e-08
W2 relative error: 3.12e-10
b1 relative error: 9.83e-09
b2 relative error: 4.33e-10
Running numeric gradient check with reg = 0.7
W1 relative error: 2.53e-07
W2 relative error: 2.85e-08
b1 relative error: 1.56e-08
b2 relative error: 7.76e-10

Solver

In the previous assignment, the logic for training models was coupled to the models themselves. Following a more modular design, for this assignment we have split the logic for training models into a separate class.
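The separation can be pictured as a training loop that only relies on a model exposing a `params` dictionary and a `loss(X, y)` method returning the loss and gradients. The sketch below is a deliberately tiny illustration under that assumption. `LinearSoftmax` and `train_sgd` are hypothetical names, and the real Solver class has more features than this loop.

```python
import numpy as np

class LinearSoftmax:
    """Hypothetical stand-in model with the same interface: params + loss(X, y)."""
    def __init__(self, input_dim, num_classes, rng):
        self.params = {
            'W': 1e-2 * rng.standard_normal((input_dim, num_classes)),
            'b': np.zeros(num_classes),
        }

    def loss(self, X, y):
        scores = X.dot(self.params['W']) + self.params['b']
        shifted = scores - scores.max(axis=1, keepdims=True)
        probs = np.exp(shifted)
        probs /= probs.sum(axis=1, keepdims=True)
        N = X.shape[0]
        loss = -np.log(probs[np.arange(N), y]).mean()
        dscores = probs.copy()
        dscores[np.arange(N), y] -= 1
        dscores /= N
        grads = {'W': X.T.dot(dscores), 'b': dscores.sum(axis=0)}
        return loss, grads

def train_sgd(model, X, y, learning_rate=0.5, num_iters=200, batch_size=20, rng=None):
    """Vanilla minibatch SGD that knows nothing about the model's internals."""
    if rng is None:
        rng = np.random.default_rng(0)
    history = []
    for _ in range(num_iters):
        idx = rng.choice(X.shape[0], batch_size)  # sample a minibatch
        loss, grads = model.loss(X[idx], y[idx])
        history.append(loss)
        for name in model.params:
            model.params[name] -= learning_rate * grads[name]
    return history

rng = np.random.default_rng(0)
X = rng.standard_normal((100, 5))
y = (X[:, 0] > 0).astype(int)  # toy labels that depend on the first feature
model = LinearSoftmax(5, 2, rng)
history = train_sgd(model, X, y, rng=rng)
print('first loss:', history[0], 'mean of last 10:', np.mean(history[-10:]))
```

Because the loop touches the model only through `params` and `loss`, the same loop can train any architecture that follows this interface.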

Open the file cs231n/solver.py and read through it to familiarize yourself with the API. After doing so, use a Solver instance to train a TwoLayerNet that achieves at least 50% accuracy on the validation set.

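model = TwoLayerNet()
solver = None

##############################################################################
# TODO: Use a Solver instance to train a TwoLayerNet that achieves at least  #
# 50% accuracy on the validation set.                                        #
##############################################################################
# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

lrs = np.linspace(1e-6, 1e-2, 8)
solver = Solver(model, data,
                update_rule='sgd',
                optim_config={'learning_rate': 1e-3},
                lr_decay=0.95,
                num_epochs=8, batch_size=100,
                print_every=100)

# NOTE: the same Solver and model are reused for every value in lrs, so each
# train() call continues from the weights left by the previous call and the
# epoch counter keeps running (the log below counts past "Epoch 8 / 8").
# This is therefore not an independent search over learning rates. In the
# stock Solver the per-parameter optimizer configs appear to be copied at
# construction, so this assignment may not change the rate actually used.
for lr in lrs:
    solver.optim_config['learning_rate'] = lr
    solver.train()

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
##############################################################################
#                             END OF YOUR CODE                               #
##############################################################################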

(Iteration 1 / 3920) loss: 2.306114(Epoch 0 / 8) train acc: 0.135000; val_acc: 0.132000(Iteration 101 / 3920) loss: 1.854126(Iteration 201 / 3920) loss: 1.851763(Iteration 301 / 3920) loss: 1.558956(Iteration 401 / 3920) loss: 1.486990(Epoch 1 / 8) train acc: 0.405000; val_acc: 0.411000(Iteration 501 / 3920) loss: 1.460269(Iteration 601 / 3920) loss: 1.411556(Iteration 701 / 3920) loss: 1.595890(Iteration 801 / 3920) loss: 1.524085(Iteration 901 / 3920) loss: 1.226461(Epoch 2 / 8) train acc: 0.518000; val_acc: 0.468000(Iteration 1001 / 3920) loss: 1.603831(Iteration 1101 / 3920) loss: 1.573270(Iteration 1201 / 3920) loss: 1.215742(Iteration 1301 / 3920) loss: 1.298395(Iteration 1401 / 3920) loss: 1.114452(Epoch 3 / 8) train acc: 0.496000; val_acc: 0.463000(Iteration 1501 / 3920) loss: 1.408867(Iteration 1601 / 3920) loss: 1.205041(Iteration 1701 / 3920) loss: 1.304048(Iteration 1801 / 3920) loss: 1.367574(Iteration 1901 / 3920) loss: 1.328690(Epoch 4 / 8) train acc: 0.541000; val_acc: 0.516000(Iteration 2001 / 3920) loss: 1.364002(Iteration 2101 / 3920) loss: 1.263270(Iteration 2201 / 3920) loss: 1.406228(Iteration 2301 / 3920) loss: 1.257448(Iteration 2401 / 3920) loss: 1.387278(Epoch 5 / 8) train acc: 0.557000; val_acc: 0.503000(Iteration 2501 / 3920) loss: 1.292922(Iteration 2601 / 3920) loss: 1.239152(Iteration 2701 / 3920) loss: 1.184182(Iteration 2801 / 3920) loss: 1.409515(Iteration 2901 / 3920) loss: 1.401696(Epoch 6 / 8) train acc: 0.575000; val_acc: 0.514000(Iteration 3001 / 3920) loss: 1.075956(Iteration 3101 / 3920) loss: 1.216456(Iteration 3201 / 3920) loss: 1.179340(Iteration 3301 / 3920) loss: 1.210690(Iteration 3401 / 3920) loss: 1.324619(Epoch 7 / 8) train acc: 0.594000; val_acc: 0.514000(Iteration 3501 / 3920) loss: 1.140519(Iteration 3601 / 3920) loss: 1.198608(Iteration 3701 / 3920) loss: 1.366061(Iteration 3801 / 3920) loss: 1.160887(Iteration 3901 / 3920) loss: 1.180904(Epoch 8 / 8) train acc: 0.589000; val_acc: 0.494000(Iteration 1 / 3920) 
loss: 1.213504(Epoch 8 / 8) train acc: 0.542000; val_acc: 0.528000(Iteration 101 / 3920) loss: 1.304551(Iteration 201 / 3920) loss: 1.321262(Iteration 301 / 3920) loss: 1.103654(Iteration 401 / 3920) loss: 1.104092(Epoch 9 / 8) train acc: 0.577000; val_acc: 0.517000(Iteration 501 / 3920) loss: 1.059394(Iteration 601 / 3920) loss: 1.501449(Iteration 701 / 3920) loss: 1.020301(Iteration 801 / 3920) loss: 1.098940(Iteration 901 / 3920) loss: 1.140753(Epoch 10 / 8) train acc: 0.606000; val_acc: 0.501000(Iteration 1001 / 3920) loss: 1.257351(Iteration 1101 / 3920) loss: 1.223436(Iteration 1201 / 3920) loss: 1.025524(Iteration 1301 / 3920) loss: 1.236936(Iteration 1401 / 3920) loss: 1.335805(Epoch 11 / 8) train acc: 0.603000; val_acc: 0.530000(Iteration 1501 / 3920) loss: 1.085880(Iteration 1601 / 3920) loss: 1.087423(Iteration 1701 / 3920) loss: 1.122185(Iteration 1801 / 3920) loss: 1.141183(Iteration 1901 / 3920) loss: 1.214175(Epoch 12 / 8) train acc: 0.586000; val_acc: 0.516000(Iteration 2001 / 3920) loss: 1.192277(Iteration 2101 / 3920) loss: 1.228408(Iteration 2201 / 3920) loss: 1.101908(Iteration 2301 / 3920) loss: 1.249030(Iteration 2401 / 3920) loss: 1.371962(Epoch 13 / 8) train acc: 0.617000; val_acc: 0.515000(Iteration 2501 / 3920) loss: 1.084948(Iteration 2601 / 3920) loss: 1.069335(Iteration 2701 / 3920) loss: 1.073005(Iteration 2801 / 3920) loss: 1.306515(Iteration 2901 / 3920) loss: 1.146512(Epoch 14 / 8) train acc: 0.614000; val_acc: 0.530000(Iteration 3001 / 3920) loss: 1.059701(Iteration 3101 / 3920) loss: 0.943784(Iteration 3201 / 3920) loss: 0.933784(Iteration 3301 / 3920) loss: 1.177530(Iteration 3401 / 3920) loss: 1.154607(Epoch 15 / 8) train acc: 0.642000; val_acc: 0.507000(Iteration 3501 / 3920) loss: 1.120586(Iteration 3601 / 3920) loss: 1.299895(Iteration 3701 / 3920) loss: 1.179488(Iteration 3801 / 3920) loss: 1.160027(Iteration 3901 / 3920) loss: 0.992143(Epoch 16 / 8) train acc: 0.634000; val_acc: 0.520000(Iteration 1 / 3920) loss: 
1.173236(Epoch 16 / 8) train acc: 0.606000; val_acc: 0.539000(Iteration 101 / 3920) loss: 1.071621(Iteration 201 / 3920) loss: 1.135191(Iteration 301 / 3920) loss: 1.157115(Iteration 401 / 3920) loss: 1.188208(Epoch 17 / 8) train acc: 0.645000; val_acc: 0.542000(Iteration 501 / 3920) loss: 1.027983(Iteration 601 / 3920) loss: 1.071079(Iteration 701 / 3920) loss: 0.776308(Iteration 801 / 3920) loss: 1.178619(Iteration 901 / 3920) loss: 1.101992(Epoch 18 / 8) train acc: 0.575000; val_acc: 0.516000(Iteration 1001 / 3920) loss: 1.229142(Iteration 1101 / 3920) loss: 1.169607(Iteration 1201 / 3920) loss: 1.074905(Iteration 1301 / 3920) loss: 1.094570(Iteration 1401 / 3920) loss: 1.323550(Epoch 19 / 8) train acc: 0.627000; val_acc: 0.527000(Iteration 1501 / 3920) loss: 1.096040(Iteration 1601 / 3920) loss: 1.134923(Iteration 1701 / 3920) loss: 0.980066(Iteration 1801 / 3920) loss: 1.282441(Iteration 1901 / 3920) loss: 1.158977(Epoch 20 / 8) train acc: 0.654000; val_acc: 0.529000(Iteration 2001 / 3920) loss: 1.106014(Iteration 2101 / 3920) loss: 1.045532(Iteration 2201 / 3920) loss: 1.079075(Iteration 2301 / 3920) loss: 1.175802(Iteration 2401 / 3920) loss: 1.010878(Epoch 21 / 8) train acc: 0.656000; val_acc: 0.524000(Iteration 2501 / 3920) loss: 0.912243(Iteration 2601 / 3920) loss: 1.124658(Iteration 2701 / 3920) loss: 1.019369(Iteration 2801 / 3920) loss: 0.986130(Iteration 2901 / 3920) loss: 0.986593(Epoch 22 / 8) train acc: 0.657000; val_acc: 0.522000(Iteration 3001 / 3920) loss: 1.019763(Iteration 3101 / 3920) loss: 0.848278(Iteration 3201 / 3920) loss: 1.035108(Iteration 3301 / 3920) loss: 1.027310(Iteration 3401 / 3920) loss: 0.889497(Epoch 23 / 8) train acc: 0.664000; val_acc: 0.527000(Iteration 3501 / 3920) loss: 0.952756(Iteration 3601 / 3920) loss: 0.706521(Iteration 3701 / 3920) loss: 1.074842(Iteration 3801 / 3920) loss: 1.284972(Iteration 3901 / 3920) loss: 1.129590(Epoch 24 / 8) train acc: 0.651000; val_acc: 0.546000(Iteration 1 / 3920) loss: 1.119762(Epoch 
24 / 8) train acc: 0.643000; val_acc: 0.540000(Iteration 101 / 3920) loss: 1.043755(Iteration 201 / 3920) loss: 1.003167(Iteration 301 / 3920) loss: 0.999443(Iteration 401 / 3920) loss: 1.155654(Epoch 25 / 8) train acc: 0.654000; val_acc: 0.530000(Iteration 501 / 3920) loss: 0.780462(Iteration 601 / 3920) loss: 1.008519(Iteration 701 / 3920) loss: 0.937963(Iteration 801 / 3920) loss: 1.158664(Iteration 901 / 3920) loss: 1.168604(Epoch 26 / 8) train acc: 0.685000; val_acc: 0.545000(Iteration 1001 / 3920) loss: 1.139831(Iteration 1101 / 3920) loss: 0.884459(Iteration 1201 / 3920) loss: 0.934558(Iteration 1301 / 3920) loss: 0.972650(Iteration 1401 / 3920) loss: 0.946480(Epoch 27 / 8) train acc: 0.672000; val_acc: 0.531000(Iteration 1501 / 3920) loss: 0.806164(Iteration 1601 / 3920) loss: 0.869970(Iteration 1701 / 3920) loss: 0.877202(Iteration 1801 / 3920) loss: 0.859036(Iteration 1901 / 3920) loss: 0.829787(Epoch 28 / 8) train acc: 0.682000; val_acc: 0.538000(Iteration 2001 / 3920) loss: 0.888814(Iteration 2101 / 3920) loss: 0.978453(Iteration 2201 / 3920) loss: 0.936475(Iteration 2301 / 3920) loss: 0.711255(Iteration 2401 / 3920) loss: 0.983541(Epoch 29 / 8) train acc: 0.707000; val_acc: 0.532000(Iteration 2501 / 3920) loss: 1.164406(Iteration 2601 / 3920) loss: 0.810918(Iteration 2701 / 3920) loss: 0.941060(Iteration 2801 / 3920) loss: 0.955967(Iteration 2901 / 3920) loss: 0.882876(Epoch 30 / 8) train acc: 0.691000; val_acc: 0.527000(Iteration 3001 / 3920) loss: 0.831312(Iteration 3101 / 3920) loss: 0.855202(Iteration 3201 / 3920) loss: 0.888598(Iteration 3301 / 3920) loss: 0.757917(Iteration 3401 / 3920) loss: 0.823209(Epoch 31 / 8) train acc: 0.689000; val_acc: 0.527000(Iteration 3501 / 3920) loss: 0.747915(Iteration 3601 / 3920) loss: 0.829295(Iteration 3701 / 3920) loss: 0.822353(Iteration 3801 / 3920) loss: 0.800108(Iteration 3901 / 3920) loss: 0.934793(Epoch 32 / 8) train acc: 0.688000; val_acc: 0.509000(Iteration 1 / 3920) loss: 0.851312(Epoch 32 / 8) train 
acc: 0.714000; val_acc: 0.518000(Iteration 101 / 3920) loss: 0.791078(Iteration 201 / 3920) loss: 0.895301(Iteration 301 / 3920) loss: 0.965742(Iteration 401 / 3920) loss: 0.619245(Epoch 33 / 8) train acc: 0.690000; val_acc: 0.512000(Iteration 501 / 3920) loss: 0.773794(Iteration 601 / 3920) loss: 0.905245(Iteration 701 / 3920) loss: 0.827477(Iteration 801 / 3920) loss: 0.780678(Iteration 901 / 3920) loss: 0.705739(Epoch 34 / 8) train acc: 0.693000; val_acc: 0.539000(Iteration 1001 / 3920) loss: 0.900693(Iteration 1101 / 3920) loss: 1.042272(Iteration 1201 / 3920) loss: 0.930219(Iteration 1301 / 3920) loss: 0.846260(Iteration 1401 / 3920) loss: 0.833534(Epoch 35 / 8) train acc: 0.713000; val_acc: 0.508000(Iteration 1501 / 3920) loss: 0.589478(Iteration 1601 / 3920) loss: 0.883821(Iteration 1701 / 3920) loss: 0.832119(Iteration 1801 / 3920) loss: 0.787544(Iteration 1901 / 3920) loss: 0.815191(Epoch 36 / 8) train acc: 0.763000; val_acc: 0.533000(Iteration 2001 / 3920) loss: 0.937591(Iteration 2101 / 3920) loss: 0.716356(Iteration 2201 / 3920) loss: 0.862611(Iteration 2301 / 3920) loss: 1.027764(Iteration 2401 / 3920) loss: 0.863654(Epoch 37 / 8) train acc: 0.719000; val_acc: 0.520000(Iteration 2501 / 3920) loss: 1.029389(Iteration 2601 / 3920) loss: 0.598151(Iteration 2701 / 3920) loss: 0.691719(Iteration 2801 / 3920) loss: 0.831859(Iteration 2901 / 3920) loss: 0.838723(Epoch 38 / 8) train acc: 0.725000; val_acc: 0.527000(Iteration 3001 / 3920) loss: 0.868424(Iteration 3101 / 3920) loss: 0.838250(Iteration 3201 / 3920) loss: 0.823848(Iteration 3301 / 3920) loss: 0.726566(Iteration 3401 / 3920) loss: 0.705295(Epoch 39 / 8) train acc: 0.735000; val_acc: 0.512000(Iteration 3501 / 3920) loss: 0.743577(Iteration 3601 / 3920) loss: 0.792176(Iteration 3701 / 3920) loss: 0.697288(Iteration 3801 / 3920) loss: 0.675618(Iteration 3901 / 3920) loss: 0.865431(Epoch 40 / 8) train acc: 0.720000; val_acc: 0.517000(Iteration 1 / 3920) loss: 0.821200(Epoch 40 / 8) train acc: 0.739000; 
val_acc: 0.521000(Iteration 101 / 3920) loss: 0.897865(Iteration 201 / 3920) loss: 0.785206(Iteration 301 / 3920) loss: 0.708041(Iteration 401 / 3920) loss: 0.601831(Epoch 41 / 8) train acc: 0.741000; val_acc: 0.522000(Iteration 501 / 3920) loss: 0.682592(Iteration 601 / 3920) loss: 0.872770(Iteration 701 / 3920) loss: 0.804489(Iteration 801 / 3920) loss: 0.791230(Iteration 901 / 3920) loss: 0.701115(Epoch 42 / 8) train acc: 0.741000; val_acc: 0.511000(Iteration 1001 / 3920) loss: 0.668302(Iteration 1101 / 3920) loss: 0.845355(Iteration 1201 / 3920) loss: 0.754407(Iteration 1301 / 3920) loss: 0.681011(Iteration 1401 / 3920) loss: 0.813246(Epoch 43 / 8) train acc: 0.755000; val_acc: 0.525000(Iteration 1501 / 3920) loss: 0.730083(Iteration 1601 / 3920) loss: 0.526022(Iteration 1701 / 3920) loss: 0.905424(Iteration 1801 / 3920) loss: 0.794723(Iteration 1901 / 3920) loss: 0.666263(Epoch 44 / 8) train acc: 0.738000; val_acc: 0.509000(Iteration 2001 / 3920) loss: 0.784094(Iteration 2101 / 3920) loss: 0.953461(Iteration 2201 / 3920) loss: 0.733237(Iteration 2301 / 3920) loss: 0.741551(Iteration 2401 / 3920) loss: 0.809936(Epoch 45 / 8) train acc: 0.746000; val_acc: 0.509000(Iteration 2501 / 3920) loss: 0.706718(Iteration 2601 / 3920) loss: 0.993605(Iteration 2701 / 3920) loss: 0.765338(Iteration 2801 / 3920) loss: 0.792885(Iteration 2901 / 3920) loss: 0.805664(Epoch 46 / 8) train acc: 0.777000; val_acc: 0.532000(Iteration 3001 / 3920) loss: 0.892900(Iteration 3101 / 3920) loss: 0.868157(Iteration 3201 / 3920) loss: 0.666570(Iteration 3301 / 3920) loss: 0.829526(Iteration 3401 / 3920) loss: 0.768001(Epoch 47 / 8) train acc: 0.779000; val_acc: 0.520000(Iteration 3501 / 3920) loss: 0.855577(Iteration 3601 / 3920) loss: 0.750030(Iteration 3701 / 3920) loss: 0.653861(Iteration 3801 / 3920) loss: 0.664095(Iteration 3901 / 3920) loss: 0.828190(Epoch 48 / 8) train acc: 0.735000; val_acc: 0.519000(Iteration 1 / 3920) loss: 0.662947(Epoch 48 / 8) train acc: 0.743000; val_acc: 
0.523000(Iteration 101 / 3920) loss: 0.745197(Iteration 201 / 3920) loss: 0.773770(Iteration 301 / 3920) loss: 0.706227(Iteration 401 / 3920) loss: 0.629369(Epoch 49 / 8) train acc: 0.739000; val_acc: 0.516000(Iteration 501 / 3920) loss: 0.662874(Iteration 601 / 3920) loss: 0.753527(Iteration 701 / 3920) loss: 0.904063(Iteration 801 / 3920) loss: 0.599661(Iteration 901 / 3920) loss: 0.793521(Epoch 50 / 8) train acc: 0.770000; val_acc: 0.517000(Iteration 1001 / 3920) loss: 0.671293(Iteration 1101 / 3920) loss: 0.678262(Iteration 1201 / 3920) loss: 0.736108(Iteration 1301 / 3920) loss: 0.639588(Iteration 1401 / 3920) loss: 0.581877(Epoch 51 / 8) train acc: 0.757000; val_acc: 0.525000(Iteration 1501 / 3920) loss: 0.622416(Iteration 1601 / 3920) loss: 0.775816(Iteration 1701 / 3920) loss: 0.719395(Iteration 1801 / 3920) loss: 0.715135(Iteration 1901 / 3920) loss: 0.744467(Epoch 52 / 8) train acc: 0.768000; val_acc: 0.516000(Iteration 2001 / 3920) loss: 0.648257(Iteration 2101 / 3920) loss: 0.570483(Iteration 2201 / 3920) loss: 0.880970(Iteration 2301 / 3920) loss: 0.770496(Iteration 2401 / 3920) loss: 0.797153(Epoch 53 / 8) train acc: 0.744000; val_acc: 0.521000(Iteration 2501 / 3920) loss: 0.669477(Iteration 2601 / 3920) loss: 0.715992(Iteration 2701 / 3920) loss: 0.793173(Iteration 2801 / 3920) loss: 0.668304(Iteration 2901 / 3920) loss: 0.694873(Epoch 54 / 8) train acc: 0.770000; val_acc: 0.511000(Iteration 3001 / 3920) loss: 0.686666(Iteration 3101 / 3920) loss: 0.663038(Iteration 3201 / 3920) loss: 0.749805(Iteration 3301 / 3920) loss: 0.757684(Iteration 3401 / 3920) loss: 0.675762(Epoch 55 / 8) train acc: 0.771000; val_acc: 0.514000(Iteration 3501 / 3920) loss: 0.614942(Iteration 3601 / 3920) loss: 0.670961(Iteration 3701 / 3920) loss: 0.649142(Iteration 3801 / 3920) loss: 0.605455(Iteration 3901 / 3920) loss: 0.730182(Epoch 56 / 8) train acc: 0.789000; val_acc: 0.524000(Iteration 1 / 3920) loss: 0.614868(Epoch 56 / 8) train acc: 0.783000; val_acc: 
0.523000(Iteration 101 / 3920) loss: 0.808440(Iteration 201 / 3920) loss: 0.698936(Iteration 301 / 3920) loss: 0.702807(Iteration 401 / 3920) loss: 0.654820(Epoch 57 / 8) train acc: 0.771000; val_acc: 0.511000(Iteration 501 / 3920) loss: 0.573736(Iteration 601 / 3920) loss: 0.788944(Iteration 701 / 3920) loss: 0.676950(Iteration 801 / 3920) loss: 0.647669(Iteration 901 / 3920) loss: 0.651173(Epoch 58 / 8) train acc: 0.768000; val_acc: 0.527000(Iteration 1001 / 3920) loss: 0.876323(Iteration 1101 / 3920) loss: 0.639902(Iteration 1201 / 3920) loss: 0.645207(Iteration 1301 / 3920) loss: 0.571653(Iteration 1401 / 3920) loss: 0.793754(Epoch 59 / 8) train acc: 0.767000; val_acc: 0.505000(Iteration 1501 / 3920) loss: 0.670442(Iteration 1601 / 3920) loss: 0.600570(Iteration 1701 / 3920) loss: 0.826705(Iteration 1801 / 3920) loss: 0.696892(Iteration 1901 / 3920) loss: 0.713566(Epoch 60 / 8) train acc: 0.782000; val_acc: 0.508000(Iteration 2001 / 3920) loss: 0.818164(Iteration 2101 / 3920) loss: 0.814385(Iteration 2201 / 3920) loss: 0.790078(Iteration 2301 / 3920) loss: 0.807461(Iteration 2401 / 3920) loss: 0.791152(Epoch 61 / 8) train acc: 0.808000; val_acc: 0.514000(Iteration 2501 / 3920) loss: 0.658992(Iteration 2601 / 3920) loss: 0.732733(Iteration 2701 / 3920) loss: 0.703825(Iteration 2801 / 3920) loss: 0.736217(Iteration 2901 / 3920) loss: 0.749870(Epoch 62 / 8) train acc: 0.789000; val_acc: 0.513000(Iteration 3001 / 3920) loss: 0.598580(Iteration 3101 / 3920) loss: 0.586201(Iteration 3201 / 3920) loss: 0.649068(Iteration 3301 / 3920) loss: 0.662564(Iteration 3401 / 3920) loss: 0.684387(Epoch 63 / 8) train acc: 0.787000; val_acc: 0.518000(Iteration 3501 / 3920) loss: 0.576055(Iteration 3601 / 3920) loss: 0.656433(Iteration 3701 / 3920) loss: 0.774953(Iteration 3801 / 3920) loss: 0.597454(Iteration 3901 / 3920) loss: 0.559453(Epoch 64 / 8) train acc: 0.769000; val_acc: 0.513000

# Run this cell to visualize training loss and train / val accuracy

plt.subplot(2, 1, 1)
plt.title('Training loss')
plt.plot(solver.loss_history, 'o')
plt.xlabel('Iteration')

plt.subplot(2, 1, 2)
plt.title('Accuracy')
plt.plot(solver.train_acc_history, '-o', label='train')
plt.plot(solver.val_acc_history, '-o', label='val')
plt.plot([0.5] * len(solver.val_acc_history), 'k--')
plt.xlabel('Epoch')
plt.legend(loc='lower right')
plt.gcf().set_size_inches(15, 12)
plt.show()

Multilayer network

Next you will implement a fully-connected network with an arbitrary number of hidden layers.

Read through the FullyConnectedNet class in the file cs231n/classifiers/fc_net.py.

Implement the initialization, the forward pass, and the backward pass. For the moment don’t worry about implementing dropout or batch/layer normalization; we will add those features soon.

Initial loss and gradient check

As a sanity check, run the following to check the initial loss and to gradient check the network both with and without regularization. Do the initial losses seem reasonable?

For gradient checking, you should expect to see errors around 1e-7 or less.

np.random.seed(231)
N, D, H1, H2, C = 2, 15, 20, 30, 10
X = np.random.randn(N, D)
y = np.random.randint(C, size=(N,))

for reg in [0, 3.14]:
    print('Running check with reg = ', reg)
    model = FullyConnectedNet([H1, H2], input_dim=D, num_classes=C,
                              reg=reg, weight_scale=5e-2, dtype=np.float64)

    loss, grads = model.loss(X, y)
    print('Initial loss: ', loss)

    # Most of the errors should be on the order of e-7 or smaller.
    # NOTE: It is fine however to see an error for W2 on the order of e-5
    # for the check when reg = 0.0
    for name in sorted(grads):
        f = lambda _: model.loss(X, y)[0]
        grad_num = eval_numerical_gradient(f, model.params[name], verbose=False, h=1e-5)
        print('%s relative error: %.2e' % (name, rel_error(grad_num, grads[name])))

Running check with reg = 0
Initial loss: 2.3004790897684924
W1 relative error: 1.48e-07
W2 relative error: 2.21e-05
W3 relative error: 3.53e-07
b1 relative error: 5.38e-09
b2 relative error: 2.09e-09
b3 relative error: 5.80e-11
Running check with reg = 3.14
Initial loss: 7.052114776533016
W1 relative error: 3.90e-09
W2 relative error: 6.87e-08
W3 relative error: 2.13e-08
b1 relative error: 1.48e-08
b2 relative error: 1.72e-09
b3 relative error: 1.57e-10
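Whether the initial losses are reasonable can be estimated by hand. With 10 classes and small random weights, the softmax data loss should be near ln(10) ≈ 2.30. With regularization, each weight drawn with standard deviation 5e-2 contributes about (5e-2)^2 to the sum of squares. The sketch below does this arithmetic for the layer sizes used in the check; it assumes the L2 penalty is 0.5 * reg * sum(W^2) over all weight matrices, which is an assumption about how the network defines its regularization term.

```python
import numpy as np

layer_dims = [15, 20, 30, 10]  # D, H1, H2, C from the check above
weight_scale = 5e-2
reg = 3.14

# Number of weights (biases are assumed not to be regularized)
num_weights = sum(d_in * d_out
                  for d_in, d_out in zip(layer_dims[:-1], layer_dims[1:]))

expected_data_loss = np.log(layer_dims[-1])
# Assumed penalty: 0.5 * reg * sum(W**2), with E[W**2] = weight_scale**2
expected_reg_loss = 0.5 * reg * num_weights * weight_scale ** 2
expected_total = expected_data_loss + expected_reg_loss

print('weights:', num_weights)
print('expected data loss:', expected_data_loss)
print('expected total loss with reg:', expected_total)
```

Under these assumptions the estimate is about 2.30 without regularization and about 7.0 with reg = 3.14, which is in line with the reported values of 2.30 and 7.05.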

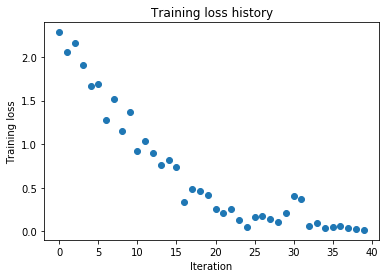

As another sanity check, make sure you can overfit a small dataset of 50 images. First we will try a three-layer network with 100 units in each hidden layer. In the following cell, tweak the learning rate and weight initialization scale to overfit and achieve 100% training accuracy within 20 epochs.

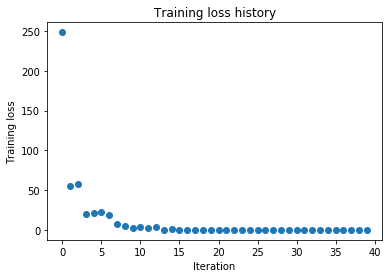

%%time
# TODO: Use a three-layer Net to overfit 50 training examples by
# tweaking just the learning rate and initialization scale.
num_train = 50
small_data = {
    'X_train': data['X_train'][:num_train],
    'y_train': data['y_train'][:num_train],
    'X_val': data['X_val'],
    'y_val': data['y_val'],
}
weight_scale = 1e-2 # Experiment with this!
learning_rate = 1e-2 # Experiment with this!
model = FullyConnectedNet([100, 100],
                          weight_scale=weight_scale, dtype=np.float64)
solver = Solver(model, small_data,
                print_every=10, num_epochs=20, batch_size=25,
                update_rule='sgd',
                optim_config={
                    'learning_rate': learning_rate,
                })
solver.train()
'''
### ways to find best lr & ws
lrs = np.logspace(-6,-1,6)
wss = np.logspace(-6,-1,6)
methods = []
for ws in wss:
    solver.model.weight_scale = ws
    for lr in lrs:
        solver.optim_config['learning_rate'] = lr
        solver.train()
        methods.append({
            'lr':lr,
            'ws':ws,
            'acc':solver.best_val_acc,
        })
print('learning_rate\tweight_scale\taccuracy')
for item in sorted(methods,key=lambda x:x['acc'],reverse=True):
    print("{0:4e},\t{1:4e},\t{2:4f}".format(item['lr'],item['ws'],item['acc']))
'''
plt.plot(solver.loss_history, 'o')
plt.title('Training loss history')
plt.xlabel('Iteration')
plt.ylabel('Training loss')
plt.show()

(Iteration 1 / 40) loss: 2.283204
(Epoch 0 / 20) train acc: 0.380000; val_acc: 0.140000
(Epoch 1 / 20) train acc: 0.280000; val_acc: 0.134000
(Epoch 2 / 20) train acc: 0.500000; val_acc: 0.163000
(Epoch 3 / 20) train acc: 0.660000; val_acc: 0.166000
(Epoch 4 / 20) train acc: 0.700000; val_acc: 0.168000
(Epoch 5 / 20) train acc: 0.820000; val_acc: 0.182000
(Iteration 11 / 40) loss: 0.922495
(Epoch 6 / 20) train acc: 0.620000; val_acc: 0.141000
(Epoch 7 / 20) train acc: 0.780000; val_acc: 0.181000
(Epoch 8 / 20) train acc: 0.800000; val_acc: 0.158000
(Epoch 9 / 20) train acc: 0.880000; val_acc: 0.173000
(Epoch 10 / 20) train acc: 0.940000; val_acc: 0.178000
(Iteration 21 / 40) loss: 0.259975
(Epoch 11 / 20) train acc: 0.960000; val_acc: 0.188000
(Epoch 12 / 20) train acc: 0.960000; val_acc: 0.178000
(Epoch 13 / 20) train acc: 0.980000; val_acc: 0.181000
(Epoch 14 / 20) train acc: 0.980000; val_acc: 0.179000
(Epoch 15 / 20) train acc: 0.940000; val_acc: 0.193000
(Iteration 31 / 40) loss: 0.402781
(Epoch 16 / 20) train acc: 0.980000; val_acc: 0.206000
(Epoch 17 / 20) train acc: 1.000000; val_acc: 0.198000
(Epoch 18 / 20) train acc: 1.000000; val_acc: 0.198000
(Epoch 19 / 20) train acc: 1.000000; val_acc: 0.193000
(Epoch 20 / 20) train acc: 1.000000; val_acc: 0.191000

Wall time: 1.65 s

Now try to use a five-layer network with 100 units in each hidden layer to overfit 50 training examples. Again, you will have to adjust the learning rate and weight initialization scale, but you should be able to achieve 100% training accuracy within 20 epochs.

%%time
# TODO: Use a five-layer Net to overfit 50 training examples by
# tweaking just the learning rate and initialization scale.
num_train = 50
small_data = {
    'X_train': data['X_train'][:num_train],
    'y_train': data['y_train'][:num_train],
    'X_val': data['X_val'],
    'y_val': data['y_val'],
}
learning_rate = 1e-3
weight_scale = 1e-1
model = FullyConnectedNet([100, 100, 100, 100],
                          weight_scale=weight_scale, dtype=np.float64)
solver = Solver(model, small_data,
                print_every=10, num_epochs=20, batch_size=25,
                update_rule='sgd',
                optim_config={
                    'learning_rate': learning_rate,
                })
solver.train()
'''ways to find best (lr,ws) (1e-3,1e-1)
plt.plot(solver.loss_history, 'o')
plt.title('Training loss history')
plt.xlabel('Iteration')
plt.ylabel('Training loss')
plt.show()
lrs = np.logspace(-3,-1,3)
wss = np.logspace(-3,-1,3)
# lrs = [1e-3,1e-2]
# wss = [1e-3,1e-2]
methods = []
for ws in wss:
    model = FullyConnectedNet([100, 100, 100, 100],
                              weight_scale=ws, dtype=np.float64)
    for lr in lrs:
        solver = Solver(model, small_data,
                        print_every=50, num_epochs=20, batch_size=25,
                        update_rule='sgd',
                        optim_config={
                            'learning_rate': lr,
                        })
        solver.train()
        methods.append({
            'lr':lr,
            'ws':ws,
            'acc':solver.be,
        })
        print('-----------end of one training----------')
print('learning_rate\tweight_scale\taccuracy')
for item in sorted(methods,key=lambda x:x['acc'],reverse=True):
    print("{0:4e},\t{1:4e},\t{2:4f}".format(item['lr'],item['ws'],item['acc']))
'''
plt.plot(solver.loss_history, 'o')
plt.title('Training loss history')
plt.xlabel('Iteration')
plt.ylabel('Training loss')
plt.show()

(Iteration 1 / 40) loss: 248.434438
(Epoch 0 / 20) train acc: 0.260000; val_acc: 0.112000
(Epoch 1 / 20) train acc: 0.160000; val_acc: 0.107000
(Epoch 2 / 20) train acc: 0.540000; val_acc: 0.107000
(Epoch 3 / 20) train acc: 0.420000; val_acc: 0.099000
(Epoch 4 / 20) train acc: 0.740000; val_acc: 0.153000
(Epoch 5 / 20) train acc: 0.820000; val_acc: 0.143000
(Iteration 11 / 40) loss: 3.960893
(Epoch 6 / 20) train acc: 0.860000; val_acc: 0.131000
(Epoch 7 / 20) train acc: 0.880000; val_acc: 0.147000
(Epoch 8 / 20) train acc: 0.980000; val_acc: 0.141000
(Epoch 9 / 20) train acc: 0.940000; val_acc: 0.139000
(Epoch 10 / 20) train acc: 1.000000; val_acc: 0.149000
(Iteration 21 / 40) loss: 0.001239
(Epoch 11 / 20) train acc: 1.000000; val_acc: 0.149000
(Epoch 12 / 20) train acc: 1.000000; val_acc: 0.149000
(Epoch 13 / 20) train acc: 1.000000; val_acc: 0.149000
(Epoch 14 / 20) train acc: 1.000000; val_acc: 0.149000
(Epoch 15 / 20) train acc: 1.000000; val_acc: 0.149000
(Iteration 31 / 40) loss: 0.000339
(Epoch 16 / 20) train acc: 1.000000; val_acc: 0.149000
(Epoch 17 / 20) train acc: 1.000000; val_acc: 0.148000
(Epoch 18 / 20) train acc: 1.000000; val_acc: 0.148000
(Epoch 19 / 20) train acc: 1.000000; val_acc: 0.148000
(Epoch 20 / 20) train acc: 1.000000; val_acc: 0.148000

Wall time: 1.56 s

Inline Question 2:

Did you notice anything about the comparative difficulty of training the three-layer net vs. training the five-layer net? In particular, based on your experience, which network seemed more sensitive to the initialization scale? Why do you think that is the case?

Answer:

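The deeper the network, the more sensitive it is to the initialization scale. Each layer multiplies its input by a weight matrix, so activations and gradients are rescaled roughly once per layer by a factor that depends on the initialization scale. A scale that is slightly too small or too large therefore compounds with depth, and the signal tends to vanish or explode. In the runs above, the three-layer net trained with weight_scale = 1e-2 and started from a loss of about 2.28, while the five-layer net was trained with weight_scale = 1e-1 and started from a loss of about 248.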
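A quick experiment can make the effect of depth on initialization sensitivity concrete. The sketch below pushes random inputs through stacks of ReLU layers and reports the standard deviation of the last hidden activations. It makes several assumptions for illustration: the helper `last_hidden_std` is hypothetical, the inputs are standard Gaussian noise rather than CIFAR-10 images, and the input dimension of 3072 corresponds to flattened 32x32x3 images.

```python
import numpy as np

def last_hidden_std(num_hidden_layers, weight_scale, width=100, input_dim=3072, seed=0):
    """Hypothetical helper: std of the last hidden activations of a random ReLU net."""
    rng = np.random.default_rng(seed)
    h = rng.standard_normal((50, input_dim))  # stand-in for a batch of 50 images
    dims = [input_dim] + [width] * num_hidden_layers
    for d_in, d_out in zip(dims[:-1], dims[1:]):
        W = weight_scale * rng.standard_normal((d_in, d_out))
        h = np.maximum(0, h.dot(W))  # affine (zero bias) followed by ReLU
    return h.std()

# Two hidden layers matches the three-layer net, four matches the five-layer net.
for ws in [1e-2, 1e-1]:
    for depth in [2, 4]:
        print('weight_scale=%g, hidden layers=%d, activation std=%.3e'
              % (ws, depth, last_hidden_std(depth, ws)))
```

With the smaller scale the activations shrink sharply as layers are added, while the larger scale keeps them much bigger, so the range of workable scales narrows as the network gets deeper.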

Update rules

So far we have used vanilla stochastic gradient descent (SGD) as our update rule. More sophisticated update rules can make it easier to train deep networks. We will implement a few of the most commonly used update rules and compare them to vanilla SGD.

SGD+Momentum

Stochastic gradient descent with momentum is a widely used update rule that tends to make deep networks converge faster than vanilla stochastic gradient descent. See the Momentum Update section at http://cs231n.github.io/neural-networks-3/#sgd for more information.

Open the file cs231n/optim.py and read the documentation at the top of the file to make sure you understand the API. Implement the SGD+momentum update rule in the function sgd_momentum and run the following to check your implementation. You should see errors less than e-8.

from cs231n.optim import sgd_momentum
N, D = 4, 5
w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)
dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)
v = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)
config = {'learning_rate': 1e-3, 'velocity': v}
next_w, _ = sgd_momentum(w, dw, config=config)
expected_next_w = np.asarray([
    [ 0.1406, 0.20738947, 0.27417895, 0.34096842, 0.40775789],
    [ 0.47454737, 0.54133684, 0.60812632, 0.67491579, 0.74170526],
    [ 0.80849474, 0.87528421, 0.94207368, 1.00886316, 1.07565263],
    [ 1.14244211, 1.20923158, 1.27602105, 1.34281053, 1.4096 ]])
expected_velocity = np.asarray([
    [ 0.5406, 0.55475789, 0.56891579, 0.58307368, 0.59723158],
    [ 0.61138947, 0.62554737, 0.63970526, 0.65386316, 0.66802105],
    [ 0.68217895, 0.69633684, 0.71049474, 0.72465263, 0.73881053],
    [ 0.75296842, 0.76712632, 0.78128421, 0.79544211, 0.8096 ]])
# Should see relative errors around e-8 or less
print('next_w error: ', rel_error(next_w, expected_next_w))
print('velocity error: ', rel_error(expected_velocity, config['velocity']))

next_w error: 8.882347033505819e-09
velocity error: 4.269287743278663e-09
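For reference, the momentum update being checked can be sketched as follows. This is a minimal version under assumptions, not the contents of cs231n/optim.py: the function name `sgd_momentum_sketch` is hypothetical, and the default momentum of 0.9 is assumed because it is the value consistent with the expected arrays in the check above.

```python
import numpy as np

def sgd_momentum_sketch(w, dw, config=None):
    """Hypothetical SGD+momentum step: v = mu * v - lr * dw, then w = w + v."""
    config = {} if config is None else config
    config.setdefault('learning_rate', 1e-2)
    config.setdefault('momentum', 0.9)  # assumed default
    v = config.get('velocity', np.zeros_like(w))

    v = config['momentum'] * v - config['learning_rate'] * dw  # update velocity
    next_w = w + v                                             # move along velocity
    config['velocity'] = v
    return next_w, config

# Same inputs as the check cell.
N, D = 4, 5
w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)
dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)
v = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)
next_w, config = sgd_momentum_sketch(w, dw, {'learning_rate': 1e-3, 'velocity': v})
print(next_w[0, 0], config['velocity'][0, 0])
```

The velocity acts as a decaying sum of past gradients, which is why consistent gradient directions build up speed while oscillating ones partly cancel.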

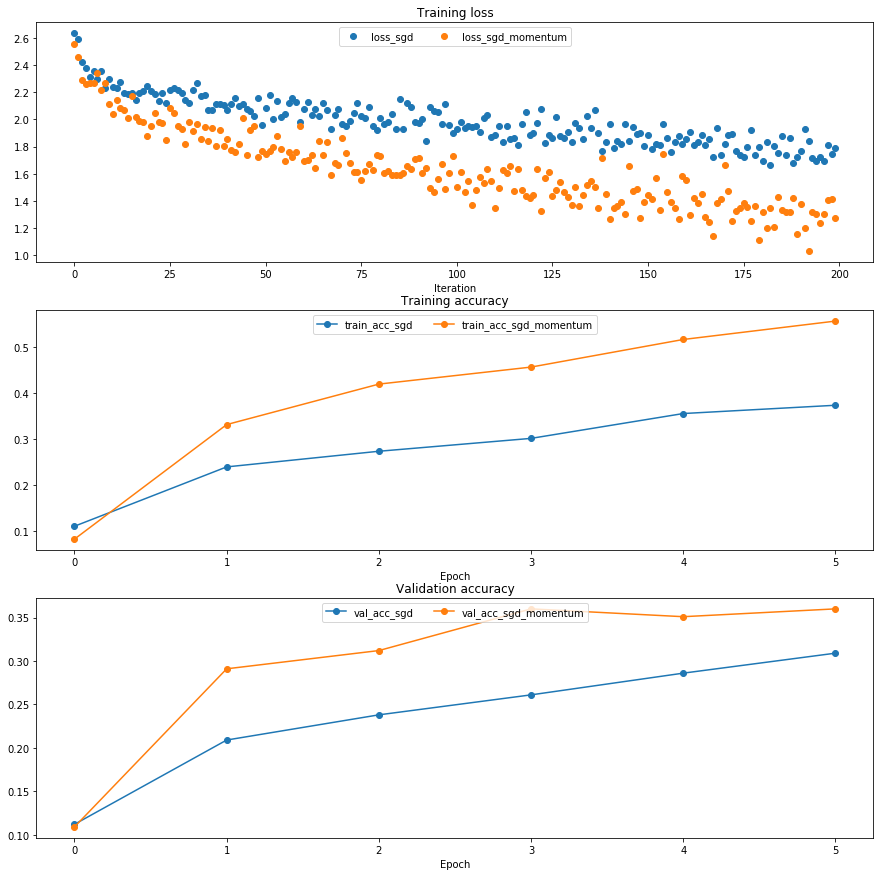

Once you have done so, run the following to train a six-layer network with both SGD and SGD+momentum. You should see the SGD+momentum update rule converge faster.

num_train = 4000
small_data = {
    'X_train': data['X_train'][:num_train],
    'y_train': data['y_train'][:num_train],
    'X_val': data['X_val'],
    'y_val': data['y_val'],
}
solvers = {}
for update_rule in ['sgd', 'sgd_momentum']:
    print('running with ', update_rule)
    model = FullyConnectedNet([100, 100, 100, 100, 100], weight_scale=5e-2)
    solver = Solver(model, small_data,
                    num_epochs=5, batch_size=100,
                    update_rule=update_rule,
                    optim_config={
                        'learning_rate': 5e-3,
                    },
                    verbose=True)
    solvers[update_rule] = solver
    solver.train()
    print()
plt.subplot(3, 1, 1)
plt.title('Training loss')
plt.xlabel('Iteration')
plt.subplot(3, 1, 2)
plt.title('Training accuracy')
plt.xlabel('Epoch')
plt.subplot(3, 1, 3)
plt.title('Validation accuracy')
plt.xlabel('Epoch')
for update_rule, solver in solvers.items():
    plt.subplot(3, 1, 1)
    plt.plot(solver.loss_history, 'o', label="loss_%s" % update_rule)
    plt.subplot(3, 1, 2)
    plt.plot(solver.train_acc_history, '-o', label="train_acc_%s" % update_rule)
    plt.subplot(3, 1, 3)
    plt.plot(solver.val_acc_history, '-o', label="val_acc_%s" % update_rule)
for i in [1, 2, 3]:
    plt.subplot(3, 1, i)
    plt.legend(loc='upper center', ncol=4)
plt.gcf().set_size_inches(15, 15)
plt.show()

running with sgd
(Iteration 1 / 200) loss: 2.633875
(Epoch 0 / 5) train acc: 0.111000; val_acc: 0.112000
(Iteration 11 / 200) loss: 2.235894
(Iteration 21 / 200) loss: 2.206218
(Iteration 31 / 200) loss: 2.122974
(Epoch 1 / 5) train acc: 0.240000; val_acc: 0.209000
(Iteration 41 / 200) loss: 2.071314
(Iteration 51 / 200) loss: 2.086420
(Iteration 61 / 200) loss: 2.074775
(Iteration 71 / 200) loss: 1.968858
(Epoch 2 / 5) train acc: 0.274000; val_acc: 0.238000
(Iteration 81 / 200) loss: 2.013417
(Iteration 91 / 200) loss: 1.974977
(Iteration 101 / 200) loss: 1.929298
(Iteration 111 / 200) loss: 1.888262
(Epoch 3 / 5) train acc: 0.302000; val_acc: 0.261000
(Iteration 121 / 200) loss: 1.903221
(Iteration 131 / 200) loss: 1.833502
(Iteration 141 / 200) loss: 1.966820
(Iteration 151 / 200) loss: 1.882028
(Epoch 4 / 5) train acc: 0.356000; val_acc: 0.286000
(Iteration 161 / 200) loss: 1.854492
(Iteration 171 / 200) loss: 1.815712
(Iteration 181 / 200) loss: 1.691079
(Iteration 191 / 200) loss: 1.764298
(Epoch 5 / 5) train acc: 0.374000; val_acc: 0.309000
running with sgd_momentum
(Iteration 1 / 200) loss: 2.556549
(Epoch 0 / 5) train acc: 0.083000; val_acc: 0.109000
(Iteration 11 / 200) loss: 2.041460
(Iteration 21 / 200) loss: 1.948460
(Iteration 31 / 200) loss: 1.980526
(Epoch 1 / 5) train acc: 0.332000; val_acc: 0.291000
(Iteration 41 / 200) loss: 1.855131
(Iteration 51 / 200) loss: 1.744686
(Iteration 61 / 200) loss: 1.691368
(Iteration 71 / 200) loss: 1.864358
(Epoch 2 / 5) train acc: 0.420000; val_acc: 0.312000
(Iteration 81 / 200) loss: 1.732355
(Iteration 91 / 200) loss: 1.713076
(Iteration 101 / 200) loss: 1.500155
(Iteration 111 / 200) loss: 1.349369
(Epoch 3 / 5) train acc: 0.457000; val_acc: 0.360000
(Iteration 121 / 200) loss: 1.446921
(Iteration 131 / 200) loss: 1.367205
(Iteration 141 / 200) loss: 1.270960
(Iteration 151 / 200) loss: 1.446620
(Epoch 4 / 5) train acc: 0.517000; val_acc: 0.351000
(Iteration 161 / 200) loss: 1.556725
(Iteration 171 / 200) loss: 1.667454
(Iteration 181 / 200) loss: 1.317438
(Iteration 191 / 200) loss: 1.380900
(Epoch 5 / 5) train acc: 0.557000; val_acc: 0.360000

RMSProp and Adam

RMSProp [1] and Adam [2] are update rules that set per-parameter learning rates by using a running average of the second moments of gradients.

In the file cs231n/optim.py, implement the RMSProp update rule in the rmsprop function and implement the Adam update rule in the adam function, and check your implementations using the tests below.

NOTE: Please implement the complete Adam update rule (with the bias correction mechanism), not the first simplified version mentioned in the course notes.

[1] Tijmen Tieleman and Geoffrey Hinton. “Lecture 6.5-rmsprop: Divide the gradient by a running average of its recent magnitude.” COURSERA: Neural Networks for Machine Learning 4 (2012).

[2] Diederik Kingma and Jimmy Ba, “Adam: A Method for Stochastic Optimization”, ICLR 2015.

# Test RMSProp implementation
from cs231n.optim import rmsprop
N, D = 4, 5
w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)
dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)
cache = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)
config = {'learning_rate': 1e-2, 'cache': cache}
next_w, _ = rmsprop(w, dw, config=config)
expected_next_w = np.asarray([
    [-0.39223849, -0.34037513, -0.28849239, -0.23659121, -0.18467247],
    [-0.132737, -0.08078555, -0.02881884, 0.02316247, 0.07515774],
    [ 0.12716641, 0.17918792, 0.23122175, 0.28326742, 0.33532447],
    [ 0.38739248, 0.43947102, 0.49155973, 0.54365823, 0.59576619]])
expected_cache = np.asarray([
    [ 0.5976, 0.6126277, 0.6277108, 0.64284931, 0.65804321],
    [ 0.67329252, 0.68859723, 0.70395734, 0.71937285, 0.73484377],
    [ 0.75037008, 0.7659518, 0.78158892, 0.79728144, 0.81302936],
    [ 0.82883269, 0.84469141, 0.86060554, 0.87657507, 0.8926 ]])
# You should see relative errors around e-7 or less
print('next_w error: ', rel_error(expected_next_w, next_w))
print('cache error: ', rel_error(expected_cache, config['cache']))

next_w error: 9.524687511038133e-08
cache error: 2.6477955807156126e-09
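The RMSProp rule tested above can be sketched in a few lines. This is an illustration under assumptions rather than the contents of cs231n/optim.py: the function name `rmsprop_sketch` is hypothetical, and the defaults decay_rate = 0.99 and epsilon = 1e-8 are assumed because they are consistent with the expected arrays in the test.

```python
import numpy as np

def rmsprop_sketch(w, dw, config=None):
    """Hypothetical RMSProp step using a running average of squared gradients."""
    config = {} if config is None else config
    config.setdefault('learning_rate', 1e-2)
    config.setdefault('decay_rate', 0.99)  # assumed default
    config.setdefault('epsilon', 1e-8)     # assumed default
    cache = config.get('cache', np.zeros_like(w))

    # Leaky average of the squared gradient (second moment estimate).
    cache = config['decay_rate'] * cache + (1 - config['decay_rate']) * dw ** 2
    # Per-parameter step: large recent gradients shrink the effective learning rate.
    next_w = w - config['learning_rate'] * dw / (np.sqrt(cache) + config['epsilon'])
    config['cache'] = cache
    return next_w, config

# Same inputs as the test cell.
N, D = 4, 5
w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)
dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)
cache = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)
next_w, config = rmsprop_sketch(w, dw, {'learning_rate': 1e-2, 'cache': cache})
print(next_w[0, 0], config['cache'][0, 0])
```

Because the cache is a decaying average rather than a running sum, old gradients are gradually forgotten and the effective step size can recover.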

# Test Adam implementation
from cs231n.optim import adam
N, D = 4, 5
w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)
dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)
m = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)
v = np.linspace(0.7, 0.5, num=N*D).reshape(N, D)
config = {'learning_rate': 1e-2, 'm': m, 'v': v, 't': 5}
next_w, _ = adam(w, dw, config=config)
expected_next_w = np.asarray([
    [-0.40094747, -0.34836187, -0.29577703, -0.24319299, -0.19060977],
    [-0.1380274, -0.08544591, -0.03286534, 0.01971428, 0.0722929],
    [ 0.1248705, 0.17744702, 0.23002243, 0.28259667, 0.33516969],
    [ 0.38774145, 0.44031188, 0.49288093, 0.54544852, 0.59801459]])
expected_v = np.asarray([
    [ 0.69966, 0.68908382, 0.67851319, 0.66794809, 0.65738853,],
    [ 0.64683452, 0.63628604, 0.6257431, 0.61520571, 0.60467385,],
    [ 0.59414753, 0.58362676, 0.57311152, 0.56260183, 0.55209767,],
    [ 0.54159906, 0.53110598, 0.52061845, 0.51013645, 0.49966, ]])
expected_m = np.asarray([
    [ 0.48, 0.49947368, 0.51894737, 0.53842105, 0.55789474],
    [ 0.57736842, 0.59684211, 0.61631579, 0.63578947, 0.65526316],
    [ 0.67473684, 0.69421053, 0.71368421, 0.73315789, 0.75263158],
    [ 0.77210526, 0.79157895, 0.81105263, 0.83052632, 0.85 ]])
# You should see relative errors around e-7 or less
print('next_w error: ', rel_error(expected_next_w, next_w))
print('v error: ', rel_error(expected_v, config['v']))
print('m error: ', rel_error(expected_m, config['m']))

next_w error: 0.20720703668629928
v error: 4.208314038113071e-09
m error: 4.214963193114416e-09
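In the output above, the m and v errors are at the e-9 level while the next_w error is about 0.21, far from the e-7 target. That pattern suggests the moving averages are right but the bias correction or the final update step differs from what the test expects. The sketch below shows one version of the full Adam rule with bias correction. It rests on assumptions: the function name `adam_sketch` is hypothetical, the defaults beta1 = 0.9, beta2 = 0.999 and epsilon = 1e-8 are assumed, and t is incremented before it is used. Under those assumptions it appears to reproduce the corner entries of expected_next_w from the test.

```python
import numpy as np

def adam_sketch(w, dw, config=None):
    """Hypothetical Adam step with bias correction; t is incremented before use."""
    config = {} if config is None else config
    config.setdefault('learning_rate', 1e-3)
    config.setdefault('beta1', 0.9)      # assumed default
    config.setdefault('beta2', 0.999)    # assumed default
    config.setdefault('epsilon', 1e-8)   # assumed default
    config.setdefault('m', np.zeros_like(w))
    config.setdefault('v', np.zeros_like(w))
    config.setdefault('t', 0)

    config['t'] += 1
    t, b1, b2 = config['t'], config['beta1'], config['beta2']
    config['m'] = b1 * config['m'] + (1 - b1) * dw        # first moment
    config['v'] = b2 * config['v'] + (1 - b2) * dw ** 2   # second moment
    m_hat = config['m'] / (1 - b1 ** t)                   # bias-corrected first moment
    v_hat = config['v'] / (1 - b2 ** t)                   # bias-corrected second moment
    next_w = w - config['learning_rate'] * m_hat / (np.sqrt(v_hat) + config['epsilon'])
    return next_w, config

# Same inputs as the test cell.
N, D = 4, 5
w = np.linspace(-0.4, 0.6, num=N*D).reshape(N, D)
dw = np.linspace(-0.6, 0.4, num=N*D).reshape(N, D)
m = np.linspace(0.6, 0.9, num=N*D).reshape(N, D)
v = np.linspace(0.7, 0.5, num=N*D).reshape(N, D)
next_w, config = adam_sketch(w, dw, {'learning_rate': 1e-2, 'm': m, 'v': v, 't': 5})
print(next_w[0, 0], next_w[-1, -1])
```

The stored m and v are the uncorrected averages; only the temporary m_hat and v_hat used in the step are divided by the correction factors.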

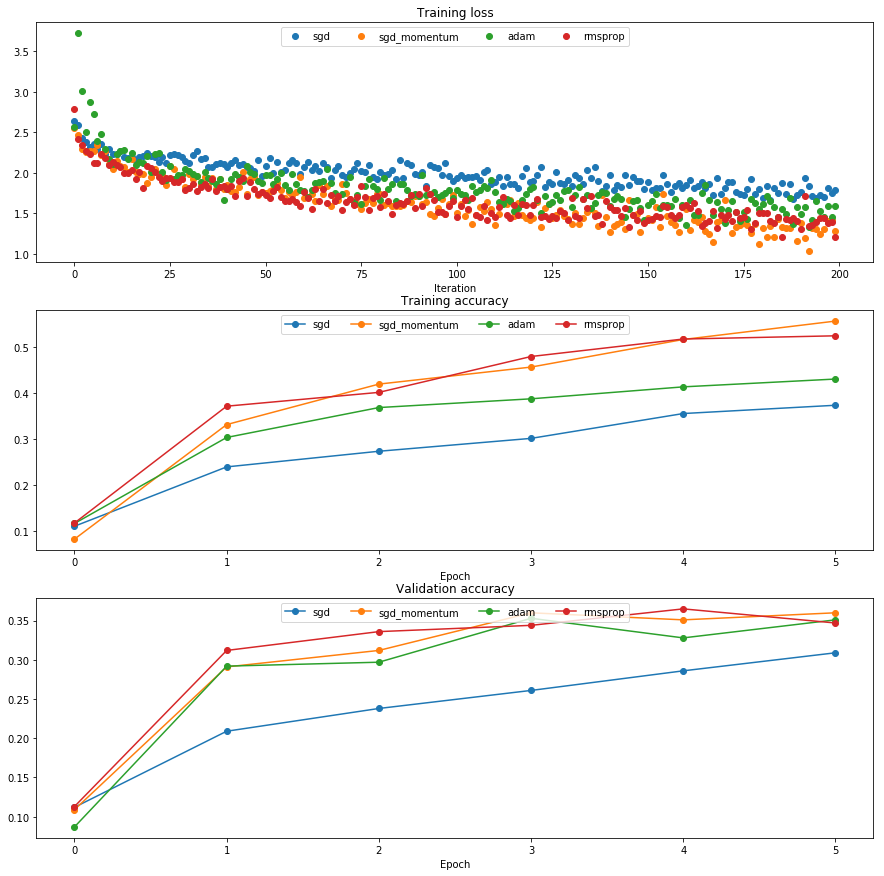

Once you have debugged your RMSProp and Adam implementations, run the following to train a pair of deep networks using these new update rules:

learning_rates = {'rmsprop': 1e-4, 'adam': 1e-3}
for update_rule in ['adam', 'rmsprop']:
    print('running with ', update_rule)
    model = FullyConnectedNet([100, 100, 100, 100, 100], weight_scale=5e-2)
    solver = Solver(model, small_data,
                    num_epochs=5, batch_size=100,
                    update_rule=update_rule,
                    optim_config={
                        'learning_rate': learning_rates[update_rule]
                    },
                    verbose=True)
    solvers[update_rule] = solver
    solver.train()
    print()
plt.subplot(3, 1, 1)
plt.title('Training loss')
plt.xlabel('Iteration')
plt.subplot(3, 1, 2)
plt.title('Training accuracy')
plt.xlabel('Epoch')
plt.subplot(3, 1, 3)
plt.title('Validation accuracy')
plt.xlabel('Epoch')
for update_rule, solver in list(solvers.items()):
    plt.subplot(3, 1, 1)
    plt.plot(solver.loss_history, 'o', label=update_rule)
    plt.subplot(3, 1, 2)
    plt.plot(solver.train_acc_history, '-o', label=update_rule)
    plt.subplot(3, 1, 3)
    plt.plot(solver.val_acc_history, '-o', label=update_rule)
for i in [1, 2, 3]:
    plt.subplot(3, 1, i)
    plt.legend(loc='upper center', ncol=4)
plt.gcf().set_size_inches(15, 15)
plt.show()

running with adam
(Iteration 1 / 200) loss: 2.565073
(Epoch 0 / 5) train acc: 0.117000; val_acc: 0.087000
(Iteration 11 / 200) loss: 2.103384
(Iteration 21 / 200) loss: 2.008961
(Iteration 31 / 200) loss: 2.016690
(Epoch 1 / 5) train acc: 0.304000; val_acc: 0.292000
(Iteration 41 / 200) loss: 1.795322
(Iteration 51 / 200) loss: 1.976308
(Iteration 61 / 200) loss: 1.770154
(Iteration 71 / 200) loss: 1.742921
(Epoch 2 / 5) train acc: 0.369000; val_acc: 0.297000
(Iteration 81 / 200) loss: 1.668088
(Iteration 91 / 200) loss: 1.718752
(Iteration 101 / 200) loss: 1.785251
(Iteration 111 / 200) loss: 1.758603
(Epoch 3 / 5) train acc: 0.388000; val_acc: 0.353000
(Iteration 121 / 200) loss: 1.824496
(Iteration 131 / 200) loss: 1.573791
(Iteration 141 / 200) loss: 1.586312
(Iteration 151 / 200) loss: 1.600374
(Epoch 4 / 5) train acc: 0.414000; val_acc: 0.328000
(Iteration 161 / 200) loss: 1.351332
(Iteration 171 / 200) loss: 1.379117
(Iteration 181 / 200) loss: 1.618205
(Iteration 191 / 200) loss: 1.490465
(Epoch 5 / 5) train acc: 0.431000; val_acc: 0.351000
running with rmsprop
(Iteration 1 / 200) loss: 2.789734
(Epoch 0 / 5) train acc: 0.118000; val_acc: 0.113000
(Iteration 11 / 200) loss: 2.134903
(Iteration 21 / 200) loss: 2.058140
(Iteration 31 / 200) loss: 1.810528
(Epoch 1 / 5) train acc: 0.372000; val_acc: 0.312000
(Iteration 41 / 200) loss: 1.795879
(Iteration 51 / 200) loss: 1.725097
(Iteration 61 / 200) loss: 1.757195
(Iteration 71 / 200) loss: 1.537397
(Epoch 2 / 5) train acc: 0.402000; val_acc: 0.336000
(Iteration 81 / 200) loss: 1.581306
(Iteration 91 / 200) loss: 1.743312
(Iteration 101 / 200) loss: 1.459270
(Iteration 111 / 200) loss: 1.454714
(Epoch 3 / 5) train acc: 0.480000; val_acc: 0.344000
(Iteration 121 / 200) loss: 1.606346
(Iteration 131 / 200) loss: 1.688550
(Iteration 141 / 200) loss: 1.579165
(Iteration 151 / 200) loss: 1.411453
(Epoch 4 / 5) train acc: 0.518000; val_acc: 0.365000
(Iteration 161 / 200) loss: 1.607441
(Iteration 171 / 200) loss: 1.428779
(Iteration 181 / 200) loss: 1.507496
(Iteration 191 / 200) loss: 1.309462
(Epoch 5 / 5) train acc: 0.525000; val_acc: 0.347000

Inline Question 3:

AdaGrad, like Adam, is a per-parameter optimization method that uses the following update rule:

cache += dw**2
w += - learning_rate * dw / (np.sqrt(cache) + eps)

John notices that when he trained a network with AdaGrad, the updates became very small and his network learned slowly. Using your knowledge of the AdaGrad update rule, why do you think the updates would become very small? Would Adam have the same issue?

Answer:

[FILL THIS IN]
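A small numerical experiment may help when thinking about this question. The sketch below applies the AdaGrad rule quoted above and a bias-corrected Adam rule to a single parameter and tracks the step size over 1000 iterations. The setup is an assumption chosen for illustration: the gradient is held constant at 1.0, and the Adam constants (beta1 = 0.9, beta2 = 0.999) are assumed defaults.

```python
import numpy as np

lr, eps = 1e-2, 1e-8
dw = 1.0                    # constant gradient, assumed for illustration
beta1, beta2 = 0.9, 0.999   # assumed Adam constants

adagrad_cache = 0.0
m, v = 0.0, 0.0
adagrad_steps, adam_steps = [], []

for t in range(1, 1001):
    # AdaGrad: the cache is a running sum, so it can only grow.
    adagrad_cache += dw ** 2
    adagrad_steps.append(lr * dw / (np.sqrt(adagrad_cache) + eps))

    # Adam: the second moment is a decaying average, so it stays bounded.
    m = beta1 * m + (1 - beta1) * dw
    v = beta2 * v + (1 - beta2) * dw ** 2
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    adam_steps.append(lr * m_hat / (np.sqrt(v_hat) + eps))

print('AdaGrad step at t=1: %.2e, at t=1000: %.2e' % (adagrad_steps[0], adagrad_steps[-1]))
print('Adam step at t=1:    %.2e, at t=1000: %.2e' % (adam_steps[0], adam_steps[-1]))
```

In this setting the AdaGrad step shrinks like 1/sqrt(t) even though the gradient never changes, while the Adam step stays near the learning rate.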

Train a good model!

Train the best fully-connected model that you can on CIFAR-10, storing your best model in the best_model variable. We require you to get at least 50% accuracy on the validation set using a fully-connected net.

If you are careful it should be possible to get accuracies above 55%, but we don’t require it for this part and won’t assign extra credit for doing so. Later in the assignment we will ask you to train the best convolutional network that you can on CIFAR-10, and we would prefer that you spend your effort working on convolutional nets rather than fully-connected nets.

You might find it useful to complete the BatchNormalization.ipynb and Dropout.ipynb notebooks before completing this part, since those techniques can help you train powerful models.

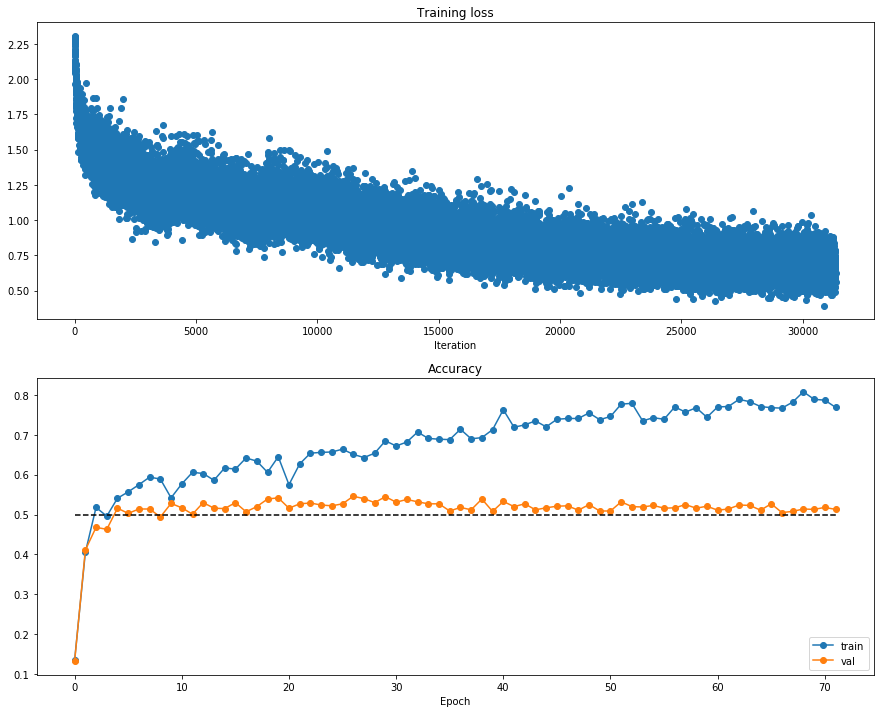

best_model = None
################################################################################
# TODO: Train the best FullyConnectedNet that you can on CIFAR-10. You might   #
# find batch/layer normalization and dropout useful. Store your best model in  #
# the best_model variable.                                                     #
################################################################################
# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
data = get_CIFAR10_data()
weight_scale = 1e-2
learning_rate = 1e-3
update_rule = 'adam'
num_epochs = 20
batch_size = 100
model = FullyConnectedNet([100, 100, 100, 100],
                          weight_scale=weight_scale, dtype=np.float64)
solver = Solver(model, data,
                print_every=100,
                num_epochs=num_epochs,
                batch_size=batch_size,
                update_rule=update_rule,
                optim_config={
                    'learning_rate': learning_rate,
                })
solver.train()
best_model = solver.model
# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
################################################################################
#                              END OF YOUR CODE                                #
################################################################################

(Iteration 1 / 9800) loss: 2.302519
(Epoch 0 / 20) train acc: 0.123000; val_acc: 0.098000
(Iteration 101 / 9800) loss: 1.900525
(Iteration 201 / 9800) loss: 1.867200
(Iteration 301 / 9800) loss: 1.771347
(Iteration 401 / 9800) loss: 1.813210
(Epoch 1 / 20) train acc: 0.362000; val_acc: 0.381000
(Iteration 501 / 9800) loss: 1.869626
(Iteration 601 / 9800) loss: 1.722641
(Iteration 701 / 9800) loss: 1.514980
(Iteration 801 / 9800) loss: 1.572666
(Iteration 901 / 9800) loss: 1.539571
(Epoch 2 / 20) train acc: 0.446000; val_acc: 0.433000
(Iteration 1001 / 9800) loss: 1.402627
(Iteration 1101 / 9800) loss: 1.592149
(Iteration 1201 / 9800) loss: 1.491804
(Iteration 1301 / 9800) loss: 1.510342
(Iteration 1401 / 9800) loss: 1.463611
(Epoch 3 / 20) train acc: 0.450000; val_acc: 0.457000
(Iteration 1501 / 9800) loss: 1.428206
(Iteration 1601 / 9800) loss: 1.410774
(Iteration 1701 / 9800) loss: 1.419294
(Iteration 1801 / 9800) loss: 1.554943
(Iteration 1901 / 9800) loss: 1.276085
(Epoch 4 / 20) train acc: 0.496000; val_acc: 0.487000
(Iteration 2001 / 9800) loss: 1.508825
(Iteration 2101 / 9800) loss: 1.514870
(Iteration 2201 / 9800) loss: 1.378368
(Iteration 2301 / 9800) loss: 1.333612
(Iteration 2401 / 9800) loss: 1.369037
(Epoch 5 / 20) train acc: 0.516000; val_acc: 0.480000
(Iteration 2501 / 9800) loss: 1.569647
(Iteration 2601 / 9800) loss: 1.474409
(Iteration 2701 / 9800) loss: 1.238740
(Iteration 2801 / 9800) loss: 1.469653
(Iteration 2901 / 9800) loss: 1.368814
(Epoch 6 / 20) train acc: 0.487000; val_acc: 0.502000
(Iteration 3001 / 9800) loss: 1.390118
(Iteration 3101 / 9800) loss: 1.232272
(Iteration 3201 / 9800) loss: 1.059411
(Iteration 3301 / 9800) loss: 1.333375
(Iteration 3401 / 9800) loss: 1.245856
(Epoch 7 / 20) train acc: 0.517000; val_acc: 0.508000
(Iteration 3501 / 9800) loss: 1.299509
(Iteration 3601 / 9800) loss: 1.515722
(Iteration 3701 / 9800) loss: 1.377892
(Iteration 3801 / 9800) loss: 1.335273
(Iteration 3901 / 9800) loss: 1.499312
(Epoch 8 / 20) train acc: 0.551000; val_acc: 0.529000
(Iteration 4001 / 9800) loss: 1.377010
(Iteration 4101 / 9800) loss: 1.375806
(Iteration 4201 / 9800) loss: 1.250878
(Iteration 4301 / 9800) loss: 1.326187
(Iteration 4401 / 9800) loss: 1.367880
(Epoch 9 / 20) train acc: 0.528000; val_acc: 0.504000
(Iteration 4501 / 9800) loss: 1.285116
(Iteration 4601 / 9800) loss: 1.112254
(Iteration 4701 / 9800) loss: 1.201709
(Iteration 4801 / 9800) loss: 1.238681
(Epoch 10 / 20) train acc: 0.559000; val_acc: 0.521000
(Iteration 4901 / 9800) loss: 1.049936
(Iteration 5001 / 9800) loss: 1.080508
(Iteration 5101 / 9800) loss: 1.202960
(Iteration 5201 / 9800) loss: 1.155451
(Iteration 5301 / 9800) loss: 1.159336
(Epoch 11 / 20) train acc: 0.537000; val_acc: 0.517000
(Iteration 5401 / 9800) loss: 1.382031
(Iteration 5501 / 9800) loss: 1.111946
(Iteration 5601 / 9800) loss: 1.142069
(Iteration 5701 / 9800) loss: 1.148606
(Iteration 5801 / 9800) loss: 1.178862
(Epoch 12 / 20) train acc: 0.582000; val_acc: 0.520000
(Iteration 5901 / 9800) loss: 1.290777
(Iteration 6001 / 9800) loss: 1.166438
(Iteration 6101 / 9800) loss: 1.259738
(Iteration 6201 / 9800) loss: 1.120008
(Iteration 6301 / 9800) loss: 0.921455
(Epoch 13 / 20) train acc: 0.594000; val_acc: 0.504000
(Iteration 6401 / 9800) loss: 1.115358
(Iteration 6501 / 9800) loss: 1.053265
(Iteration 6601 / 9800) loss: 1.208918
(Iteration 6701 / 9800) loss: 1.028606
(Iteration 6801 / 9800) loss: 1.033682
(Epoch 14 / 20) train acc: 0.587000; val_acc: 0.540000
(Iteration 6901 / 9800) loss: 1.013524
(Iteration 7001 / 9800) loss: 1.187387
(Iteration 7101 / 9800) loss: 1.165351
(Iteration 7201 / 9800) loss: 1.032104
(Iteration 7301 / 9800) loss: 1.008612
(Epoch 15 / 20) train acc: 0.615000; val_acc: 0.520000
(Iteration 7401 / 9800) loss: 1.158815
(Iteration 7501 / 9800) loss: 1.144391
(Iteration 7601 / 9800) loss: 0.828153
(Iteration 7701 / 9800) loss: 1.156747
(Iteration 7801 / 9800) loss: 1.137408
(Epoch 16 / 20) train acc: 0.593000; val_acc: 0.514000
(Iteration 7901 / 9800) loss: 0.961378
(Iteration 8001 / 9800) loss: 1.160585
(Iteration 8101 / 9800) loss: 1.067675
(Iteration 8201 / 9800) loss: 1.011692
(Iteration 8301 / 9800) loss: 1.130812
(Epoch 17 / 20) train acc: 0.612000; val_acc: 0.512000
(Iteration 8401 / 9800) loss: 1.009954
(Iteration 8501 / 9800) loss: 1.316998
(Iteration 8601 / 9800) loss: 1.033570
(Iteration 8701 / 9800) loss: 1.136633
(Iteration 8801 / 9800) loss: 1.166442
(Epoch 18 / 20) train acc: 0.590000; val_acc: 0.523000
(Iteration 8901 / 9800) loss: 1.185326
(Iteration 9001 / 9800) loss: 1.111609
(Iteration 9101 / 9800) loss: 1.142837
(Iteration 9201 / 9800) loss: 1.153727
(Iteration 9301 / 9800) loss: 1.288469
(Epoch 19 / 20) train acc: 0.628000; val_acc: 0.526000
(Iteration 9401 / 9800) loss: 1.233846
(Iteration 9501 / 9800) loss: 0.979883
(Iteration 9601 / 9800) loss: 1.080138
(Iteration 9701 / 9800) loss: 1.035944
(Epoch 20 / 20) train acc: 0.613000; val_acc: 0.520000

Test your model!

Run your best model on the validation and test sets. You should achieve above 50% accuracy on the validation set.

y_test_pred = np.argmax(best_model.loss(data['X_test']), axis=1)
y_val_pred = np.argmax(best_model.loss(data['X_val']), axis=1)
print('Validation set accuracy: ', (y_val_pred == data['y_val']).mean())
print('Test set accuracy: ', (y_test_pred == data['y_test']).mean())

Validation set accuracy: 0.54
Test set accuracy: 0.487
