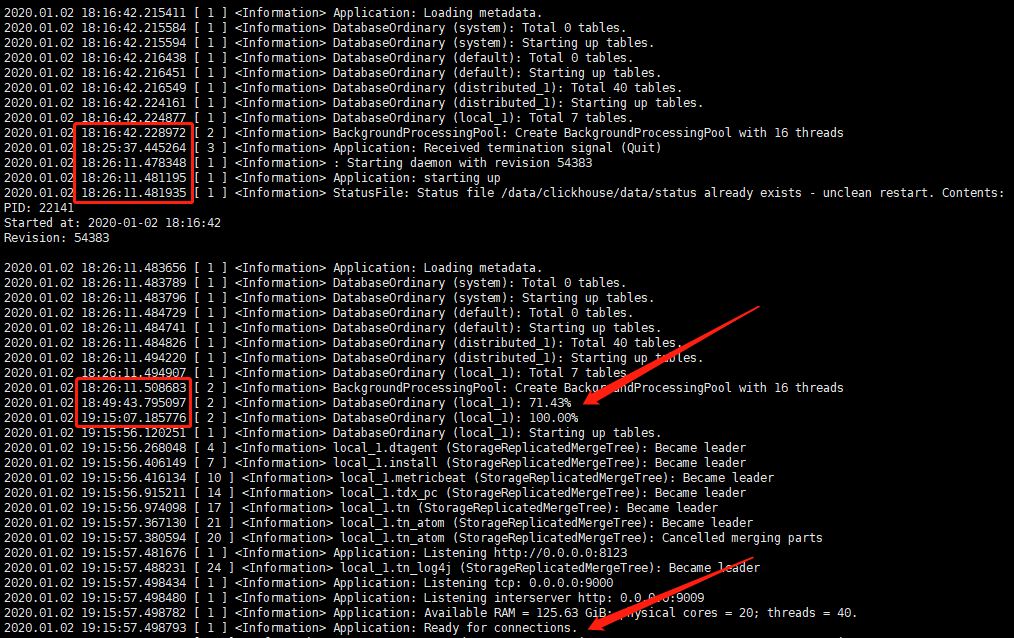

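1. Startup can take a long time, and how long depends on the memory parameter settings. The configuration in the screenshot is for a machine with 10 GB of physical memory.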

2. Adjust the `merge_tree` parameters in config.xml:

```
[root@localhost conf]# cat config.xml|grep merge -A10
<merge_tree>
    <max_suspicious_broken_parts>5</max_suspicious_broken_parts>
    <parts_to_delay_insert>300</parts_to_delay_insert>
    <parts_to_throw_insert>600</parts_to_throw_insert>
    <max_delay_to_insert>2</max_delay_to_insert>
</merge_tree>
```
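`grep -A10` only shows whatever follows the match. A small parser can pull the whole `merge_tree` block and check that the two part thresholds are in a sensible order. The sketch below makes two assumptions. First, the snippet's `<yandex>` root element follows the older ClickHouse config layout. Second, the function names and the "delay threshold must be below throw threshold" check are illustrative and are not a ClickHouse tool.

```python
import xml.etree.ElementTree as ET

# Assumed example config; the <yandex> root mirrors older ClickHouse config files.
CONFIG_SNIPPET = """
<yandex>
  <merge_tree>
    <max_suspicious_broken_parts>5</max_suspicious_broken_parts>
    <parts_to_delay_insert>300</parts_to_delay_insert>
    <parts_to_throw_insert>600</parts_to_throw_insert>
    <max_delay_to_insert>2</max_delay_to_insert>
  </merge_tree>
</yandex>
"""


def read_merge_tree_settings(xml_text: str) -> dict[str, int]:
    """Return the integer settings inside the first <merge_tree> element, if any."""
    root = ET.fromstring(xml_text.strip())
    block = root.find(".//merge_tree")
    if block is None:
        return {}
    return {child.tag: int(child.text.strip()) for child in block}


def check_merge_tree_settings(settings: dict[str, int]) -> list[str]:
    """Hypothetical sanity check: inserts should be delayed before they are rejected."""
    problems = []
    delay = settings.get("parts_to_delay_insert")
    throw = settings.get("parts_to_throw_insert")
    if delay is not None and throw is not None and delay >= throw:
        problems.append(
            f"parts_to_delay_insert ({delay}) should be below parts_to_throw_insert ({throw})"
        )
    return problems
```

For the values above, `check_merge_tree_settings(read_merge_tree_settings(CONFIG_SNIPPET))` returns an empty list.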

What does "parts" actually mean here?

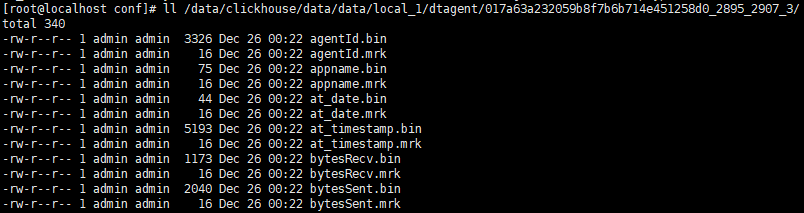

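Each INSERT creates a folder under /path/to/clickhouse/…/table_name/. Inside that folder there are 2 files per column: one containing the (compressed) data and one containing the index. The data in these files is physically sorted by the primary key. For example: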
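The following is a toy model of the behaviour just described: one INSERT produces one part. Its rows are sorted by the primary key and stored column by column, with two files per column. The `part_N` folder names and the `.data`/`.index` suffixes are hypothetical placeholders and are not ClickHouse's real on-disk names. The model ignores compression and how the index works internally.

```python
from dataclasses import dataclass
from itertools import count

_part_ids = count(1)  # hypothetical counter used only to name part folders


@dataclass
class Part:
    """One INSERT's worth of data: a folder holding per-column files."""
    name: str
    columns: dict[str, list]  # column name -> values, already sorted by primary key

    def files(self) -> list[str]:
        # Two files per column, as in the text: (compressed) data + index.
        # The suffixes are illustrative, not ClickHouse's actual file names.
        return [f"{self.name}/{col}{suffix}"
                for col in self.columns
                for suffix in (".data", ".index")]


def insert(rows: list[dict], primary_key: str) -> Part:
    """Model a single INSERT: sort rows by the primary key, then store them by column."""
    ordered = sorted(rows, key=lambda r: r[primary_key])
    names = list(ordered[0].keys()) if ordered else []
    columns = {c: [r[c] for r in ordered] for c in names}
    return Part(name=f"part_{next(_part_ids)}", columns=columns)
```

Calling `insert([{"id": 3, "v": "c"}, {"id": 1, "v": "a"}], primary_key="id")` yields a part whose `columns` are `{"id": [1, 3], "v": ["a", "c"]}`. Every call creates a new part, which is why many small inserts produce many parts.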

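In the background, ClickHouse merges smaller parts into larger ones, choosing which parts to merge according to a set of rules. Once two (or more) parts have been merged, a single larger part is created and the old parts are queued for deletion.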
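Each part is already sorted by the primary key, so combining several parts only needs a k-way merge and not a full re-sort. The sketch below shows that idea with `heapq.merge` and queues the old parts for later deletion. Rows are assumed to be tuples whose first element is the primary key. ClickHouse's own rules for *choosing* which parts to merge are not modelled here; the caller simply passes the parts in.

```python
import heapq
from collections import deque

# Assumption: each part is a list of rows sorted by key, each row a tuple
# of the form (primary_key, *other_columns).


def merge_parts(parts: list[list[tuple]], deletion_queue: deque) -> list[tuple]:
    """Merge already-sorted parts into one larger sorted part.

    The old parts are not dropped immediately; they are appended to
    deletion_queue, mirroring "old parts are queued for deletion".
    """
    merged = list(heapq.merge(*parts, key=lambda row: row[0]))
    deletion_queue.extend(parts)
    return merged
```

The merge is linear in the total number of rows (times log of the number of parts). This is one reason merging sorted parts in the background is cheap compared to sorting on every insert.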

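If you create new parts too quickly (for example, by running many small INSERTs) and ClickHouse cannot merge them fast enough, it throws an exception saying "Merges are processing significantly slower than inserts". You can try raising the limits (such as parts_to_delay_insert and parts_to_throw_insert in config.xml). However, you may then run into filesystem problems caused by too many files and directories (for example, running out of inodes).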
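Below is a model of how the three `merge_tree` settings from config.xml interact. Once the number of active parts in a partition reaches `parts_to_delay_insert`, each INSERT is slowed down, up to `max_delay_to_insert` seconds. At `parts_to_throw_insert` the INSERT is rejected. The exponential delay curve follows my reading of ClickHouse's MergeTree insert throttling in versions from around that time. Treat the exact formula as an assumption that may differ between versions; this is a model, not ClickHouse code.

```python
def insert_delay_ms(parts_in_partition: int,
                    parts_to_delay_insert: int = 300,
                    parts_to_throw_insert: int = 600,
                    max_delay_to_insert: int = 2) -> float:
    """Approximate INSERT back-pressure for one partition, in milliseconds.

    Defaults are the values from the config.xml shown above.
    Raises once the part count reaches the throw threshold.
    """
    if parts_in_partition >= parts_to_throw_insert:
        # Message wording modelled on the exception quoted in the text.
        raise RuntimeError(
            f"Too many parts ({parts_in_partition}). "
            "Merges are processing significantly slower than inserts"
        )
    if parts_in_partition < parts_to_delay_insert:
        return 0.0  # below the delay threshold: no throttling
    max_k = parts_to_throw_insert - parts_to_delay_insert   # assumed > 0
    k = 1 + parts_in_partition - parts_to_delay_insert      # 1 .. max_k
    # Delay grows exponentially from about 1 ms up to max_delay_to_insert seconds.
    return (max_delay_to_insert * 1000) ** (k / max_k)
```

With these settings, 299 parts give no delay, 300 parts give roughly 1 ms, 599 parts give the full 2000 ms, and 600 parts raise. Raising `parts_to_throw_insert` only buys time. It does not fix the underlying problem of too many small inserts.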

3.

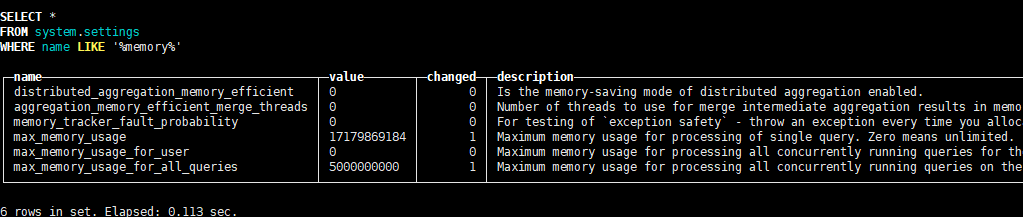

Adjust the memory parameters in users.xml:

```xml
<max_memory_usage>17179869184</max_memory_usage>
<max_memory_usage_for_all_queries>5000000000</max_memory_usage_for_all_queries>
<max_bytes_before_external_group_by>1000000000</max_bytes_before_external_group_by>
<max_bytes_before_external_sort>1000000000</max_bytes_before_external_sort>
```
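These byte values are easier to judge in GiB: 17179869184 is exactly 16 GiB, 5000000000 is about 4.66 GiB, and 1000000000 is about 0.93 GiB. The sketch below converts them and flags combinations that look inconsistent. It assumes that the "10G" in item 1 means 10 GiB. The `review_limits` helper and its warning texts are hypothetical and are not part of ClickHouse.

```python
GIB = 1024 ** 3

# Values copied from the users.xml snippet above.
USERS_XML_LIMITS = {
    "max_memory_usage": 17179869184,
    "max_memory_usage_for_all_queries": 5000000000,
    "max_bytes_before_external_group_by": 1000000000,
    "max_bytes_before_external_sort": 1000000000,
}


def to_gib(n_bytes: int) -> float:
    return n_bytes / GIB


def review_limits(limits: dict[str, int], physical_ram_bytes: int) -> list[str]:
    """Return human-readable warnings about suspicious memory settings."""
    warnings = []
    per_query = limits["max_memory_usage"]
    if per_query > physical_ram_bytes:
        warnings.append(
            f"max_memory_usage ({to_gib(per_query):.1f} GiB) exceeds "
            f"physical RAM ({to_gib(physical_ram_bytes):.1f} GiB)"
        )
    all_queries = limits.get("max_memory_usage_for_all_queries")
    if all_queries is not None and per_query > all_queries:
        warnings.append(
            f"max_memory_usage ({to_gib(per_query):.1f} GiB) is larger than "
            f"max_memory_usage_for_all_queries ({to_gib(all_queries):.2f} GiB)"
        )
    for name in ("max_bytes_before_external_group_by", "max_bytes_before_external_sort"):
        threshold = limits.get(name)
        # If the spill threshold is not below the per-query limit, spilling to disk
        # may never kick in before the query hits the memory limit.
        if threshold is not None and threshold >= per_query:
            warnings.append(f"{name} is not below max_memory_usage")
    return warnings
```

Under the 10 GiB assumption, `review_limits(USERS_XML_LIMITS, 10 * GIB)` reports two warnings. The per-query limit is larger than both the physical RAM and the all-queries limit, so a single query would presumably hit one of the smaller ceilings first.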

4.

```
2020.01.10 03:23:05.494331 [ 37403 ] {a0930945-3231-473d-8d15-0480f071368f}
```
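The log line in item 4 appears to follow the usual ClickHouse server-log prefix: a timestamp with microseconds, the thread id in square brackets, and the query id in braces. The rest of the message is cut off in these notes. If you want to correlate such lines (for example, all lines of one query), a parser like the sketch below can help. The field layout is an assumption based on this one line, and `parse_log_prefix` is a hypothetical helper.

```python
import re
from datetime import datetime

# Assumed prefix layout: "<date> <time.micros> [ <thread_id> ] {<query_id>}"
LOG_PREFIX = re.compile(
    r"^(?P<ts>\d{4}\.\d{2}\.\d{2} \d{2}:\d{2}:\d{2}\.\d{1,6}) "
    r"\[ (?P<thread>\d+) \] "
    r"\{(?P<query_id>[^}]*)\}"
)


def parse_log_prefix(line: str):
    """Return timestamp, thread id and query id, or None if the line doesn't match."""
    m = LOG_PREFIX.match(line)
    if m is None:
        return None
    return {
        "timestamp": datetime.strptime(m["ts"], "%Y.%m.%d %H:%M:%S.%f"),
        "thread_id": int(m["thread"]),
        "query_id": m["query_id"],
    }
```

Grouping parsed lines by `query_id` is a quick way to pull out everything logged for the failing query.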