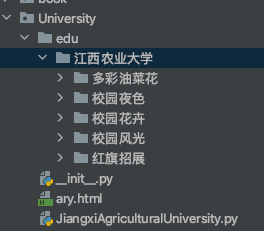

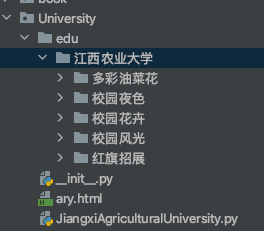

    import os
    from hashlib import md5

    import requests
    from bs4 import BeautifulSoup


    class JXAriUniversity(object):
        def __init__(self):
            self.baseURL = 'http://www.jxau.edu.cn/10/list.htm'
            self.edu = 'http://www.jxau.edu.cn'
            self.headers = {
                'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.183 Mobile Safari/537.36'
            }

        def respose(self, url):
            # Fetch a page and return its decoded HTML.
            content = requests.get(url, headers=self.headers).content.decode()
            return content

        def getRequest(self):
            # Collect the link and title of every entry on the news list page.
            content = self.respose(self.baseURL)
            soup = BeautifulSoup(content, 'lxml')
            news_items = soup.select('.news_list li')
            edu_list = []
            for item in news_items:
                entry = {}
                entry['href'] = self.edu + item.select_one('a').get('href')
                entry['title'] = item.select_one('a').get('title')
                edu_list.append(entry)

            # Visit each article and save every image in its body.
            for entry in edu_list:
                edu_url = entry['href']
                edu_title = entry['title']
                edu_html = self.respose(edu_url)
                soup_edu = BeautifulSoup(edu_html, 'lxml')
                image_list = soup_edu.select('.wp_articlecontent img')
                for image in image_list:
                    image_url = self.edu + image.get('src')
                    self.saveImage(image_url, 'edu/江西农业大学/{}'.format(edu_title))

        def saveImage(self, image_url, file_path):
            response = requests.get(image_url)
            if response.status_code == 200:
                data = response.content
                try:
                    if not os.path.exists(file_path):
                        print('Folder', file_path, 'does not exist; creating it')
                        os.makedirs(file_path)
                    # Get the image file extension
                    file_suffix = os.path.splitext(image_url)[1]
                    # Build the image file name (including the path)
                    filename = '{}/{}{}'.format(file_path, md5(data).hexdigest(), file_suffix)
                    with open(filename, 'wb') as f:
                        f.write(data)
                except IOError as e:
                    print('File operation failed: {}'.format(e))
                except Exception as e:
                    print('Error: {}'.format(e))

        def run(self):
            self.getRequest()


    if __name__ == '__main__':
        JXAriUniversity().run()
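
The script above builds image URLs by string concatenation and takes the file extension straight from the URL. As a minimal sketch of how those two steps could be made a little more robust, the helpers below use `urljoin` and `urlsplit` from the standard library. The function names `resolve_image_url` and `build_image_path` and the sample paths are hypothetical and are not part of the original script. The sketch assumes that some `src` values may already be absolute or may carry a query string, which has not been verified against the site.

```python
import os
from hashlib import md5
from urllib.parse import urljoin, urlsplit

BASE = 'http://www.jxau.edu.cn'  # site root, same value as self.edu in the script


def resolve_image_url(src, base=BASE):
    """Hypothetical helper: turn an <img> src into an absolute URL.

    urljoin leaves already-absolute URLs untouched and resolves
    relative ones against the site root.
    """
    return urljoin(base + '/', src)


def build_image_path(file_path, image_url, data):
    """Hypothetical helper: name the file after the MD5 of its bytes."""
    # Take the extension from the URL path only, ignoring any query string.
    suffix = os.path.splitext(urlsplit(image_url).path)[1]
    return '{}/{}{}'.format(file_path, md5(data).hexdigest(), suffix)


if __name__ == '__main__':
    # Sample values below are made up for illustration.
    url = resolve_image_url('/_upload/sample.jpg?v=1')
    print(url)
    print(build_image_path('edu/demo', url, b'abc'))
```

Naming each file after the MD5 digest of its content, as the original script does, means the same image downloaded twice overwrites itself instead of producing a duplicate.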